TL;DR

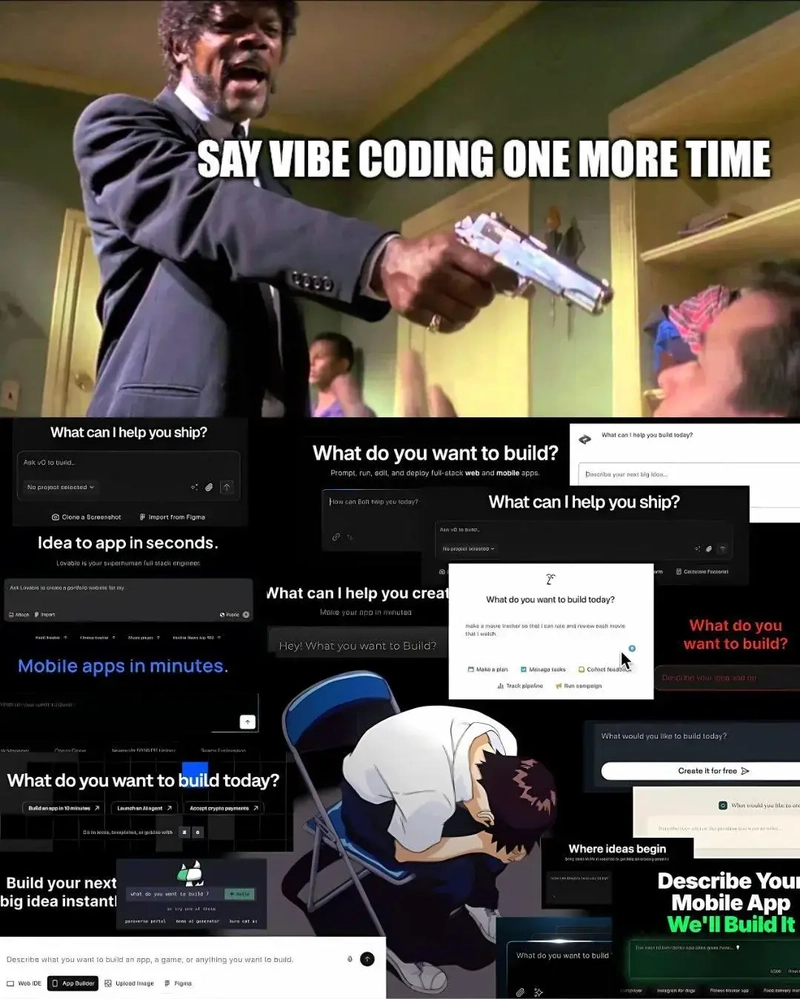

If you're hiring vibe coders, think again before it's too late. This post isn't about dissing Dioxus; it's about raising awareness around the fragility of modern software engineering, especially when inexperienced developers use powerful tools. Always hire engineers with the proper experience, particularly when working in critical areas like full-stack development. Vibe coding isn't inherently bad, but when handed to the wrong people, it becomes a dangerous practice.

Introduction

Hello friends 👋!

Today I want to share with you a deep frustration, a boiling discontent built up over two sleepless weekends trying to report and explain multiple security vulnerabilities to a well-known and publicly available open-source project in the Rust ecosystem: Dioxus. For those unfamiliar, Dioxus is a modern full-stack UI framework for Rust, and its promises are big. It aims to deliver the kind of seamless development experience we've grown to expect from JavaScript ecosystems, but powered by Rust's memory safety and type system. While its goals are admirable and its developers clearly passionate, what I've encountered has exposed a darker side of the modern "move fast and break things" mindset that has seeped into even our most robust and theoretically secure ecosystems.

This blog post is a reflection on not just Dioxus itself, but the overall degeneration of software engineering practices caused by what I refer to as "vibe coding". It's not an attack on Dioxus as a tool or its creators personally. Instead, this is a petition to the industry to take security seriously. Dioxus merely serves as a case study, a concrete example of the dangers of prioritizing developer experience and hype over secure engineering principles. And before anyone gets defensive, understand: I've been in this field for years, if you measure experience by sleepless hours, millions of lines of code written, and real systems that were actually used, and I've dealt with real security threats. This isn't my first time doing this.

The title is provocative, yes, but it is also literal. Vibe coding is destroying the very foundation of what software engineering used to mean: precision, responsibility, reliability, security. I completely understand that product managers and investors breathe down your neck for fast delivery. I've shipped code under pressure. I've dealt with the "why isn't it done yet?" glare. But none of that justifies ignoring the fundamentals. We're not building TikTok filters; we're constructing software that handles real data, affects real users, and, in some cases, controls real-world infrastructure. A culture of shipping at all costs with minimal inspection is not only irresponsible, it's dangerous.

This post will take you through multiple security flaws discovered in Dioxus over the past two weeks. It will explain how these issues could have been avoided with proper engineering practices and why vibe coding, where the developer is more concerned about the aesthetic or the "feel" of writing code rather than understanding the underlying system, leads to systemic failures. And yes, while some Dioxus maintainers may claim these aren't "security issues", the principles of secure software design demand we treat them as such.

What is a Software Engineer / Developer?

Before we get into the complicated stuff, let's clarify some terminology that the industry often uses interchangeably but should not. A software engineer is fundamentally different from a developer. While both write code, the responsibilities and mindset between the two are worlds apart. A software engineer is someone who is capable of designing systems from first principles. They understand memory models, design constraints, threat surfaces, performance bottlenecks, and scalability trade-offs. They don't just write code, they architect robust, maintainable, and secure systems that solve real-world problems in an optimized manner.

On the other hand, a software developer is typically focused on building within the confines of those systems. They may be responsible for adding features, fixing bugs, tweaking performance, or even just connecting APIs together in a frontend interface. There's nothing inherently wrong with being a developer. But conflating the two leads to mismatched expectations, especially in hiring processes or in open-source communities where contributions affect thousands of users.

Why is this distinction important in a post about Dioxus and vibe coding? Because Dioxus, like many modern frameworks, abstracts away a lot of complexity, which makes it very inviting to developers who are new or who may not understand the consequences of their actions. This abstraction isn't evil by itself. In fact, well-designed abstraction is one of the cornerstones of good engineering. But when those abstractions are handed to people who don't fully understand the implications of the code they're writing, particularly in a systems language like Rust, we get insecure software.

Software engineers are responsible not just for the code they write but for the consequences of that code in production. When a type system is bypassed, when untrusted input is handled without validation, when unsafe code is introduced without proper justification and bounds checking, that is not a momentary lapse in judgment. That is a systemic failure in design responsibility. That's what vibe coding enables.

Ultimately, the security and integrity of an application lies in the hands of the engineers behind it. If something goes wrong, pointing fingers at the "framework" or the "community" is insufficient. The engineer must accept responsibility. And so, if a tool encourages insecure patterns or fails to enforce good practices, it's not just a developer problem, it's a failure of engineering culture, documentation, and design. This is where Dioxus, as a case study, becomes illustrative.

What is Vibe Coding?

Let's now take a moment to define this central concept: vibe coding. Vibe coding is the act of programming based on "feel" rather than understanding. It's when a developer wires together code snippets they've seen in documentation, Stack Overflow, or AI-generated outputs without understanding the system's internals. It's the act of treating your framework or language as a magical black box, feeding it inputs, hoping for the right outputs, and assuming that "if it compiles, it's probably fine".

Vibe coding is particularly dangerous in systems programming. Languages like Rust were designed to enforce safety through strict compile-time guarantees. But even Rust cannot protect your system if you deliberately (or ignorantly) bypass those guarantees. Unsafe blocks, dynamic plugin systems, stringly-typed APIs, all of these are opportunities for subtle, dangerous bugs when wired without understanding.

When someone vibe codes a UI in React, the worst-case scenario might be a broken button or a misaligned div. When someone vibe codes a server function in Dioxus using unsafe function pointers, CSRF-vulnerable APIs, or SSRF-prone static site generation, the consequences scale up quickly. And yet, because modern frameworks optimize for developer ergonomics, they don't always nudge the developer toward secure or well-reasoned patterns. They prioritize speed, simplicity, and joy. But joy in programming should not come at the expense of discipline.

It raises an important question: when software fails, who is responsible? The developer who vibe coded it? The framework that encouraged it? The runtime that failed to detect it? In truth, all share a slice of the blame. But the lion's share falls upon the person who wrote the code. Because the machine does not write your software. It merely executes it. No matter how advanced LLMs become or how "magical" our dev tools feel, the human remains in the loop.

This is not just a philosophical discussion. It has real-world implications, as the next section will demonstrate. Because over the course of two weekends, I went deep into Dioxus's internals, not as a casual user, but as a software engineer intent on breaking it open and seeing what lies beneath. What I found was troubling. What I reported was met with dismissal. And what I learned is that we are sleepwalking into a new era of insecure software built by developers who don't know what they're building.

Hacking Dioxus

I've been using Dioxus full-time for over 7 months. Not passively. Not as a toy. I've used it in production systems, built fullstack apps, integrated with WebAssembly, deployed to cloud environments, and experimented with server-side rendering and static generation. My feedback comes not from a place of friction, but from a place of personal familiarity. And what I found during a deep audit of its codebase should concern anyone using or contributing to the project.

Let's begin with the first vulnerability: Open Redirect in the Link component.

Open Redirect Vulnerability

This issue was reported here. It may sound minor, but its implications are not. The Dioxus Link component currently accepts arbitrary strings as its to parameter. That means a developer can write something like:

Link { to: "https://some-malicious-website.com" }

The maintainers argue that this is fine: it's up to the developer to decide whether they're linking internally or externally. But here's the catch: the Link component is part of the Dioxus router, which is meant for internal navigation. It exists to manage in-app routing, maintain client-side history, and provide a seamless UX without full page reloads. Allowing arbitrary external URLs through this component breaks the contract of trust between the developer and the router system. It blurs the line between internal routing and external redirection in a way that opens up phishing and redirect attack vectors.

Compare this to Yew, a Rust framework that does this correctly. Yew's Link component only accepts values from a Routable enum. This enforces compile-time guarantees that a route is valid and internal. You cannot accidentally pass in a user-controlled string and redirect them to a malicious site. That's type safety. That's Rust's promise. And that's what Dioxus breaks.

So when I filed this as a vulnerability, I wasn't just nitpicking. I was advocating for Dioxus to honor Rust's philosophy: preventing errors at compile time wherever possible. The maintainers disagreed. One even said, dismissively, "This is not a security vulnerability and it's ridiculous to claim it is." That kind of tone is not only unprofessional, it's dangerous. It discourages responsible disclosure. It chills open source security culture. And it misses the point entirely.

I proposed a simple fix: if a user wants to link to an external site, use the standard HTML a tag:

a { href: "https://google.com" }

And if the user wants in-app routing, use:

Link { to: Route::Home }

This ensures type safety, separation of concerns, and better DX without compromising security. A win-win. But the maintainers were not interested. Perhaps because this fix wasn't "vibe" enough.
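To make the difference concrete, here is a minimal sketch of the two approaches in plain Rust, with no framework involved. The `Route` enum, `to_path`, and `is_internal_path` are hypothetical names made up for this illustration; they are not the Dioxus or Yew API. The enum shows why a typed target cannot point off-site, and the string check is a deliberately conservative fallback for the cases where a target really does arrive as a string.

```rust
/// Hypothetical route enum for illustration; not the Dioxus or Yew API.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Route {
    Home,
    Profile { id: u32 },
}

impl Route {
    /// Every variant maps to a path inside the app, so a value of this
    /// type can never describe an external URL.
    pub fn to_path(self) -> String {
        match self {
            Route::Home => "/".to_string(),
            Route::Profile { id } => format!("/profile/{id}"),
        }
    }
}

/// Runtime fallback for targets that arrive as strings (for example a
/// `?next=` query parameter). Conservative by design: it may reject some
/// harmless paths, but it only accepts same-origin ones.
pub fn is_internal_path(target: &str) -> bool {
    target.starts_with('/')
        && !target.starts_with("//") // protocol-relative URL such as //evil.example
        && !target.contains('\\') // some browsers treat '\' like '/'
        && !target.contains("://") // embedded absolute URL
        && !target.chars().any(|c| c.is_control())
}
```

With the enum, a mistake like passing a user-controlled URL to an internal link is a compile error rather than something a reviewer has to catch. The string check is only needed at the boundary where untyped input enters the app.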

CSRF in Server Functions

Let's start by analyzing how CSRF works at a technical level and why it becomes a critical threat when not properly mitigated in server-side APIs, especially those serving frontend clients over the web. Cross-Site Request Forgery is a well-known and dangerous form of attack where an attacker tricks a user into performing unwanted actions on a web application in which they're currently authenticated. These actions can range from changing account settings and submitting data to making financial transactions. The core vulnerability comes from the fact that browsers automatically attach cookies to requests, including session cookies and any authentication tokens stored in them, making the victim's authenticated state exploitable if requests are not validated properly. In this case, Dioxus's server functions, designed to provide developer-friendly async APIs with automatic serialization and routing, lack built-in CSRF protection, which puts developers in a precarious position where secure-by-default isn't guaranteed.

When I proposed the #[with_csrf] macro, it wasn't just about syntactic sugar or convenience; it was about aligning the Dioxus stack with the principle of secure defaults, something that the Rust language and its ecosystem pride themselves on. Rust's core philosophy is centered around safety and correctness. The moment we shift that burden of correctness entirely onto the developer, we break that implicit promise. Let's take frameworks like Next.js as an example. Even though it's written in JavaScript, an infamously permissive and unsafe language, Next.js still goes out of its way to encourage CSRF tokens and offers middleware and utilities that reduce the chance of such oversights. The argument that Dioxus shouldn't be responsible for CSRF because it doesn't manage sessions or authentication directly is, in my opinion, insufficient. Providing security primitives like CSRF tokens should be the minimum any modern fullstack web framework offers. This isn't asking for a feature; it is about foundational safety in a connected world where web exploitation is the norm, not the exception.

Furthermore, from a developer experience standpoint, introducing a #[with_csrf] procedural macro adds virtually no additional cognitive overhead, but dramatically improves the likelihood that server functions are protected against CSRF attacks. The proposed implementation could easily check for a valid X-CSRF-Token in the request headers and validate it against a signed session token. This is similar to what popular frameworks like Django and Laravel have done for years. It's a battle-tested pattern. What I'm asking for isn't new or revolutionary, it's standard, mature, and secure. What makes Rust unique is that it allows us to do all this at compile time with strong type checking, minimizing room for human error.
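As a rough sketch of the check such a macro could expand to, the snippet below compares the `X-CSRF-Token` header against the token stored for the session. Everything here is an assumption for illustration: `check_csrf`, `CsrfError`, and the `HashMap` of lowercase header names are made-up stand-ins, not Dioxus APIs. It also skips the signing step; a real implementation would derive the session token with an HMAC from a vetted crypto crate.

```rust
use std::collections::HashMap;

/// Compare two byte strings without returning early on the first mismatch,
/// so timing does not reveal how many leading bytes matched.
fn constant_time_eq(a: &[u8], b: &[u8]) -> bool {
    if a.len() != b.len() {
        return false;
    }
    let mut diff = 0u8;
    for (x, y) in a.iter().zip(b.iter()) {
        diff |= x ^ y;
    }
    diff == 0
}

#[derive(Debug, PartialEq)]
pub enum CsrfError {
    MissingHeader,
    Mismatch,
}

/// Hypothetical guard a `#[with_csrf]`-style macro could call before the
/// server function body runs. `headers` is assumed to use lowercase names;
/// `session_token` is the token the server stored for this session.
pub fn check_csrf(
    headers: &HashMap<String, String>,
    session_token: &str,
) -> Result<(), CsrfError> {
    let sent = headers
        .get("x-csrf-token")
        .ok_or(CsrfError::MissingHeader)?;
    if constant_time_eq(sent.as_bytes(), session_token.as_bytes()) {
        Ok(())
    } else {
        Err(CsrfError::Mismatch)
    }
}
```

The point of the sketch is the shape, not the details: the check runs before any business logic, and a missing token is treated as a failure rather than silently allowed.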

Now, when I raised this as an issue, I was met with the counterpoint that enforcing CSRF tokens universally would restrict valid use cases, like calling server functions from unauthenticated APIs or external clients. And yes, that's technically true, but that's precisely why I suggested making it an opt-in system. This is not about enforcing behavior globally, but about giving developers the option to choose the secure path with minimal friction. If you're building an app where CSRF is not a concern, you simply don't add the macro. If you're building an app that deals with forms, user inputs, account management, or anything remotely sensitive, you slap #[with_csrf] on top of the server function and move on with confidence. How is that a bad tradeoff?

It also seems like the Dioxus team is deeply committed to keeping the framework light and developer-friendly, which I greatly respect. In fact, I admire the effort that has gone into the router, server functions, and CLI tooling. However, friendliness should not come at the cost of safety. Even if we assume that most developers are smart and security-conscious, we cannot assume that every developer is. Security must be idiot-proof, not because we think developers are idiots, but because the stakes are just that high. A forgotten CSRF token is not an academic problem; it's a potential PR disaster or a data leak. And in today's hyper-connected world, one data breach is all it takes to lose user trust, investor confidence, and sometimes even legal ground under GDPR or CCPA.

If the Dioxus maintainers are concerned about maintaining clarity in the macro system, there are several routes to improve this. The macro could emit compile-time warnings if used incorrectly. We could even generate server logs that explain what the CSRF system is doing during runtime in debug mode. Better yet, we could allow users to configure a CSRF strategy at a higher level, something like ServerConfig::enable_csrf_protection(true) which makes every server function CSRF-aware by default, unless explicitly opted out. There are a dozen sane, ergonomic design paths we can follow to achieve this goal, and none of them degrade the developer experience.
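One way the server-wide switch with explicit opt-out could be modeled is sketched below. None of these names exist in Dioxus: `ServerConfig`, `CsrfPolicy`, and `requires_csrf` are hypothetical, and the builder-style `enable_csrf_protection` is just one possible shape for the idea.

```rust
/// Hypothetical per-function setting; none of these names exist in Dioxus.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum CsrfPolicy {
    /// Follow the server-wide default.
    Inherit,
    /// Always check, even if the default is off.
    Required,
    /// Explicit opt-out, for example a public webhook.
    Exempt,
}

#[derive(Debug, Clone, Copy, Default)]
pub struct ServerConfig {
    csrf_by_default: bool,
}

impl ServerConfig {
    /// Builder-style switch for the server-wide default.
    pub fn enable_csrf_protection(mut self, enabled: bool) -> Self {
        self.csrf_by_default = enabled;
        self
    }

    /// Decide whether a given server function must pass the CSRF check.
    pub fn requires_csrf(&self, policy: CsrfPolicy) -> bool {
        match policy {
            CsrfPolicy::Inherit => self.csrf_by_default,
            CsrfPolicy::Required => true,
            CsrfPolicy::Exempt => false,
        }
    }
}
```

The useful property is that opting out is visible in the code: a reviewer can search for `Exempt` and audit every function that skips the check.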

I want to emphasize again that this isn't just about Dioxus. The Rust ecosystem needs to have a broader conversation about security ergonomics. We've gotten excellent at zero-cost abstractions, safe concurrency, and data race prevention, but web security, in the fullstack space, still feels like a second-class citizen. Libraries like axum, actix, and warp have CSRF middleware maintained by third parties. This fragmentation is bad for the ecosystem and makes it harder for new developers to follow best practices. A modern fullstack web framework like Dioxus is the perfect place to show leadership and establish secure defaults out-of-the-box. It sets a strong precedent for other libraries to follow.

I've seen too many developers brush off CSRF as "not their problem", only to end up doing damage control after their app is compromised. A decade ago, this was forgivable. Today, it's not. So let me say it loud and clear for anyone reading this: if your app handles user input, authentication, or sensitive data, and you're not protecting your server functions from CSRF, then you are shipping a vulnerable app. It doesn't matter whether you used Rust or Brainfuck or Haskell. Security is language-agnostic. And it's high time we stop excusing unsafe defaults just because they make onboarding easier.

DoS Caused by Arbitrary Function Pointer Transmute

This next issue is probably one of the most technically shocking vulnerabilities I came across while exploring the internals of the Dioxus fullstack server runtime. Specifically, it involves the use of unsafe Rust code to transmute raw function pointers during hot reload operations in development mode. The relevant code resides in the hot reload path and involves this line:

let new_root = unsafe {
    std::mem::transmute::<*const (), fn() -> Element>(new_root_addr)
};

At a glance, the casual observer might not see why this is an issue, especially since this block of code is clearly marked as part of the development hot reload infrastructure. But anyone with experience in systems programming, or anyone who has ever been burned by undefined behavior in C or C++, should immediately see red flags here. Transmuting a raw pointer to a function pointer is a classically dangerous move unless you are absolutely sure that the pointer is valid and correctly aligned. In this case, the assumption is that the hot reload system can blindly accept any memory address provided via the loader and call it like a proper function. This is just asking for a segmentation fault, or worse.

And indeed, that's exactly what happens. If an invalid or malformed function pointer is introduced, intentionally or otherwise, the result is a runtime crash. You can trivially reproduce this by sending a bogus pointer to the reload system, causing the entire Dioxus fullstack server to segfault. This is not merely a bug. It is a weaponizable DoS vector, especially if an attacker can influence the plugin loading system. Even though the maintainers are correct that this only affects development builds, we cannot ignore the implications: developers running hot reload tools are now at risk of crashing their dev servers with malformed inputs. More importantly, this kind of unchecked unsafe logic sends the wrong message about Rust safety practices in high-level frameworks.

Let me be clear: the use of unsafe in Rust is sometimes unavoidable. Rust gives you the unsafe keyword not to avoid safety, but to isolate and explicitly mark operations whose soundness the compiler cannot verify and that would otherwise be impossible in safe code. But when using unsafe, you are entering a contract with the compiler: you must manually uphold all the guarantees that Rust normally provides for you. That includes pointer validity, lifetime correctness, memory alignment, and type soundness. Transmuting a raw pointer into a function pointer puts all of these at risk unless handled with surgical precision. The current Dioxus code does none of that validation. It simply transmutes and executes.

So what's the fix? Actually, there are several. The most straightforward one is to validate the pointer before transmutation. If the pointer is null or misaligned, the system should refuse to execute it and fail with an explicit error, or at least a panic that carries a diagnostic message. This would replace the segmentation fault with a clear explanation of what went wrong. Alternatively, the system could require signed metadata for the loaded functions, ensuring that only trusted code paths are executed. This would effectively sandbox the reload system and dramatically reduce the attack surface. We could also adopt the pattern seen in hot-reloading plugins from Unity, where plugin registration is explicit and compile-time-checked. This would mean that hot reload targets would need to register their function exports explicitly, allowing the compiler to generate safe entry points. This way, any change to the application logic would require an explicit recompile of the plugin manifest, and all the address resolution could be validated against a known set of safe signatures.
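The explicit-registration idea can be sketched in a few lines of standalone Rust. This is an illustration under stated assumptions, not the Dioxus hot reload code: `RootFn` stands in for `fn() -> Element` so the snippet compiles without Dioxus, and `EntryPoints`, `register`, `resolve`, and `demo_root` are hypothetical names. A real hot reload loads addresses from a freshly patched binary, so the registry would have to be rebuilt from that binary's own manifest; the sketch only shows the "check before you transmute" shape.

```rust
use std::collections::HashSet;

/// Stand-in for `fn() -> Element`, so the sketch compiles without Dioxus.
pub type RootFn = fn() -> u32;

/// Demo entry point used only to exercise the registry.
pub fn demo_root() -> u32 {
    7
}

#[derive(Default)]
pub struct EntryPoints {
    known: HashSet<usize>,
}

impl EntryPoints {
    /// Record the address of a function that really has the `RootFn` type.
    pub fn register(&mut self, f: RootFn) {
        self.known.insert(f as *const () as usize);
    }

    /// Turn a raw address back into a callable function, but only if it was
    /// registered earlier. Anything else is reported as an error, not called.
    pub fn resolve(&self, addr: *const ()) -> Result<RootFn, String> {
        if addr.is_null() {
            return Err("refusing to call a null function pointer".to_string());
        }
        if !self.known.contains(&(addr as usize)) {
            return Err(format!("address {addr:p} is not a registered entry point"));
        }
        // SAFETY: `addr` is non-null and equals the address of a function
        // that was registered with the exact `RootFn` type.
        Ok(unsafe { std::mem::transmute::<*const (), RootFn>(addr) })
    }
}
```

A bogus address now produces an `Err` with a readable message instead of a segfault, and the single `unsafe` block has a safety comment that the surrounding code actually enforces.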

In response to this report, the Dioxus maintainers asserted that since this only affects development, the priority of fixing it is low. While I understand their reasoning, I respectfully disagree. Development-time tooling is often the first contact point for new users and teams evaluating a technology. If a developer encounters a crash during hot reload because of malformed function pointers, it doesn't matter that it's a dev-only issue; their perception of Dioxus as a reliable toolchain is already damaged. Worse, in large enterprise environments where development is done at scale across multiple teams and sandboxes, a rogue reload bug can bring down a staging environment or corrupt a shared cache. It's not just about safety; it's about trust.

What makes this particularly ironic is that Rust's strongest selling point, its promise of memory safety and crash resistance, is completely nullified by poor usage of unsafe. We don't get to brag about "fearless concurrency" and "safe systems programming" if we turn around and write code that would make a C compiler blush. Every unsafe block is a loaded gun. And it's the framework's job, not the developer's, to make sure that trigger isn't pulled without proper safeguards in place.

In short, this vulnerability is demonstrative of a deeper issue: vibe coding is creeping into the core libraries of a safety-first ecosystem. When we cut corners for the sake of speed, especially in unsafe contexts, we end up with fragile foundations that betray everything Rust stands for. That's not just poor engineering. That's a breach of trust with the entire community.

SSRF in CLI SSG Loop

When you have a build tool like the Dioxus CLI, which includes server-side rendering (SSR) and static site generation (SSG) capabilities, you're already operating in a semi-privileged environment. Even if "dx serve" is labeled as a development tool, the implications of introducing unvalidated input routes into a loop that performs HTTP requests should raise significant red flags, especially considering the increasing number of teams using these tools in CI/CD pipelines or for local staging environments. As reported in this issue, by blindly fetching each route using a formatted HTTP GET request, without sanitizing or validating these paths, you introduce a clear vector for Server-Side Request Forgery (SSRF). SSRF, as widely recognized in the OWASP Top 10 list of security vulnerabilities, allows attackers to trick servers into making requests to unintended destinations, like internal systems, cloud metadata services (e.g., AWS' 169.254.169.254), or even other vulnerable services that trust internal traffic.

To quote OWASP directly: "SSRF flaws occur whenever a web application is fetching a remote resource without validating the user-supplied URL." (OWASP SSRF). Dioxus's current CLI behavior precisely fits that description. And while the maintainers may have dismissed this by saying "it's just a dev tool", I challenge the logic behind accepting insecure defaults, even in dev-mode utilities. A dev tool used today might be embedded in tomorrow's automation pipeline. We all know that lines between "development" and "production" blur quickly in modern workflows.

Moreover, an attacker who can poison the route list, via environment variables or other misconfigurations, can easily exploit the SSRF vector. Even if it's "just development", the potential risk of leaking internal system data or pivoting into more sensitive areas should warrant at least a minimum validation layer. We're not talking about rewriting the tool, just sanitizing route strings before they're passed into the request loop. Ensure routes are relative paths, prevent http:// or https:// prefixed routes, and error out when absolute domains are encountered. These are trivial patches that could stop an entire class of attack vectors dead in their tracks.
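Those three rules fit in a small validation function. The sketch below is standalone Rust, not a patch against the Dioxus CLI: `validate_route`, `prerender_url`, and `RouteError` are hypothetical names, and the assumption that the loop builds its URL by appending the route to a local base address is mine.

```rust
#[derive(Debug, PartialEq)]
pub enum RouteError {
    NotRelative,
    ContainsScheme,
    IllegalCharacter,
}

/// Accept only app-relative paths such as "/blog/first-post".
pub fn validate_route(route: &str) -> Result<&str, RouteError> {
    // Must start with exactly one '/'. This also blocks "@evil.example/",
    // which would turn "http://127.0.0.1:8080" + route into a URL whose
    // host is evil.example (the part before '@' becomes userinfo).
    if !route.starts_with('/') || route.starts_with("//") {
        return Err(RouteError::NotRelative);
    }
    // Conservative: reject any embedded scheme, even inside a query string.
    if route.contains("://") {
        return Err(RouteError::ContainsScheme);
    }
    if route
        .chars()
        .any(|c| c.is_control() || c.is_whitespace() || c == '\\')
    {
        return Err(RouteError::IllegalCharacter);
    }
    Ok(route)
}

/// Build the URL a prerender loop would fetch. `base` is an assumed local
/// address such as "http://127.0.0.1:8080".
pub fn prerender_url(base: &str, route: &str) -> Result<String, RouteError> {
    let route = validate_route(route)?;
    Ok(format!("{base}{route}"))
}
```

Failing loudly on a bad route is the important design choice here: a poisoned route list should stop the build, not be silently skipped or fetched.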

It's ironic that Dioxus, a Rust framework meant to leverage Rust's strengths like type safety and security, is allowing basic security hygiene issues to go unchecked. Developers turn to Rust because they want control and reliability. If your toolchain weakens the very foundation the language was built on, then you're not shipping Rust. You're shipping Vibe Rust™, a watered-down promise of what secure systems programming could be.

Reflection

Let's be crystal clear here: this isn't about ego, picking fights, or any personal campaign against a project. This is a much-needed wake-up call that echoes across the entire open-source Rust ecosystem. When I reported these issues, I did so not to nitpick or throw shade, but because I genuinely care about the quality of the tools we are all using. I've written countless lines of Rust over the past years, read through core Rust source, and followed the design philosophies of Rust since its early stable days. I've watched as people celebrated Rust's memory safety, but ignored the growing cracks in userland safety, especially in web frameworks and glue code.

Security is not an afterthought, nor should it ever be one. When you say, "developers are responsible for using the tools safely", you're technically correct, but morally negligent. Let me ask this: Would you trust a car company that says, "Oh, the brakes work if the driver applies them correctly, but sometimes they don't respond unless you write your own brake controller"? That's exactly what some libraries and frameworks are saying to developers right now. Shifting security responsibilities to the end user, especially when such issues can be proactively mitigated in the framework layer, is irresponsible engineering.

We're not talking about exotic attack vectors. We're talking about Open Redirects, CSRF, SSRF, and segfaults. These are day-one bugs. These are the types of vulnerabilities that show up in bug bounty reports and make headlines when they go unpatched in production apps. It is deeply concerning when the response to these reports is outright dismissal, immediate issue closure, or marking them as "spam" instead of engaging in a technical discussion. That's not just poor communication, it's bad governance.

I fully understand open source is a labor of love. Maintainers often work on nights and weekends, unpaid and underappreciated. But part of being an open source maintainer is knowing how to respond to critical input. Even if you don't agree, the least you can do is not treat the messenger like the enemy. Otherwise, the entire idea of open collaboration collapses into a gatekept monoculture where critique is seen as attack and security is seen as "someone else's job."

Final Thoughts

Let's revisit the big picture. Vibe coding isn't a term coined to insult people. It's a shorthand for describing a pattern where tools are used intuitively without foundational understanding. It's what happens when libraries become too easy to use at the expense of correctness. Developers start composing applications by copying examples, skimming docs, and leaning on autocomplete. And while that's okay for prototyping, it's absolutely unacceptable in production.

The Rust community has often prided itself on going slow, doing things right, and shipping quality code. But if we don't hold our frameworks to the same standard, we're just gaslighting ourselves. Security doesn't just live in the compiler. It lives in APIs. It lives in interfaces. It lives in assumptions baked into design. That's why we need ergonomic and secure frameworks. That's why I wrote this post.

If you build systems that encourage cargo-culting, where people use features without understanding their impact, you are on the hook for making those features safer. If you dismiss every security report with "you're using it wrong", then you've built an unsafe abstraction. Periodt.

Dioxus has tremendous potential. The community is growing. The DX is excellent. But none of that matters if the foundation is riddled with avoidable pitfalls. My hope is that this post helps others understand that being fast and fun doesn't excuse being careless. Rust deserves better. Developers deserve better. Users deserve better.

If you're a Dioxus user, take a second look at how you're handling routing, server functions, and any unsafe FFI magic. If you're using Dioxus in a production deployment, even "just testing", audit your setup. Is there an open redirect? Are you issuing GETs to dynamic routes? Do your server functions validate inputs and defend against CSRF?

If you're a maintainer, of Dioxus or any Rust project, please consider the following:

- Don't treat security reports like spam. Take 5-10 minutes to validate the concern.

- Use type systems to prevent misuse, especially in routing and I/O APIs.

- Provide optional guardrails (macros, features, traits) for common security needs.

- Document security assumptions clearly. Make it hard to use things incorrectly.

- When in doubt, err on the side of safety. Rust taught us that. Live it.

Despite the critiques, I want to end this on a positive note. Dioxus is a brilliant project. The work being done to unify desktop, mobile, and web apps in a type-safe Rust UI layer is nothing short of visionary. The fact that I can run the same app on WASM and native platforms with minimal code changes is incredible. Server functions are powerful. The SSG capabilities are ahead of their time. And Open SASS being built entirely on top of it proves that Dioxus isn't just hype, it's usable and scalable.

But a great tool is not immune from critique. It's through feedback, yes, sometimes harsh, that tools evolve from "good enough" to "industry standard". I sincerely hope this blog is read in that spirit.

So, thank you to the Dioxus team. Thank you for the hours of work. Thank you for the documentation, the examples, the bug fixes, all of which I am actively contributing to and improving. Just please, take security as seriously as you take developer experience. Because in the end, DX without security is just fast failure.

At Open SASS, we're working tirelessly on making Rust web development extremely easy for everyone.

If you made it this far, it would be nice if you could join us on Discord.

Till next time, keep building, but build responsibly 👋!

Top comments (17)

"Vibe coders" should just hack on fun hobby/weekend projects - employers or companies who hire "vibe coders" to work on mission critical projects are out of their mind :)

True, but some companies might outsource their staff and hire the cheapest options available, thinking that when paired with LLMs, they can function as senior professionals. So, always hire based on actual experience.

Yeah you get what you pay for :-)

Of course it's fine to use AI, but anyone calling themselves a "web developer" should at least have a solid understanding of how HTTP works, plus HTML/CSS/JS, plus a grasp of security fundamentals ... and that's the bare minimum, the bar should of course be a lot higher than that for more complex projects - otherwise how can our 'vibe coder' possibly know if their "AI" is generating anything that makes sense, or meets minimal quality standards? ;-)

But of course I'm preaching to the converted ;-)

Absolutely! AI can help, but it's only as good as the dev using/reviewing it. Without that baseline knowledge, you're just gambling with product reliability and security ;-)

Totally agree security should be the default, not an afterthought. How do you think frameworks could best help newer devs gain the right depth without just locking them out?

Frameworks can help newer developers by providing clear patterns and tools to build real-world projects, while also encouraging them to explore underlying concepts instead of relying solely on abstractions.

In case you're wondering, from my experience in the Rust ecosystem, here's the security tier list:

Leptos is the most type-safe framework I've ever seen/used 🗿.

In terms of developer experience:

Dioxus is the most developer friendly framework in the wild, offering a complete set of features, including CLI tooling, SSR, SSG, server functions, and cross-platform support. For ease of use and prototyping, I always start with Dioxus. I really like its features.

How do you know that these issues were introduced by AI and even more specifically by vibe coding?

Good question! The rise in security vulnerabilities in deployed applications correlates directly with the mainstream adoption of AI-assisted development, especially what's now called "vibe coding". While correlation doesn't automatically imply causation, the timing and scale of these issues are too aligned to ignore. Before 2022 or so, before AI coding tools became omnipresent, we simply did not see this volume or this type of critical security failure appearing at such a rapid rate across the software ecosystem.

"Vibe coding", rapid, intuition-based development with little to no traditional architecture, review, or testing rigor, is inherently at odds with the foundations of software engineering: precision, responsibility, reliability, and security, as mentioned in this post. Scaling code generation by the hundreds or thousands of lines through AI doesn't scale quality, it scales risk. You can't mass-produce reliability. You can't shortcut deep understanding. AI-generated code still hallucinates, makes assumptions, and often lacks contextual awareness of the larger system architecture or evolving threat models. That introduces attack surface, every single time.

Even the best prompt engineering can't prevent these hallucinations completely. And in security, a single oversight, a misplaced validation, an overly permissive config, a missed auth check, is all it takes. The more you flood the codebase with AI-generated snippets, the more surface area you have to secure, and the more likely it is something slips through.

Let's be clear: software engineering is one of the hardest disciplines to fully automate. If we could reliably, securely automate it end-to-end, it would mean nearly all knowledge work could be automated, and we're just not there yet, no matter what the marketing says. That means we're in a transitional era, where AI can assist, but if used recklessly (as it often is under "vibe coding" culture), it degrades the integrity of the product.

So yeah, AI, and especially the culture of unstructured, rapid AI-assisted coding, is absolutely a driving force behind the rise of insecure applications. And this is exactly the moment to double down on learning cybersecurity, because behind every vibe coded app is a job opportunity waiting to be filled.

Good luck 🍀!

Yep, I guess your AI-generated response is a good example, but a poor way to demonstrate correlation between the two. We have decades of examples of engineers producing similar and worse vulnerabilities despite the foundations you mentioned. I personally would take your accusations with caution, especially given your low credibility.

Looks objectively human to me, gg!

I am still working on a white paper to demonstrate the correlation between the two. Expected release: this summer, unless it escapes first.

But decades of vulnerabilities reflect systemic issues, not the work of well-trained engineers who follow secure design principles from day 0. When precise engineering practices are applied, critical flaws are rare, proving that discipline and training make a measurable difference.

I am scared 😱!

Bruh, who even are you? Talking about credibility with zero facts and/or logic, try again.

You can't reason with "vibes", just hack something that uses this framework. Will be way more effective.

That's right! You can't reason through "vibes". Vibes in coding are early signals of deeper architectural flaws, developer friction, or systemic weaknesses. Seasoned engineers know that when something looks off, it often is, even if the exploit hasn't surfaced yet. Ignoring those signals just because they aren't immediately actionable is like ignoring smoke because you don't see fire. Vibes are what often guide us to problems before they become critical.

And yes, if you're about results, just hack something using the framework. That absolutely proves a point. Being a locksmith can make you rich, especially when responsible disclosure gets you ghosted or dismissed. Many vendors deny issues, delay fixes, or offer no reward. So ethically reporting a security vulnerability? Often useless. Meanwhile, those same vulnerabilities can quietly bankroll black-hat folks. It's not legal or recommended, but it works. Proof wins the argument, but vibes start the investigation. Ignore them at your own risk 🤞.

This kind of conversation is exactly what the ecosystem needs...

💯

Vibe coding works if you're already a software engineer. It makes development significantly faster. But it can backfire if your fundamentals as a software engineer are not strong.

I personally built my project Kattalog with vibe coding initially. Around 50% of the code is generated by AI, with careful code reviews. I shared the story here:

I Built My 1st AI SaaS, It's Not as Hard as You Think

Syakir ・ May 27

"Vibe coders" should just hack on fun hobby/weekend projects - employers or companies who hire "vibe coders" to work on mission critical projects are out of their mind :)

This comment isn't just peak AI slop, it's the Mount Everest of laziness. You didn't even bother using your brain or AI. Just straight-up CTRL+C'd someone else's comment (@leob). Inspirational stuff, really. Bravo!