Let’s build an application that processes audio uploads, such as podcast snippets, meeting recordings, or voice notes, by automatically transcribing the audio to text, summarizing the main points, analyzing sentiment and topics, and delivering the results through an API.

The main parts of this article:

1- 💡 Tech Stack (AWS Services)

2- 🎯 Technical Part (Code)

3- 📝 Conclusion

💡 Tech Stack (AWS Services)

We are going to use several AWS services: AWS Lambda, Amazon S3, Amazon Transcribe, Amazon Bedrock, and Amazon Comprehend.

Amazon S3: The bucket that holds our audio files (.mp3 or .wav) and the generated transcripts.

AWS Lambda: Takes an uploaded .mp3 or .wav file, calls Amazon Transcribe to convert the audio to text, uses Claude (via Bedrock) to summarize it and highlight key insights, uses Amazon Comprehend to extract the sentiment, and finally returns everything as JSON.

Amazon Transcribe: Converts the speech in the audio file to text.

Amazon Bedrock: Gives us access to Claude, which summarizes the transcript and highlights key insights, action items, and the topics discussed.

Amazon Comprehend: Detects the overall sentiment of the transcript.
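The Lambda function in this article calls only Comprehend's DetectSentiment. If you also want Comprehend to suggest topics, one option (an addition, not part of the build below) is its synchronous DetectKeyPhrases API. Here is a minimal sketch, assuming the client is `boto3.client('comprehend')`; it takes the client as a parameter so it can be tried without AWS. `extract_topics`, `top_n`, and `min_score` are hypothetical names and defaults, and the 5,000-byte trim is a conservative assumption borrowed from the DetectSentiment limit.

```python
from typing import Any, List


def extract_topics(comprehend_client: Any, text: str, top_n: int = 5,
                   min_score: float = 0.9) -> List[str]:
    """Return up to top_n distinct key phrases, highest confidence first.

    Hypothetical helper: comprehend_client is assumed to be boto3.client('comprehend').
    """
    # Conservative cap (assumption): trim to 5,000 bytes without splitting a character.
    trimmed = text.encode("utf-8")[:5000].decode("utf-8", errors="ignore")
    response = comprehend_client.detect_key_phrases(Text=trimmed, LanguageCode="en")

    # Keep only confident phrases, best score first.
    phrases = sorted(
        (p for p in response["KeyPhrases"] if p["Score"] >= min_score),
        key=lambda p: p["Score"],
        reverse=True,
    )

    # De-duplicate case-insensitively and stop once top_n topics are collected.
    topics: List[str] = []
    seen = set()
    for phrase in phrases:
        normalized = phrase["Text"].strip().lower()
        if normalized and normalized not in seen:
            seen.add(normalized)
            topics.append(phrase["Text"].strip())
        if len(topics) == top_n:
            break
    return topics
```

If you use it, the Lambda role also needs the `comprehend:DetectKeyPhrases` permission.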

🎯 Technical Part (Code)

```python
import boto3
import json
import time
from urllib.parse import urlparse, unquote_plus

transcribe = boto3.client('transcribe')
s3 = boto3.client('s3')


def lambda_handler(event, context):
    print("Event:", json.dumps(event))

    # Bucket and object key of the uploaded audio file (keys arrive URL-encoded)
    bucket = event['Records'][0]['s3']['bucket']['name']
    audio_key = unquote_plus(event['Records'][0]['s3']['object']['key'])

    job_name = f"transcription-{int(time.time())}"
    file_uri = f"s3://{bucket}/{audio_key}"

    # Start an asynchronous transcription job; the transcript JSON goes to the same bucket
    transcribe.start_transcription_job(
        TranscriptionJobName=job_name,
        Media={'MediaFileUri': file_uri},
        MediaFormat=audio_key.split('.')[-1].lower(),  # Transcribe expects lowercase, e.g. 'mp3'
        LanguageCode='en-US',
        OutputBucketName=bucket
    )

    # Poll every 5 seconds until the job completes or fails
    while True:
        status = transcribe.get_transcription_job(TranscriptionJobName=job_name)
        if status['TranscriptionJob']['TranscriptionJobStatus'] in ['COMPLETED', 'FAILED']:
            break
        time.sleep(5)

    if status['TranscriptionJob']['TranscriptionJobStatus'] == 'FAILED':
        reason = status['TranscriptionJob'].get('FailureReason', 'unknown reason')
        raise Exception(f"Transcription failed: {reason}")

    # TranscriptFileUri is a path-style URL: https://s3.<region>.amazonaws.com/<bucket>/<key>
    transcript_uri = status['TranscriptionJob']['Transcript']['TranscriptFileUri']
    parsed = urlparse(transcript_uri)
    transcript_bucket = parsed.path.split('/')[1]
    transcript_key = '/'.join(parsed.path.split('/')[2:])

    # Download the transcript JSON and pull out the full text
    obj = s3.get_object(Bucket=transcript_bucket, Key=transcript_key)
    transcript_data = json.loads(obj['Body'].read())
    transcript_text = transcript_data['results']['transcripts'][0]['transcript']

    print("--> Transcribed Text:\n", transcript_text)
```

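start_transcription_job only starts an asynchronous job, so the loop polls get_transcription_job every 5 seconds until the status is COMPLETED or FAILED. When the job completes, Amazon Transcribe saves the transcript as a JSON file in the bucket passed as OutputBucketName, and the function downloads that file and reads the text from results.transcripts[0].transcript.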
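Because the handler waits for the job inside Lambda, a long recording can outlast the function's timeout. Here is a minimal sketch of the same polling loop with a deadline check, assuming you pass in `context.get_remaining_time_in_millis` (a real method on the Lambda context object). `wait_for_job`, `safety_margin_ms`, and the injectable `sleep` are hypothetical names chosen for illustration and testing.

```python
import time
from typing import Callable


def wait_for_job(get_status: Callable[[], str],
                 remaining_ms: Callable[[], int],
                 poll_seconds: float = 5.0,
                 safety_margin_ms: int = 10_000,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the job reaches a terminal state or Lambda time is nearly up.

    Hypothetical helper: get_status is assumed to return TranscriptionJobStatus,
    remaining_ms is typically context.get_remaining_time_in_millis.
    """
    while True:
        status = get_status()
        if status in ("COMPLETED", "FAILED"):
            return status
        # Give up before the next poll would run past the margin kept for later steps.
        if remaining_ms() < safety_margin_ms + poll_seconds * 1000:
            raise TimeoutError("Transcription job still running; Lambda time is nearly up")
        sleep(poll_seconds)
```

In the handler it could be called as `wait_for_job(lambda: transcribe.get_transcription_job(TranscriptionJobName=job_name)['TranscriptionJob']['TranscriptionJobStatus'], context.get_remaining_time_in_millis)`, which turns a silent Lambda timeout into a clear error.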

The transcript file is placed in the same S3 bucket as the audio and is named after the job (for example, transcription-<timestamp>.json).
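Writing the transcript into the same bucket has a side effect: if the S3 trigger fires for every new object, the transcript file invokes the function again. Limiting the trigger to the .mp3 and .wav suffixes in the bucket's event notification settings avoids this, and a check in the handler is a second safeguard. Here is a minimal sketch, assuming a deliberately small allowlist of formats that Amazon Transcribe accepts; `media_format_for` and `SUPPORTED_FORMATS` are hypothetical names.

```python
import os
from typing import Optional

# Hypothetical allowlist: a small subset of the MediaFormat values Transcribe accepts.
SUPPORTED_FORMATS = {"mp3", "wav", "flac", "ogg"}


def media_format_for(key: str) -> Optional[str]:
    """Return the Transcribe MediaFormat for an S3 key, or None to skip the object."""
    # Take the last extension and normalize case, e.g. "Episode 1.MP3" -> "mp3".
    extension = os.path.splitext(key)[1].lstrip(".").lower()
    return extension if extension in SUPPORTED_FORMATS else None
```

In the handler, `media_format_for(audio_key)` could replace the extension-splitting expression for MediaFormat, returning early when it gives None (for example, for the transcript .json file).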

Perfect! We've successfully converted our .mp3 files to text. Now let's take it further by adding Amazon Comprehend for sentiment analysis and Amazon Bedrock for intelligent summarization, including the topics discussed.
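One detail before the code: Comprehend's DetectSentiment limits its input to 5 KB of UTF-8 text, while slicing a Python string with `[:5000]` counts characters, not bytes. That works for plain English, but accented letters or non-Latin scripts take more than one byte each and can push the request over the limit. A minimal byte-safe alternative (`truncate_utf8` is a hypothetical helper):

```python
def truncate_utf8(text: str, max_bytes: int = 5000) -> str:
    """Trim text so its UTF-8 encoding fits in max_bytes without splitting a character."""
    encoded = text.encode("utf-8")
    if len(encoded) <= max_bytes:
        return text
    # errors="ignore" drops a multi-byte character that the cut left incomplete.
    return encoded[:max_bytes].decode("utf-8", errors="ignore")
```

It would be used as `comprehend.detect_sentiment(Text=truncate_utf8(transcript_text), LanguageCode='en')`.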

```python
# Module level, next to the existing transcribe and s3 clients
comprehend = boto3.client('comprehend')
bedrock = boto3.client('bedrock-runtime')

BEDROCK_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def summarize_with_claude(text):
    prompt = (
        "Summarize this meeting or speech in clear bullet points, "
        "highlight any action items or topics discussed:\n\n" + text[:4000]
    )

    # Claude 3 models on Bedrock accept only the Messages API request format
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 500,
        "temperature": 0.7,
        "top_k": 250,
        "top_p": 0.9,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ]
    }

    response = bedrock.invoke_model(
        modelId=BEDROCK_MODEL_ID,
        body=json.dumps(body),
        contentType="application/json",
        accept="application/json"
    )

    response_body = json.loads(response['body'].read())
    # The reply is a list of content blocks; the summary is in the first text block
    content = response_body.get("content", [])
    return content[0]["text"] if content else "No summary generated."


# Inside lambda_handler, right after the transcript text is read:
sentiment = comprehend.detect_sentiment(Text=transcript_text[:5000], LanguageCode='en')
summary = summarize_with_claude(transcript_text)
```
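With Claude 3 models, the Bedrock Messages API returns the reply under `content` as a list of content blocks (for example `{"type": "text", "text": "..."}`) rather than as a single `completion` string. Here is a small, slightly defensive helper that joins every text block and falls back to a default, assuming that response shape (`summary_text` is a hypothetical name):

```python
from typing import Any, Dict


def summary_text(response_body: Dict[str, Any],
                 default: str = "No summary generated.") -> str:
    """Join the text blocks of a Claude Messages API response body."""
    blocks = response_body.get("content") or []
    # Ignore non-text blocks and concatenate the text ones in order.
    texts = [block.get("text", "") for block in blocks if block.get("type") == "text"]
    joined = "".join(texts).strip()
    return joined or default
```

Inside `summarize_with_claude` it would be called as `summary_text(json.loads(response['body'].read()))`.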

Also, make sure your AWS account has access to the Claude model you use; you can check this in the Amazon Bedrock console.

To test the function, use the following JSON as the test event (it mimics the S3 upload notification):

```json
{
  "Records": [
    {
      "s3": {
        "bucket": {
          "name": "<YOUR_S3_BUCKET>"
        },
        "object": {
          "key": "AD-CONAF-2019BC-MID-JPWiser-v2.mp3"
        }
      }
    }
  ]
}
```
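The handler reads only `Records[0]` and decodes the key with `unquote_plus`, because S3 URL-encodes object keys in event notifications (a space arrives as `+`). Here is a minimal sketch that handles every record in the list the same way and also lets you check a test event locally; `s3_objects_from_event` is a hypothetical helper.

```python
from typing import Any, Dict, List, Tuple
from urllib.parse import unquote_plus


def s3_objects_from_event(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (bucket, key) for every record in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in notifications, e.g. "team+sync.mp3" -> "team sync.mp3".
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects
```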

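Also make sure the Lambda execution role has the required permissions, and increase the function's timeout: the default is 3 seconds, but this function waits for the transcription job to finish (the maximum timeout is 15 minutes).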

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:PutObject",
        "transcribe:StartTranscriptionJob",
        "transcribe:GetTranscriptionJob",
        "comprehend:DetectSentiment",
        "bedrock:InvokeModel"
      ],
      "Resource": "*"
    }
  ]
}
```
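The policy above uses `"Resource": "*"` for every action. Here is a minimal sketch of a tighter variant that limits the S3 actions to objects in your bucket while keeping the other actions as in the original. `build_policy` and `policy_document` are hypothetical helpers, and CloudWatch logging still needs the AWS managed policy AWSLambdaBasicExecutionRole (or an equivalent) attached to the role.

```python
import json
from typing import Any, Dict


def build_policy(bucket: str) -> Dict[str, Any]:
    """Build an IAM policy whose S3 access is scoped to one bucket (hypothetical helper)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read the audio and transcript, and write the transcript output.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {
                # Left as "*" exactly as in the original policy.
                "Effect": "Allow",
                "Action": [
                    "transcribe:StartTranscriptionJob",
                    "transcribe:GetTranscriptionJob",
                    "comprehend:DetectSentiment",
                    "bedrock:InvokeModel",
                ],
                "Resource": "*",
            },
        ],
    }


def policy_document(bucket: str) -> str:
    """Serialize the policy as JSON, ready to paste into the IAM console."""
    return json.dumps(build_policy(bucket), indent=2)
```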

Now let's test the code: open the Test tab of the Lambda function in the AWS console, paste the test event JSON from above, and run it.

Finally, here is the full code for our Lambda function:

```python
import boto3
import json
import time
from urllib.parse import urlparse, unquote_plus

transcribe = boto3.client('transcribe')
comprehend = boto3.client('comprehend')
bedrock = boto3.client('bedrock-runtime')
s3 = boto3.client('s3')

# EU cross-region inference profile; use a model ID available in your region
BEDROCK_MODEL_ID = "eu.anthropic.claude-3-7-sonnet-20250219-v1:0"


def lambda_handler(event, context):
    print("Event:", json.dumps(event))

    # Bucket and (URL-decoded) key of the uploaded audio file
    bucket = event['Records'][0]['s3']['bucket']['name']
    audio_key = unquote_plus(event['Records'][0]['s3']['object']['key'])

    job_name = f"transcription-{int(time.time())}"
    file_uri = f"s3://{bucket}/{audio_key}"

    # 1. Start the transcription job; the transcript JSON goes to the same bucket
    transcribe.start_transcription_job(
        TranscriptionJobName=job_name,
        Media={'MediaFileUri': file_uri},
        MediaFormat=audio_key.split('.')[-1].lower(),
        LanguageCode='en-US',
        OutputBucketName=bucket
    )

    # 2. Poll every 5 seconds until the job completes or fails
    while True:
        status = transcribe.get_transcription_job(TranscriptionJobName=job_name)
        if status['TranscriptionJob']['TranscriptionJobStatus'] in ['COMPLETED', 'FAILED']:
            break
        time.sleep(5)

    if status['TranscriptionJob']['TranscriptionJobStatus'] == 'FAILED':
        reason = status['TranscriptionJob'].get('FailureReason', 'unknown reason')
        raise Exception(f"Transcription failed: {reason}")

    # 3. Download the transcript JSON using the path-style TranscriptFileUri
    transcript_uri = status['TranscriptionJob']['Transcript']['TranscriptFileUri']
    parsed = urlparse(transcript_uri)
    transcript_bucket = parsed.path.split('/')[1]
    transcript_key = '/'.join(parsed.path.split('/')[2:])

    obj = s3.get_object(Bucket=transcript_bucket, Key=transcript_key)
    transcript_data = json.loads(obj['Body'].read())
    transcript_text = transcript_data['results']['transcripts'][0]['transcript']

    print("--> Transcribed Text:\n", transcript_text)

    # 4. Sentiment (DetectSentiment accepts up to 5 KB of text) and Claude summary
    sentiment = comprehend.detect_sentiment(Text=transcript_text[:5000], LanguageCode='en')
    summary = summarize_with_claude(transcript_text)

    return {
        "statusCode": 200,
        "body": {
            "summary": summary,
            "sentiment": sentiment,
            "transcription": transcript_text[:500],
        }
    }


def summarize_with_claude(text):
    # Messages API request body for Claude models on Bedrock
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 200,
        "top_k": 250,
        "stop_sequences": [],
        "temperature": 1,
        "top_p": 0.999,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": (
                            "Summarize this meeting or speech in clear bullet points, "
                            "highlight any action items or topics discussed:\n\n"
                            + text[:4000]
                        )
                    }
                ]
            }
        ]
    }

    response = bedrock.invoke_model(
        modelId=BEDROCK_MODEL_ID,
        body=json.dumps(body),
        contentType="application/json",
        accept="application/json",
    )

    response_body = json.loads(response["body"].read())
    # "content" is a list of blocks such as [{"type": "text", "text": "..."}]
    content = response_body.get("content", [])
    return content[0]["text"] if content else "No summary generated."
```

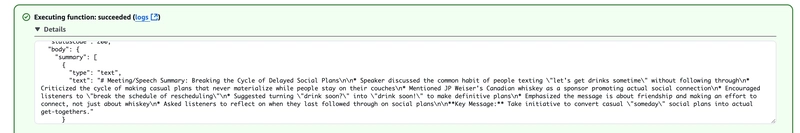

Perfect! The output shows the sentiment analysis from Amazon Comprehend and the bullet-point summary of our audio file from Claude.
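The function returns `body` as a Python dict, which is fine for the Lambda console test. If you expose it through Amazon API Gateway with a Lambda proxy integration to deliver the results as an API, the integration expects `body` to be a string. Here is a minimal sketch of a wrapper, assuming a proxy integration (`api_response` is a hypothetical helper):

```python
import json
from typing import Any, Dict


def api_response(payload: Dict[str, Any], status_code: int = 200) -> Dict[str, Any]:
    """Wrap a result dict in the shape an API Gateway Lambda proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        # body must be a string; default=str covers values json cannot encode natively.
        "body": json.dumps(payload, default=str),
    }
```

The handler's final `return` would then become `return api_response({...})` with the same summary, sentiment, and transcription fields.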

📝 Conclusion

Combining multiple AWS services lets you build powerful systems with very little code. In this example, a handful of managed services (S3, Transcribe, Comprehend, Bedrock, and Lambda) were enough to build a working voice-to-insights pipeline, an approach with real-world uses across many industries. I hope you found this article helpful!

Happy coding 👨🏻‍💻

💡 Enjoyed this? Let’s connect and geek out some more on LinkedIn.
