In March we saw 2 major releases of image generation tools (from Google and OpenAI) that are very different from what we're used to:

- Continuity - you can now keep working on a generated picture and iterate (e.g., it's possible to have the same character in multiple generated pics).

- Edits with prompts - it's nothing like the previous in-painting workarounds: you can now ask a model to re-color an object or modify some details in the picture.

- Much better text/font rendering - no more hallucinated text in non-existent languages.

- Instruction following - remember the "no elephant in the picture" meme? It's now fixed :)

Previously image gen was the domain of diffusion models. That's how Midjourney, Flux, DALL-E, and Stable Diffusion work. With them every prompt is a one-off generation, i.e. there's no concept of the dialog or context that we are used to in chatbots. Chatbots that produced images relied on external diffusion models to create them. Chatbots/LLMs themselves were dominated by decoder-only autoregressive transformer models.

To recap, since Gen AI took off in 2021-2022 we've seen 2 different tech concepts, each with its own distinct area:

- Transformer models dominated LLMs. They are based on next token prediction, producing a sequence of tokens one at a time.

- Diffusion models reigned over image gen. They use noising and denoising and produce all pixels at once.
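To make the contrast between the two bullets concrete, here is a minimal toy sketch in Python. Nothing in it is a real model: `toy_next_token` and `toy_denoise` are hypothetical stand-ins invented for illustration, and the "image" is just a short list of numbers. The only point is the shape of the two loops: one appends a token per step, the other refines every value on every step.

```python
import random

VOCAB = ["a", "cat", "sat", "on", "the", "mat"]


def toy_next_token(tokens_so_far):
    # Hypothetical stand-in for an LLM: picks the next word by position only.
    return VOCAB[len(tokens_so_far) % len(VOCAB)]


def autoregressive_generate(next_token, length):
    # One model call per token; each call sees everything generated so far.
    tokens = []
    for _ in range(length):
        tokens.append(next_token(tokens))
    return tokens


def toy_denoise(values, target=0.5):
    # Hypothetical stand-in for a denoiser: moves every value halfway
    # toward a fixed target (a real model would predict the noise instead).
    return [v + 0.5 * (target - v) for v in values]


def diffusion_generate(denoise, size, steps, seed=0):
    # Start from pure noise and refine all values at once, step after step.
    rng = random.Random(seed)
    values = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for _ in range(steps):
        values = denoise(values)
    return values


print(autoregressive_generate(toy_next_token, 4))
pixels = diffusion_generate(toy_denoise, size=8, steps=20)
print([round(p, 3) for p in pixels])
```

Note how the cost scales differently in this sketch: the autoregressive loop needs one call per token, while the diffusion loop needs one call per denoising step no matter how many values it produces.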

With the releases of Gemini 2.0 Flash and 4o image gen, both companies say their models generate images natively. OpenAI has also stated their model is autoregressive. While not many details have been shared, it's reasonable to assume that the new techniques are built upon transformer models - we've seen before how they were repurposed for image inputs in so-called multi-modal LLMs.

If we go 1 month back, to February 2025, there were 2 noteworthy releases:

- Inception Labs introduced their Mercury Coder Small LLM, which demonstrates performance similar to GPT-4o Mini (i.e. how smart it is) at 10x the speed (generating 800 tokens/second).

- A team from China introduced LLaDA - an open source 8B model rivalling Llama 3 8B.

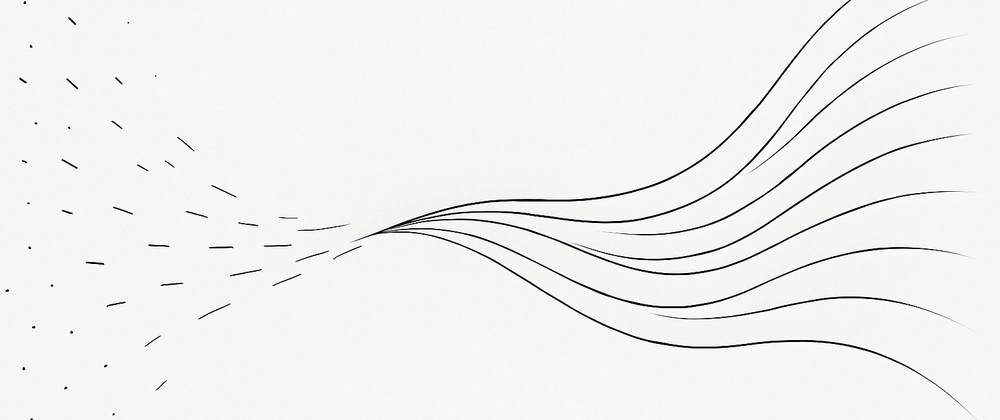

What's so special about these models? They apply diffusion - the concept previously used for image generation.

In other words, these 2 models have brought diffusion (apparently with some tweaks and clever modifications) into language modeling. And they did it while matching small LLMs' performance and promising new capabilities, such as much faster generation!
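For intuition on how diffusion can work on discrete tokens at all, here is a toy sketch of one flavor, masked diffusion, which is what the "mA" in LLaDA's name refers to. This is an assumption-heavy illustration, not the actual Mercury or LLaDA implementation: the "model" below cheats by already knowing the target sentence and assigns random confidences, and `masked_diffusion_generate`, `MASK`, and the per-step unmasking schedule are all made up for this example.

```python
import math
import random

MASK = "[MASK]"


def masked_diffusion_generate(target, steps, seed=0):
    # Start fully masked (the text analogue of pure noise).
    rng = random.Random(seed)
    tokens = [MASK] * len(target)
    per_step = math.ceil(len(target) / steps)
    history = [list(tokens)]
    for _ in range(steps):
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        if not masked:
            break
        # Toy "model": proposes a token for every masked slot in parallel,
        # each with a random confidence (a real model would compute both).
        confidence = {i: rng.random() for i in masked}
        # Commit only the most confident proposals; the rest stay masked.
        keep = sorted(masked, key=confidence.get, reverse=True)[:per_step]
        for i in keep:
            tokens[i] = target[i]
        history.append(list(tokens))
    return tokens, history


sentence = "diffusion models can write text too".split()
final, history = masked_diffusion_generate(sentence, steps=3)
for snapshot in history:
    print(" ".join(snapshot))
```

In this sketch the whole sentence appears in 3 passes rather than 6 token-by-token steps, and the words are filled in out of order, which hints at where the promised speed-up could come from.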

Now, in March, we saw the opposite move: LLMs stepping onto the turf of image gen. Let's see how this cross-over unfolds!

P.S.

I was very excited about diffusion models back in February - applying diffusion to LLMs and building chat models where transformers were uncontested seemed like something very new and unconventional. The other major architectural concept applied to chatbots was the Mamba architecture. Yet it doesn't seem that Mamba models have gained much traction compared to their transformer counterparts. Here's hoping for the best, and for attention to shift from transformers toward exploring newer LLM architectures.
