TL;DR: Created a version checker for GitHub Actions using AI that made me question my life choices, but at least now I know why semver exists and how to write AI prompts better.

Chaotic Beginnings: Riding the AI Wave

As a CTO, I'm always on the lookout for ways to make our technical stack less... well, let's just say "interesting" to maintain. You know how it is - you start with a clean, modern codebase, and before you know it, you're dealing with a digital archaeology project.

So when my buddy (let's call him Patrick and pretend that's not his real name) started raving about Cursor AI, I was skeptical. My previous fling with Copilot was about as successful as my attempt to learn the flute - lots of noise, not much music.

But Patrick was persistent. "Dude, you've got to try this," he said. "It's like having a coding buddy who actually understands what you're trying to do." I was puzzled, unsure which part of the story was more astonishing - AI evolving so rapidly, or the fact that Patrick had finally ended up with a buddy (even if an artificial one).

The tech world is going crazy over this "vibe coding" thing (thanks, Andrej Karpathy, for giving us yet another buzzword!). According to recent reports, Google says that over 25% of its new code is now written by AI, and there are startups operating entirely on AI-generated code. Whether you're excited about this or just trying to keep your job, it's worth knowing what these tools can and can't do (at least without an explicit prompt to do so!).

The Project: GitHub Actions Versioner (or How I Learned to Stop Worrying and Love Version Numbers)

I decided to put Cursor AI through its paces with a project that seemed simple at first: a tool to check if your GitHub Actions are up to date. "How hard can it be?" I thought. Famous last words, right?

Turns out, version comparison is one of those things that looks simple until you actually try to do it properly. Just ask the folks at semver.org - they've been at it for years! My journey through the commit history of this project reads like a tragicomedy of version comparison attempts.
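To show why "just compare the strings" falls apart, here's a minimal Python sketch of semver precedence. It is an illustration, not the tool's actual code: the names `semver_key` and `is_newer` are made up for this example, and the pattern is a simplified take on the rules at semver.org. Note that major-only tags like `v4`, which are very common for actions, are deliberately rejected here because they are not full semver.

```python
import re

# Simplified semver pattern: optional "v", MAJOR.MINOR.PATCH, optional -prerelease.
# Build metadata ("+...") is accepted but ignored when ordering versions.
SEMVER = re.compile(
    r"^v?(\d+)\.(\d+)\.(\d+)(?:-([0-9A-Za-z.-]+))?(?:\+[0-9A-Za-z.-]+)?$"
)


def semver_key(version):
    """Turn a version string into a tuple that sorts by semver precedence."""
    match = SEMVER.match(version)
    if match is None:
        raise ValueError(f"not a full semver: {version!r}")
    major, minor, patch, pre = match.groups()
    core = (int(major), int(minor), int(patch))
    if pre is None:
        # A release outranks all of its own pre-releases, hence the higher flag.
        return core + (1, ())
    # Numeric identifiers compare as numbers and rank below alphanumeric ones.
    ids = tuple(
        (0, int(part), "") if part.isdigit() else (1, 0, part)
        for part in pre.split(".")
    )
    return core + (0, ids)


def is_newer(candidate, current):
    """True if `candidate` has higher semver precedence than `current`."""
    return semver_key(candidate) > semver_key(current)


# Plain string comparison gets this wrong: "v1.10.0" sorts before "v1.9.0".
print("v1.10.0" > "v1.9.0")            # string comparison
print(is_newer("v1.10.0", "v1.9.0"))   # semver comparison
```

The tuple trick keeps the ordering rules in one place, so sorting a list of tags is just `sorted(tags, key=semver_key)` once the non-semver ones have been filtered out.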

Here's what the tool actually does (after several iterations of "oh, I didn't think of that" - which is exactly why I'm warning you once again: please don't even try to check the history of my PRs...):

Version Detective Work:

- Scans your workflow files (because who remembers where they put that one action?)

- Tries to make sense of whatever version format you've thrown at it

- Keeps track of where each version is used (because context matters)

The Great Version Comparison Circus:

- "Are these the same commit? Let me check..."

- "Is this a proper semver? Let me check..."

- "Is this a branch? Let me check..."

- "Is this a custom tag? Let me check..."

- "Did someone just use 'latest'? facepalm"

Reporting (Because We All Love Reports):

- ✅ "Looking good, champ!"

- ⚠️ "Time for an upgrade, buddy"

- ❌ "I have no idea what's going on here"

The result is GitHub Actions Versioner, a tool that's less about automatic updates and more about giving you the information you need to make smart decisions. Because let's face it, sometimes you want to know what's out there before you jump into an upgrade.
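For the curious, the "detective work" part can be sketched in a few lines of Python. This is a rough illustration under my own assumptions, not the tool's real implementation: the function names are hypothetical, and it uses a regular expression on `uses:` lines instead of a proper YAML parser, so unusual formatting may slip through.

```python
import re
from collections import defaultdict
from pathlib import Path

# Matches lines such as "- uses: actions/checkout@v4" or "uses: owner/repo/path@ref".
# Local actions ("./my-action") and docker:// references have no "@ref" and are skipped.
USES = re.compile(r"^\s*-?\s*uses:\s*['\"]?([^@\s'\"#]+)@([^\s'\"#]+)")


def find_action_refs(text, source):
    """Return {(action, ref): [(source, line_number), ...]} for one workflow file."""
    found = defaultdict(list)
    for number, line in enumerate(text.splitlines(), start=1):
        match = USES.match(line)
        if match:
            found[(match.group(1), match.group(2))].append((source, number))
    return dict(found)


def scan_workflows(root):
    """Collect action references from every workflow file under <root>/.github/workflows."""
    merged = defaultdict(list)
    workflow_dir = Path(root) / ".github" / "workflows"
    for pattern in ("*.yml", "*.yaml"):
        for path in sorted(workflow_dir.glob(pattern)):
            text = path.read_text(encoding="utf-8")
            for key, places in find_action_refs(text, str(path)).items():
                merged[key].extend(places)
    return dict(merged)
```

Calling `scan_workflows(".")` from a repository root gives you every action and version in use, plus the file and line where each one lives, which is the "context matters" part.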

The "What I Actually Learned" Section (AKA Why You're Still Reading This)

Now, let me share the five most important lessons I learned while building this tool - lessons that might save you from some of the facepalms I experienced along the way.

1. Code Organisation - Or How I Learned to Stop Worrying and Love Modules

The first version of the code worked. Sort of. It was like my cousin's bedroom - everything was there, but good luck finding anything specific. The code was one big, happy (read: messy) family of functions all living together in perfect chaos.

After staring at this digital spaghetti for a while, I had a moment of clarity: "Single Responsibility Principle, you beautiful thing!" I sat down with Cursor AI and laid out a clear plan: separate modules for file reading, version parsing, comparison logic, and reporting. It was like watching a hoarder's house transform into a minimalist's dream - same stuff, just organised in a way that doesn't make you want to cry.

Lessons learned: If you want to build something even remotely maintainable, plan it first - or ask the AI to do it for you - before diving into the implementation. It'll help you structure your code properly from the start.

2. The Dependency Dating Game

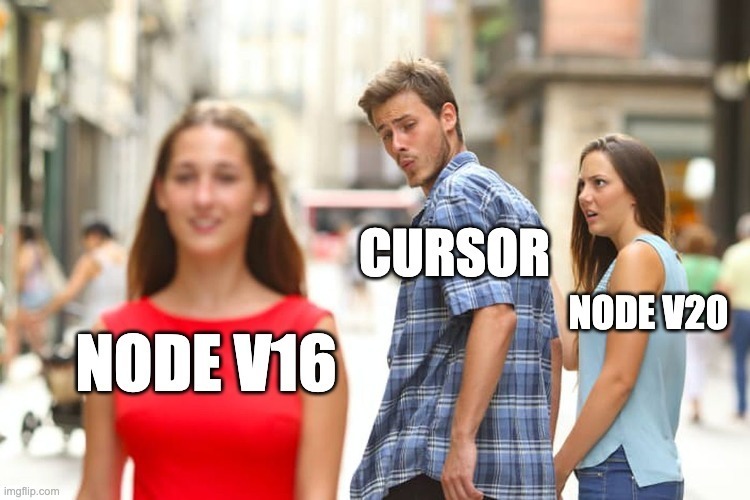

Cursor AI has this weird habit of suggesting older versions of dependencies, like it's stuck in some nostalgic time warp (not to mention that as a millennial guy, I still believe 1990 was 20 years ago - don't try to argue with me!). "Oh, you want to use Node.js? How about version 16? It was really popular back in the day!"

On the other hand, I had one particularly memorable moment when it tried to set me up with Node 22, completely ignoring the fact that GitHub Actions runners are still living in 2023. It was like trying to use a time machine to attend a party that hasn't happened yet.

Lessons learned: Always be specific about wanting the latest and greatest, but still well-supported, versions. It's like online dating - you have to be clear about what you're looking for, or you'll end up with some interesting surprises.

3. The Version Comparison Circus and the Great Debugging Mystery

If you think comparing versions is straightforward, I have a bridge to sell you. What started as a simple "check if this version is newer than that one" quickly turned into a complex logic puzzle.

I had to handle:

- Branch names (the hipsters of versioning)

- Tags (the organised ones)

- Commit SHAs (the mysterious ones)

- Custom versions (the rebels)
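A first step towards taming that zoo is simply working out which animal you're looking at. Here's a minimal Python sketch of such a classifier. Everything in it is an assumption for illustration: the name `classify_ref`, the category labels, and the idea that the repository's known branches and tags are passed in as sets (in a real tool they would have to come from the GitHub API).

```python
import re

FULL_SHA = re.compile(r"^[0-9a-f]{40}$")
# "Semver-like" is looser than strict semver: it also accepts v4 and v4.1.
SEMVER_LIKE = re.compile(r"^v?\d+(\.\d+){0,2}(-[0-9A-Za-z.-]+)?$")


def classify_ref(ref, branches=frozenset(), tags=frozenset()):
    """Guess what kind of reference follows the '@' in a `uses:` line."""
    if FULL_SHA.match(ref):
        return "sha"          # a pinned 40-character commit hash
    if ref in branches:
        return "branch"       # e.g. main or master
    if SEMVER_LIKE.match(ref):
        return "semver"       # e.g. v4, v4.1 or v4.1.2-beta.1
    if ref in tags:
        return "custom-tag"   # a tag that follows no numbering scheme
    return "unknown"          # report it instead of guessing


for ref in ("v4", "main", "release-2024", "a" * 40, "latest"):
    print(ref[:12], classify_ref(ref, branches={"main"}, tags={"release-2024"}))
```

The order of the checks is a design choice: a ref that looks like a full SHA is treated as one even if someone, somewhere, named a branch that way.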

At one point, I felt like I was writing a sophisticated version matching system. Picture this: you're Sheldon Cooper trying to organise his comic books (or in this case, version numbers):

- "Oh, you both point to the same commit? That's a match! 🔄" (like finding two copies of the same comic in different editions)

- "Different tags but same code? Still a match! ✅" (like realizing that "The Flash #1" and "Barry Allen's First Appearance" are the same issue)

- "One's a branch and one's a tag? Let's see what your commit dates say... ⏱️" (like determining if a comic is a first print or a reprint)
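Those three rules can be sketched roughly like this in Python. Treat it as an illustration under stated assumptions rather than the tool's real logic: `ResolvedRef` and `compare_refs` are hypothetical names, each ref is assumed to have already been resolved to a commit SHA and commit date, and the three return values loosely mirror the three report statuses.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ResolvedRef:
    name: str                         # what the workflow says, e.g. "v4" or "main"
    commit_sha: str                   # the commit that name currently points to
    commit_date: Optional[datetime]   # None if the date could not be looked up


def compare_refs(current, latest):
    """Return 'up-to-date', 'outdated' or 'unknown' for a pair of resolved refs."""
    # Same commit means same code, whatever the two names look like.
    if current.commit_sha == latest.commit_sha:
        return "up-to-date"
    # Different commits: without dates there is nothing left to compare.
    if current.commit_date is None or latest.commit_date is None:
        return "unknown"
    # Otherwise the commit dates decide, which also covers branch-vs-tag pairs.
    if current.commit_date < latest.commit_date:
        return "outdated"
    return "up-to-date"
```

Comparing by commit first is what makes two differently named tags on the same commit come out as a match, without any string parsing at all.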

But here's the kicker: debugging this circus was about as fun as trying to find a needle in a haystack... while blindfolded... and the haystack is on fire. The logging was about as helpful as a chocolate teapot, leaving me with more "What in the name of all that's holy is happening here?" moments than I care to admit.

I had to sit Cursor AI down for a serious chat: "Look, if you're going to generate this version comparison logic, at least tell me what it's doing!" After some serious prompting (and maybe a few tears), we got proper logging in place. Now it's like having a GPS that actually tells you where you are, instead of just saying "You're somewhere in Europe, good luck!"

And just like on dating apps (I hope you're not tired of this topic yet...), sometimes you get unexpected matches. Two versions might look completely different (v1.0.0 and v1.0.0-hotfix-urgent-fix-please-dont-delete), but they're actually the same code underneath - like two profiles with different pictures but the same person. It's enough to make you want to run rm -rf /* on the whole system and call it a day. #DontTryThisAtHome

Lessons learned: When AI generates complex logic, force it to narrate its thinking - ask for step-by-step comments or interim outputs, or it'll ghost you right when things break.
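What "narrating its thinking" can look like in practice: a small Python sketch using the standard `logging` module. The logger name, the function name `describe_match`, and the dict layout are all assumptions made up for this example, not the tool's real code.

```python
import logging

logger = logging.getLogger("versioner")  # hypothetical logger name


def describe_match(action, current, latest):
    """Compare two resolved refs (dicts with 'name' and 'sha') and log every step."""
    logger.debug(
        "%s: comparing %s (%s) with %s (%s)",
        action, current["name"], current["sha"][:7], latest["name"], latest["sha"][:7],
    )
    if current["sha"] == latest["sha"]:
        logger.info(
            "%s: %s and %s point to the same commit, treating as up to date",
            action, current["name"], latest["name"],
        )
        return True
    logger.info(
        "%s: %s and %s point to different commits, needs a closer look",
        action, current["name"], latest["name"],
    )
    return False


if __name__ == "__main__":
    # DEBUG level shows the inputs as well as the decision.
    logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(message)s")
    describe_match(
        "actions/checkout",
        {"name": "v4", "sha": "1111111aaaaaaa"},
        {"name": "v4.2.2", "sha": "1111111aaaaaaa"},
    )
```

The point is that every branch of the logic logs both its inputs and its conclusion, so when a result looks wrong you can see which rule produced it.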

4. The Performance Tango

The first version worked, but it was about as efficient as a sloth running a marathon. Every time it found the same dependency in different files, it would check it again, like a goldfish with short-term memory loss.

I had a lightbulb moment: "Wait a minute... we don't need to check actions/checkout a million times just because it's used in every workflow file!" It was like realising you don't need to count every grain of rice in a bag - one sample will do just fine.

So I implemented a "unique dependencies" system, which was basically like putting all the versions in a spreadsheet and saying "Okay, you've already checked this one, move along!" Suddenly, the tool went from "I'll get back to you next week" to "Here's your report, hot off the press!"

Lessons learned: AI won't optimise unless you explicitly ask it to - so prompt for performance from the beginning, not just for functionality, unless you're into painfully slow surprises.
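The "unique dependencies" idea boils down to remembering what you've already checked. Here's a minimal Python sketch, with the caveat that `check_unique` and the shape of its inputs are hypothetical and only there to illustrate the technique: each distinct action-and-version pair is checked once, and the result is reused for every place it appears.

```python
def check_unique(usages, check):
    """Check each distinct (action, ref) pair once and reuse the result.

    usages: iterable of (action, ref, location) tuples
    check:  callable(action, ref) -> status, assumed to be the slow part
            (for example, a lookup against a remote API)
    """
    results = {}
    report = []
    for action, ref, location in usages:
        key = (action, ref)
        if key not in results:
            # First sighting: do the expensive check and remember the answer.
            results[key] = check(action, ref)
        report.append((location, action, ref, results[key]))
    return report
```

With `actions/checkout@v4` in twenty workflow files, that is one slow check instead of twenty, while the report still lists all twenty locations.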

5. The AI-Human Tango

Working with Cursor AI is like having a really smart but slightly overenthusiastic developer. It means well, but sometimes it gets a bit too excited and starts changing things that were working just fine. For example, when reporting outdated versions, elements such as the commit SHA in the code's output would randomly disappear or change, leaving me wondering if they had ever been implemented correctly.

Besides that, I spent what felt like an eternity fixing tests. Just when I thought we were making progress, I'd get that dreaded message: Sorry, you've used up your 25 requests in this conversation. If you want to continue, please start a new prompt. It was like trying to have a conversation with someone who keeps saying, "Sorry, I have to go now", just when things were finally getting interesting.

And here's a pro tip: those premium requests aren't unlimited, so make each one count. Imagine asking a magic genie to show you a photo of someone smiling. If you don't specify exactly what you want, you might end up, at best, with what some call a "Polish smile" - which, as a Polish guy, I can tell you falls far short of a full, genuine smile. You want that complete, authentic smile, not a half-hearted smirk. (If you're still not sure what a "Polish smile" is, just Google it!)

The key is to work in small, manageable chunks and keep a close eye on what it's doing. It's like teaching someone to cook - you don't hand them the whole kitchen on day one, and you definitely don't let them near the pierogi until they've proven they can handle the basics.

Lessons learned: Don't throw your entire feature idea into one giant prompt. Work incrementally, commit working code often, validate each result - and brace yourself for some unexpected changes that AI might sneak in just for fun.

Wrapping Up

Working with Cursor AI on this project was like having a coding partner who's really smart but occasionally needs you to explain the obvious (still, believe me - sometimes it's better than AI trying to be too smart...). The key was learning how to ask for what I needed and when to trust my own judgment over the AI's suggestions.

If you've made it this far, you're either really interested in version comparison or really bored at work. Either way, I'd love to hear your thoughts! Star the repo, open an issue, add something to the Who's Using This? section (it's currently as empty as my coffee cup at 4 PM, but I'm hoping that'll change), or just drop by to say hi.

And if you find a bug, well... let's just say I won't be surprised (in fact, I just found one myself: from time to time, the tool runs into GitHub API rate limits). After all, version comparison is hard! ;-)
