Logical data warehouses can implement multi-source mixed computations, but require configuring views and preprocessing data, resulting in an overly heavyweight architecture. DuckDB offers a lightweight solution but lacks a native Oracle connector, rendering custom development too complex. esProc, as another lightweight solution, supports the common JDBC interface, enabling mixed computations between any RDBs.

First, download and install esProc (Standard Edition recommended),Try It Free~~

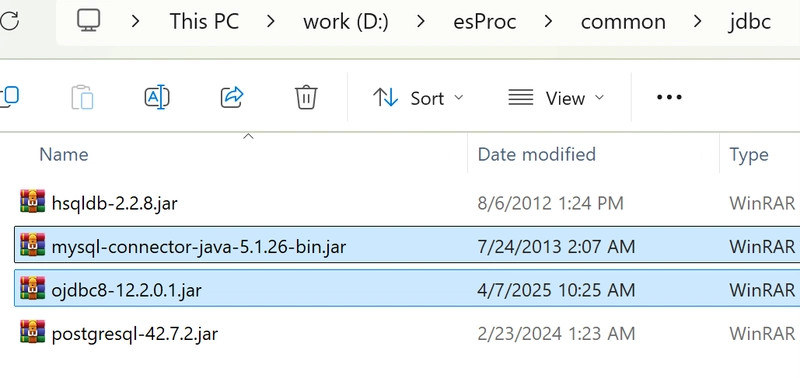

Place the database’s JDBC driver in the directory ‘[Installation Directory]\common\jdbc,’ which is one of esProc’s classpath directories. The following are the JDBC drivers for MySQL and Oracle:

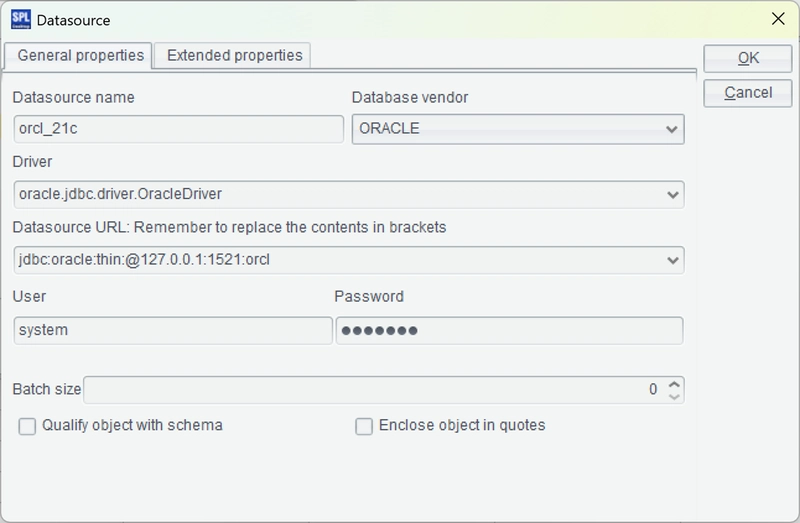

Open the esProc IDE. From the menu bar, select Tool -> Connect to Data Source to create new JDBC data sources. Enter the connection information for MySQL and Oracle.

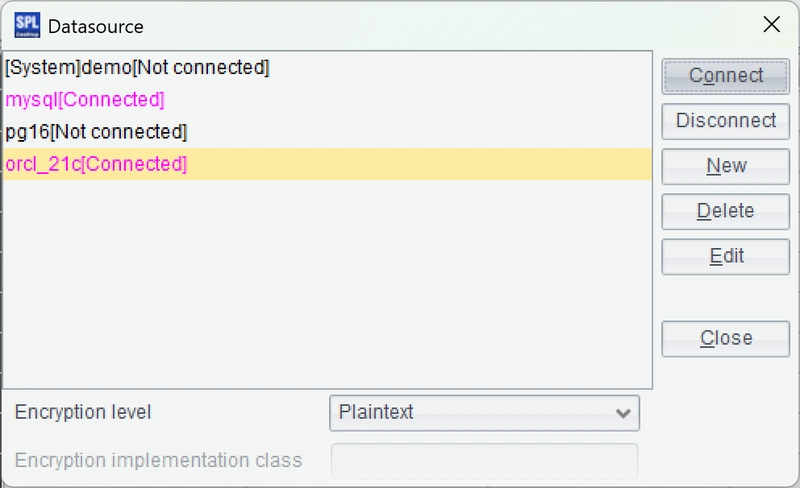

Return to the data source interface and connect to the two data source we just configured. If the data source names turn pink, the configuration is successful.

Create a new script in the IDE, write SPL statements, connect to Oracle, and load the data using a simple SQL query:

=connect("orcl_21c").query@x("select * from ALifeSurvey")

Press Ctrl+F9 to execute the script. The execution results will appear as a data table on the right side of the IDE, which is convenient for debugging SPL code.

Once the data is loaded successfully, we can begin to develop the cross-database mixed computation code:

Filter the MySQL vehicle insurance survey table using parameters, then perform an inner join with the Oracle life insurance survey table based on phone number. Group the results by age and marital status from the life insurance survey table, and calculate the average vehicle purchase price from the vehicle insurance survey table for each group.

Save the above script to a directory (e.g., D:\data\SurveyCross.splx). Run the script to see the results:

The above code loads the data into memory before joining, which is suitable for small data-volume scenarios. For large data-volume scenarios, the data should first be sorted in SQL, and then retrieved and joined simultaneously, which is known as merge and association. The code is:

The code changes little. It uses the cursor function to retrieve data and the joinx function to merge and associate data, and adds time statistics in A1 and A5.

The execution time is displayed in the log window in the lower right corner. This shows that for two tables with data volumes exceeding ten million rows, cross-database mixed computation takes approximately 100 seconds.

Having completed debugging in the IDE, the next step is to configure the Java application environment.

Locate the esProc JDBC-related jars, esproc-bin-xxxx.jar and icu4j_60.3.jar, in the [installation directory]\esProc\lib directory.

Place the two jars and the database’s JDBC driver in the classpath of the Java development environment.

Next, locate the esProc configuration file, raqsoftConfig.xml, in the [installation directory]\esProc\config directory. Also, place it in the classpath of the Java development environment.

Place the two jars and the database’s JDBC driver in the classpath of the Java development environment.

In the configuration file, modify the mainPath setting, which specifies the default path for scripts and other files. Note that the data source information is also in this configuration file.

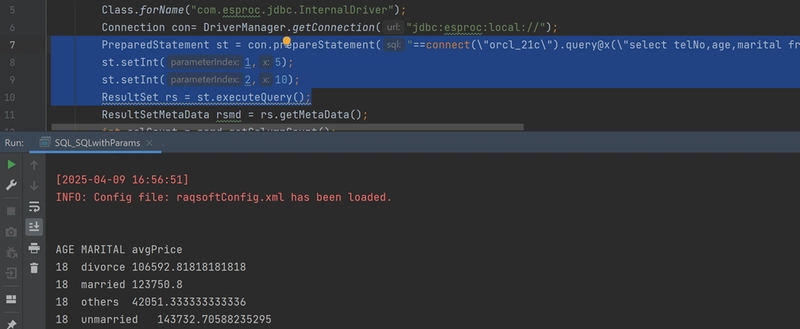

Now, we can write Java code and use esProc JDBC to execute the SPL script:

Class.forName("com.esproc.jdbc.InternalDriver");

Connection con= DriverManager.getConnection("jdbc:esproc:local://");

PreparedStatement st = con.prepareCall("call SurveyCross(?,?)");

st.setInt(1,10);

st.setInt(2,20);

ResultSet rs = st.executeQuery();

After execution, the results are visible:

As you can see, calling an SPL script with esProc JDBC is the same as calling a stored procedure with a database JDBC driver.

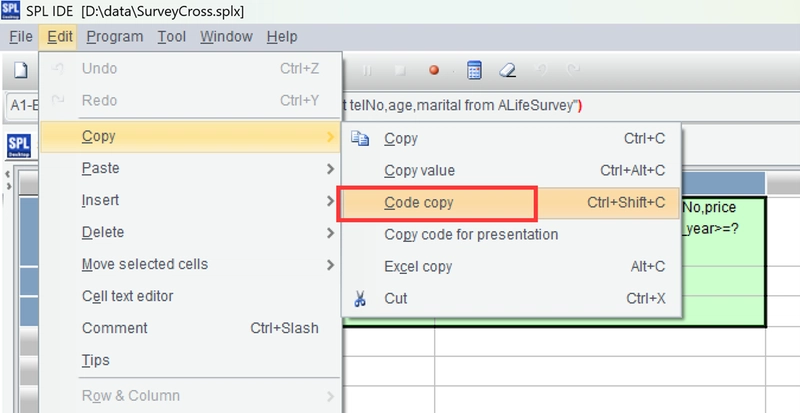

SPL scripts are not mandatory; you can convert them to SPL code and embed them in Java, much like SQL. To do this, open the script file in the IDE, select the cells containing the code (A1-B3), and then click “Edit -> Copy -> Code copy”. This will convert the multi-row, multi-column cellset code to single-line SPL code, which is temporarily stored on the clipboard.

Copy the converted SPL code into the Java code, replace parameter names with question marks, and then execute the code:

PreparedStatement st = con.prepareStatement("==connect(\"orcl_21c\").query@x(\"select telNo,age,marital from ALifeSurvey\")\t=connect(\"mysql\").query@x(\"select telNo,price from AVehicleSurvey where purchase_year>=? and purchase_year<=?\",?,?)\n=join(A1:L, long(TELNO); B1:V, telNo)\t\n=A2.groups(L.AGE, L.MARITAL; avg(V.price):avgPrice)\t");

st.setInt(1,5);

st.setInt(2,10);

ResultSet rs = st.executeQuery();

It can be seen that calling SPL code in Java is just like calling SQL code. The results are then visible upon execution:

esProc offers extensive data source support, including not only RDB but also NoSQL, BigData, cloud storage, message queues, and local files, all of which can be used in mixed computations. Visit the official website for more information.

Top comments (0)

Some comments may only be visible to logged-in visitors. Sign in to view all comments.