🧭 Why ForgeMT Exists

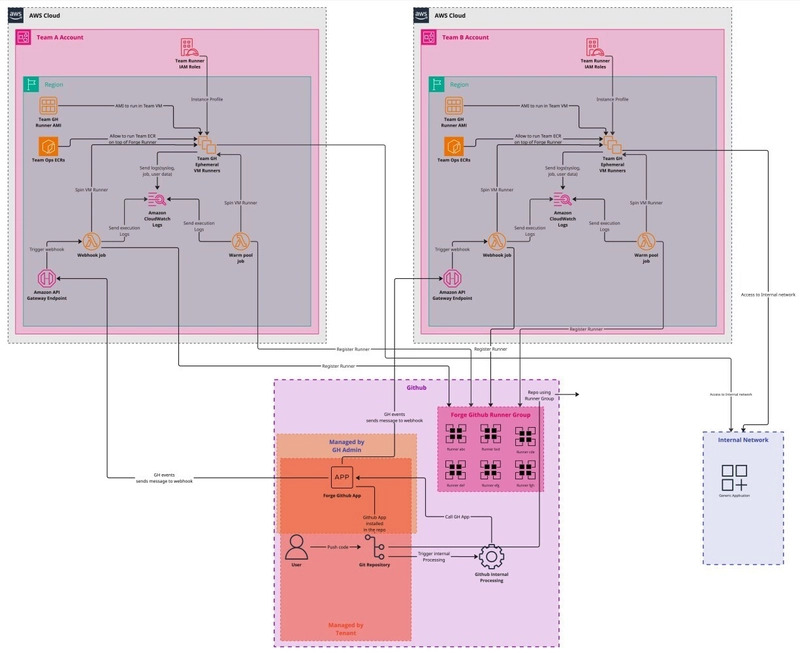

ForgeMT is a centralized platform that enables engineering teams to run GitHub Actions securely and efficiently — without building or managing their own CI infrastructure.

It provides ephemeral runners (EC2 or Kubernetes), strict tenant isolation, and full automation behind a hardened, shared control plane.

Before ForgeMT, every team in Cisco’s Security Business Group had to build and maintain their own CI setup — leading to duplicated effort, inconsistent security, slow onboarding, and rising operational overhead.

ForgeMT replaced this fragmented approach with a secure, scalable, multi-tenant platform — saving time, reducing risk, and accelerating adoption.

⚡ Fast Facts (ForgeMT Impact)

- ⏱️ 80+ engineering hours saved/month per team

- 📦 40,000+ GitHub Actions jobs/month

- ✅ 99.9% success rate across tenants

This post explains:

- 🚀 Why ForgeMT was needed

- 💼 What impact it had: from reliability to cost savings and security compliance

- 🧱 How it works: a deep dive into the architecture

👉 Jump to what matters most:

- 💼 Business Impact – For leadership and stakeholders

- 🏗️ Architecture – For platform engineers and DevOps

- 🧠 Or keep reading for full technical context and background

🚨 From Fragmented CI to Scalable, Secure Solutions: The Journey to ForgeMT

Credit: Prototype by Matthew Giassa (MASc, EIT), who championed the Philips Labs GitHub Runner module across multiple teams.

Before ForgeMT, each team used its own CI stack—Jenkins, Travis, or Concourse. While these tools met local needs, they created long-term issues: inconsistent patching, security gaps, and poor scalability.

Matthew built a promising PoC, but it was a siloed setup with manual AWS, GitHub, and Terraform steps. Rigid subnetting caused IPv4 exhaustion, and teams copy-pasting Terraform modules led to high maintenance overhead and config drift.

To address this complexity, I drove the end‑to‑end technical design and implementation of ForgeMT—a centralized, multi‑tenant GitHub Actions runner service on AWS—while coordinating with infrastructure, security, and platform stakeholders to ensure a smooth production launch.

At scale, teams were running thousands of Actions jobs across dozens of isolated environments—each with its own patch cadence, network quirks, and IAM policies. ForgeMT unifies these into a single control plane, delivering consistent security, predictable performance, and dramatically simplified operations.

For detailed business impact metrics (time saved, reliability gains, cost optimization), see the Business Impact section.

It builds on proven ephemeral EC2 and EKS/ARC runner modules, adding:

- IAM/OIDC-based tenant isolation

- Built-in observability (metrics, logs, dashboards)

- Automation for patching, Terraform drift, repo onboarding, and global Actions locks

By consolidating infrastructure into a hardened control plane, ForgeMT kept security and compliance at the forefront while enabling rapid onboarding and eliminating manual patching. IPv4 exhaustion was solved by scaling pod-based runners on EKS with the Calico CNI, and tenant isolation relies on IAM roles and security groups (SGs) to control access. The result preserves the security and flexibility of the original prototype in a secure, compliant, and scalable platform.

📊 Business Impact

ForgeMT has met the demands of a wide range of teams while scaling securely under Cisco’s guidance, optimizing cloud spend, and increasing reliability for all stakeholders:

- Dramatic time savings (80+ hours/month per team): By automating every aspect of the runner lifecycle (OS patching, Terraform module updates, ephemeral provisioning, and even repository registration), ForgeMT freed teams from manual CI maintenance so they could refocus on shipping features.

- Optimized cloud spend: Spot and On-demand Instances, right‑sized instance selection per job type, and EKS + Calico’s IP‑efficient networking cut infrastructure costs without slowing builds. ForgeMT also supports warm instance pools for high-frequency jobs, avoiding cold starts when speed is critical—striking a smart balance between performance and cost.

- Rock‑solid reliability (99.9% success over 40K+ jobs/month): Centralizing infrastructure eliminated snowflake environments and drift, reducing job failures caused by misconfiguration or stale runners to near zero.

- Enterprise‑grade security & compliance: IAM/OIDC per‑tenant isolation, CIS‑benchmarked AMIs, and end‑to‑end logging into Splunk ensured every action was auditable, vault‑grade credentials were never exposed, and internal audits passed with zero findings.

- True multi‑tenancy at scale: Teams retain autonomy over AMIs, ECRs, and workflow definitions while ForgeMT transparently handles networking, isolation, and autoscaling—supporting dozens of teams without additional IP consumption or operational overhead.

- AWS account isolation per tenant: Each tenant can have one or more individual AWS accounts, with full control over their own network setup. This includes the flexibility to configure internal or public subnets within their AWS accounts, ensuring strong security boundaries and independent resource management without ForgeMT managing their network.

Together, these outcomes turned a fractured, high‑toil CI landscape into a self‑service platform that scales securely, reduces costs, and accelerates delivery.

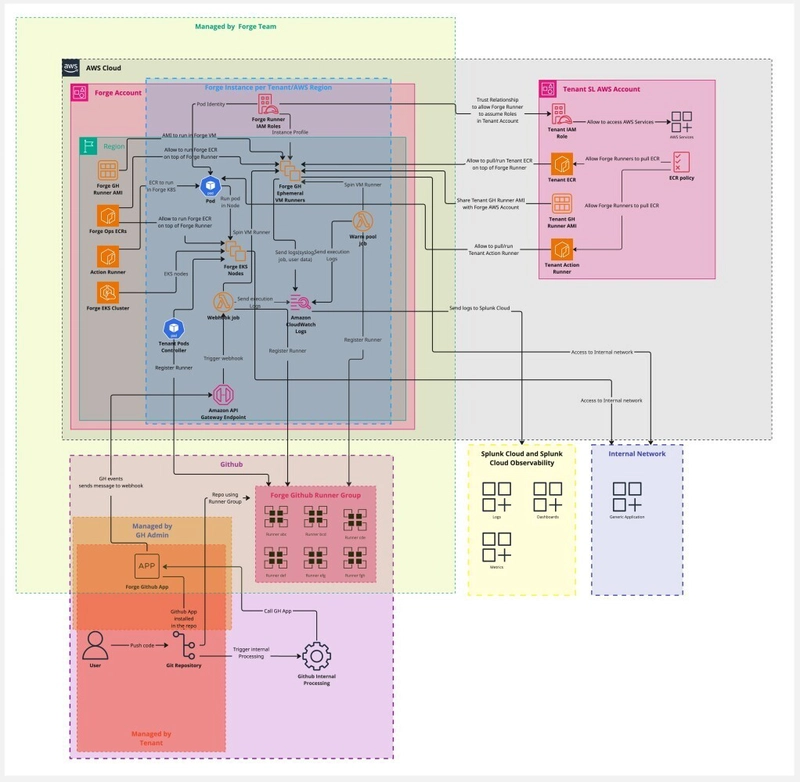

⚙️ ForgeMT Architecture Overview

📦 Core Components & Technical Foundations

These results are enabled by the following technical components:

- Terraform module for EC2 runners: Provisions ephemeral EC2-based GitHub Actions runners, with auto-scaling and cost optimization through AWS Spot and On-Demand Instances. Runners are created on demand and terminated after use, in line with the ephemeral nature of ForgeMT's infrastructure.

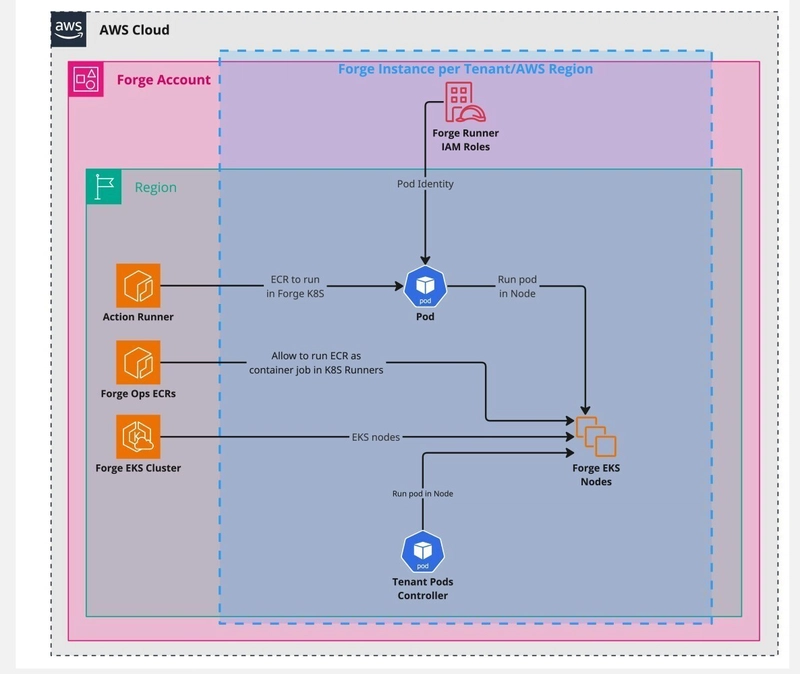

- ARC (Actions Runner Controller): Employed to manage EKS-based GitHub Actions runners, enabling containerized, isolated job execution via Kubernetes. This approach leverages Kubernetes' orchestration capabilities for efficient scaling and management of CI/CD workloads.

- OpenTofu + Terragrunt: Implemented for Infrastructure as Code (IaC), ensuring region-, account-, and tenant-specific infrastructure deployments with DRY (Don't Repeat Yourself) principles. This methodology facilitates consistent and repeatable infrastructure provisioning across multiple environments.

- IAM Trust Policies: Adopted to secure runner access using short-lived credentials via IAM roles and trust relationships, eliminating the need for static credentials and enhancing security.

- Splunk Cloud & O11y (Observability): Integrated for centralized logging and metrics aggregation, providing real-time observability across ForgeMT components. This setup enables detailed telemetry, including per-tenant dashboards for monitoring resource usage and optimization insights.

- Teleport: Utilized to provide secure, auditable SSH access to EC2 runners and Kubernetes pods, enhancing compliance, access control, and auditing capabilities.

- EKS + Calico CNI: Leveraged to scale pod provisioning without consuming additional VPC IPs, utilizing Calico's efficient networking. This setup ensures tenant isolation and optimizes network resource usage within limited VPC subnets.

- EKS + Karpenter: Enables dynamic, demand-driven autoscaling of Kubernetes worker nodes. Automatically provisions the most suitable and cost-effective EC2 instance types based on real-time pod requirements. Supports spot and on-demand capacity, prioritizing efficiency and performance. Warm pools can be configured to reduce cold start latency while maintaining cost control—ideal for high-churn CI/CD workloads.

These technologies form the backbone of ForgeMT, enabling its robust performance and scalability.
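To make the Karpenter piece more concrete, here is a minimal sketch of a NodePool that allows both Spot and On-Demand capacity and removes idle nodes quickly. It assumes the Karpenter v1 API. The pool name, the `default` EC2NodeClass, the CPU limit, and the consolidation settings are illustrative placeholders, not ForgeMT's actual configuration.

```yaml
# Sketch only: names and limits are assumptions, not taken from ForgeMT.
apiVersion: karpenter.sh/v1
kind: NodePool
metadata:
  name: ci-runners              # hypothetical pool name
spec:
  template:
    spec:
      nodeClassRef:
        group: karpenter.k8s.aws
        kind: EC2NodeClass
        name: default           # assumes an EC2NodeClass named "default" exists
      requirements:
        # Let Karpenter choose between Spot and On-Demand capacity.
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["spot", "on-demand"]
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]
  limits:
    cpu: "200"                  # cap on total CPU this pool may provision
  disruption:
    # Scale in nodes once runner pods have finished.
    consolidationPolicy: WhenEmptyOrUnderutilized
    consolidateAfter: 1m
```

Short consolidation windows tend to suit CI workloads, where nodes are busy in bursts and idle in between, but the right value depends on how bursty a tenant's jobs are.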

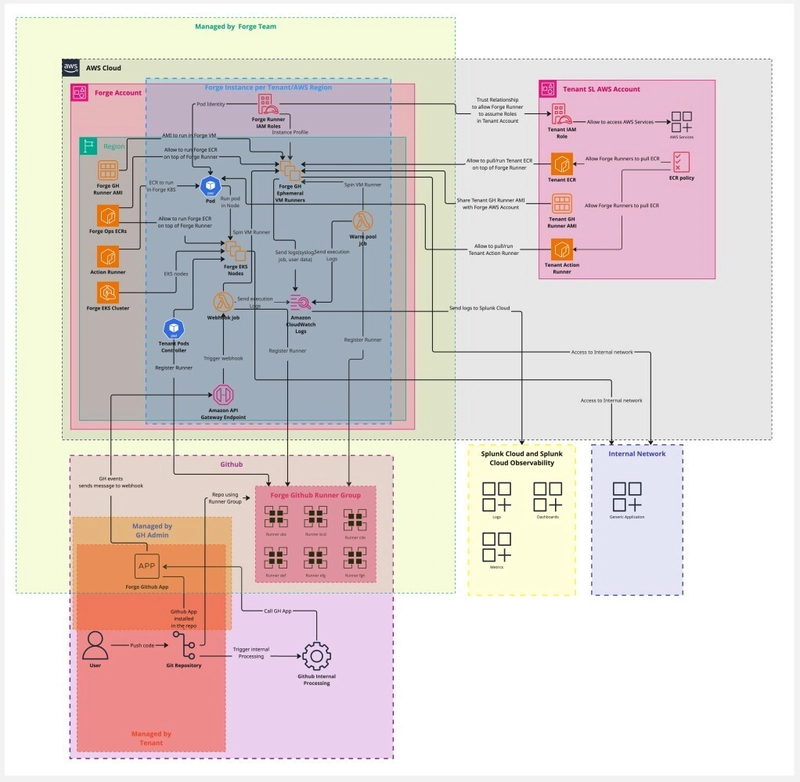

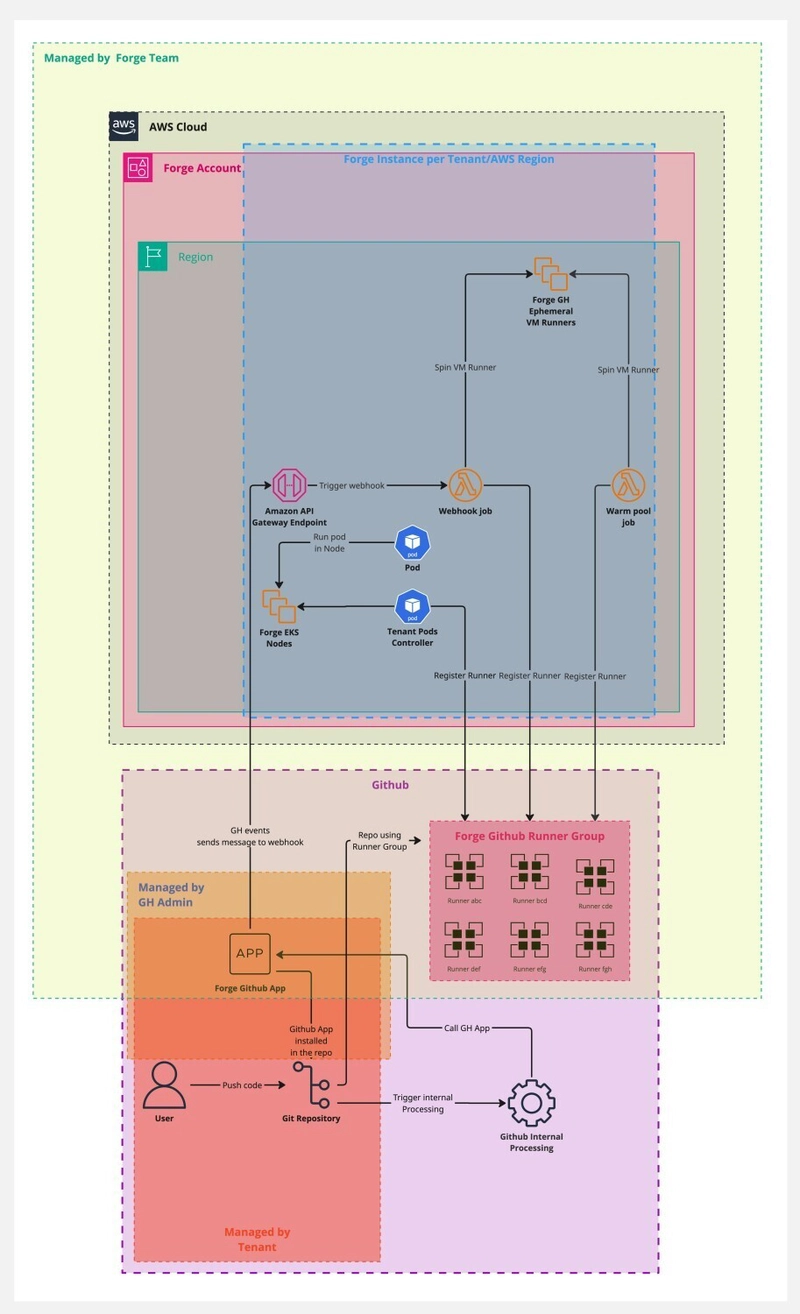

🧠 ForgeMT Control Plane (Managed by Forge Team)

The ForgeMT control plane hosts shared infrastructure and reusable IaC modules:

- ForgeMT GitHub App: Installed on tenant repositories to listen for GitHub workflow events and dynamically register ephemeral runners.

- ForgeMT AMIs & Forge ECR: Default base images for runners (VMs and containers).

- Terraform Modules: Each tenant-region pair deploys an isolated ForgeMT instance.

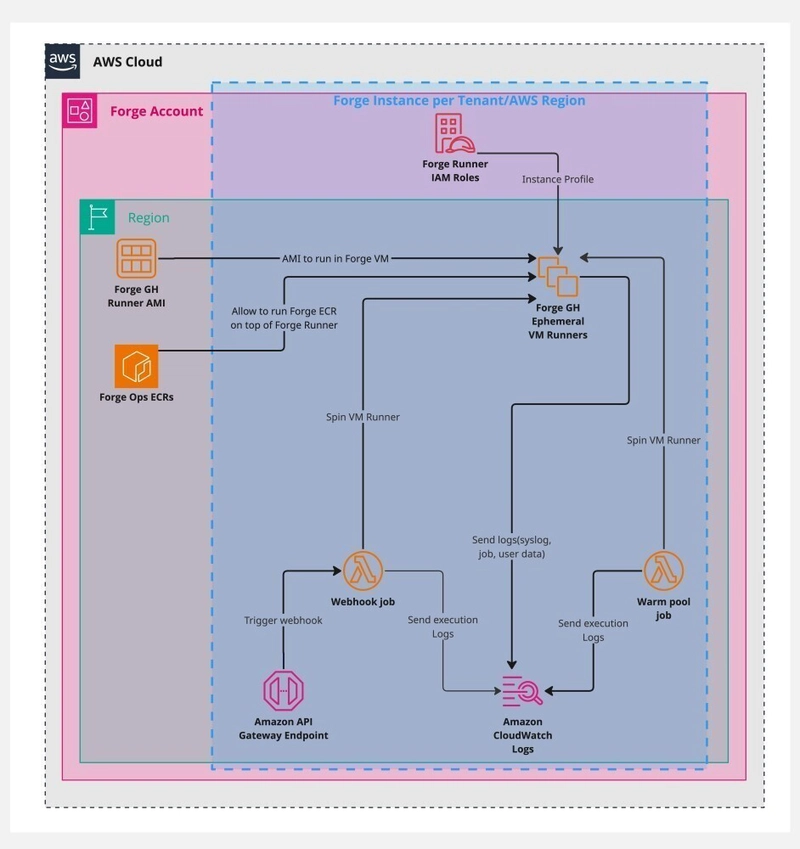

- API Gateway + Lambda: Processes GitHub webhook events to trigger runner provisioning.

- Centralized Logging: Runner logs are forwarded to CloudWatch, then into Splunk Cloud Platform.

- Centralized Observability: All AWS metrics are sent to Splunk O11y Cloud.

- Teleport: Secure, role-based SSH access to VM runners (if needed), with session logging.

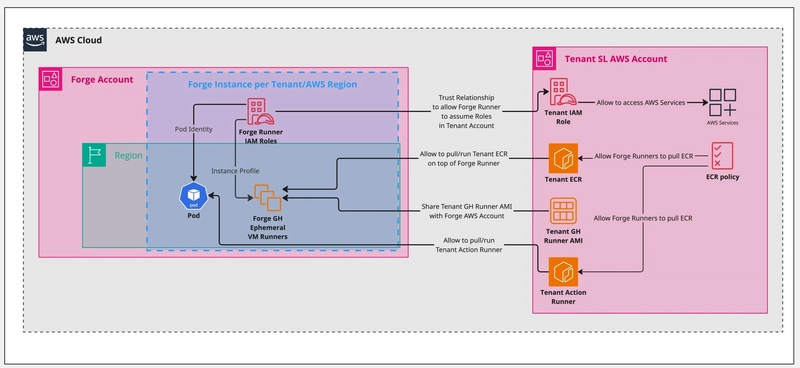

🏗️ Tenant Isolation

Each ForgeMT deployment is dedicated to a single tenant, ensuring full isolation within a specific AWS region. This approach guarantees that IAM roles, policies, services, and AWS resources are scoped uniquely for each tenant-region pair, enforcing strict security, compliance, and minimizing the blast radius.

💻 Runner Types

🧱 AWS EC2-Based Runners (VM and Metal)

- Ephemeral Runner Provisioning: EC2 runners are provisioned using Forge-provided AMIs or tenant-specific custom AMIs. These instances are pre-configured with the necessary tools to execute CI/CD jobs.

- Workload Execution: Jobs can be executed directly on the EC2 instance or via containers, using container: blocks in GitHub workflows.

- Security: Authentication to tenant AWS resources is handled through IAM roles and trust policies, eliminating the need for static credentials and ensuring dynamic, secure access control.

- Ephemeral Nature: Once a job is completed, the EC2 instance is terminated, maintaining a completely stateless environment.
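As an illustration of the container-based execution mentioned above, the following workflow runs its steps inside a container on an EC2 runner. The `runs-on` labels are hypothetical, since each tenant defines its own, and `node:20` stands in for any image the runner is able to pull.

```yaml
name: build
on: [push]

jobs:
  build:
    # Hypothetical labels; replace with the labels your ForgeMT tenant exposes.
    runs-on: [self-hosted, forge-ec2-standard]
    # All steps below execute inside this container rather than on the host.
    container:
      image: node:20
    steps:
      - uses: actions/checkout@v4
      - run: node --version
```

Dropping the `container:` block runs the same steps directly on the EC2 instance, using whatever tooling the AMI ships with.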

☸️ EKS-Based Runners (Kubernetes)

- Kubernetes-Orchestrated Actions: Using the Actions Runner Controller (ARC), EKS runners are provisioned as pods within an Amazon EKS cluster.

- Resource Isolation: Each tenant is assigned a dedicated namespace, service account, and IAM role, ensuring strict isolation of resources and permissions.

- Container Images: Runners can pull container images from either the Forge ECR or the tenant’s own ECR, depending on the configuration.

- Scalability: EKS is ideal for high-scale operations, leveraging Kubernetes' orchestration capabilities to manage the lifecycle of runners efficiently.
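For readers unfamiliar with ARC, the sketch below shows what per-tenant Helm values for a runner scale set could look like. It assumes ARC's runner scale set mode (the `gha-runner-scale-set` chart); the article does not say which ARC mode ForgeMT uses. The organization URL, secret name, runner group, and service account are made-up placeholders.

```yaml
# Sketch only: values for the gha-runner-scale-set Helm chart.
# Every name below is a hypothetical placeholder for one tenant.
githubConfigUrl: https://github.com/example-org
githubConfigSecret: tenant-a-github-app     # Kubernetes secret holding GitHub App credentials
runnerGroup: tenant-a                       # GitHub Runner Group for this tenant
minRunners: 0                               # scale to zero when idle
maxRunners: 20                              # upper bound on concurrent runner pods
containerMode:
  type: dind                                # Docker-in-Docker for jobs that build images
template:
  spec:
    serviceAccountName: tenant-a-runner     # bound to the tenant's IAM role
    containers:
      - name: runner
        image: ghcr.io/actions/actions-runner:latest
        command: ["/home/runner/run.sh"]
```

Installing one such release per tenant namespace keeps the namespace, service account, and runner group aligned with a single tenant.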

🔁 Warm Pool

- Reducing Startup Latency: An optional warm pool can be configured for both EC2 and EKS runners, pre-initializing instances or pods to reduce waiting times during high demand.

- Less critical for EKS: For EKS runners, warm pools matter much less, since Kubernetes already provides rapid scaling and efficient pod initialization.

- Usage in EC2: The warm pool helps minimize the initialization time for EC2 instances, resulting in faster job execution times for critical tasks.

💻 Examples of Runner Types in ForgeMT

ForgeMT offers flexibility for tenants to configure multiple runner types simultaneously, adapting to their workload needs. Each tenant can define as many runners as needed, with a parallelism limit set per tenant and runner type. Here are some typical runner examples and their use cases:

🧱 EC2 Runners

- Small: Lightweight instances for tasks with minimal resource usage, such as quick tests or linting.

- Standard: Instances for balanced workloads, ideal for code compilation or integration tests.

- Large: High-performance instances for tasks requiring more processing power, such as complex builds or load tests.

- Bare Metal: Bare-metal instances for applications that need full control over the hardware, such as simulations or intensive processing tasks.

☸️ Kubernetes Runners

- Dependabot: Used for automated dependency update jobs.

- Light (k8s): Runners for simple tasks that don't require Docker, like linting or unit test execution.

- Docker-in-Docker (DinD): Used for jobs that require Docker inside Kubernetes, such as image building or integration tests involving containers.
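A single workflow can mix the runner types listed above by giving each job a different `runs-on` value. The sketch below is illustrative: the labels are hypothetical, and the `make` targets assume the repository has a Makefile that defines them.

```yaml
name: ci
on: [pull_request]

jobs:
  lint:
    # Lightweight Kubernetes runner, no Docker needed (hypothetical label).
    runs-on: [self-hosted, forge-k8s-light]
    steps:
      - uses: actions/checkout@v4
      - run: make lint

  image:
    needs: lint
    # Docker-in-Docker runner for building container images (hypothetical label).
    runs-on: [self-hosted, forge-k8s-dind]
    steps:
      - uses: actions/checkout@v4
      - run: docker build -t example:${{ github.sha }} .

  load-test:
    needs: image
    # Large EC2 runner for resource-heavy work (hypothetical label).
    runs-on: [self-hosted, forge-ec2-large]
    steps:
      - uses: actions/checkout@v4
      - run: make load-test
```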

🔄 Configurable Parallelism per Tenant and Runner Type

- Each tenant can configure their own set of runners and use different EC2 instance types or Kubernetes pods simultaneously.

- The parallelism limit can be configured per runner type and tenant, ensuring that running multiple jobs does not overload resources.

- This allows each team to run jobs in parallel based on their needs without impacting the performance of other tenants or jobs.

Considerations

- Choosing the Right Runner: Depending on workload complexity and job requirements, you may choose EC2 or EKS runners. EKS is generally preferred for lightweight, scalable workloads, while EC2 may be necessary for jobs with specific hardware or memory requirements.

⚙️ GitHub Integration

GitHub events trigger ForgeMT through a webhook via API Gateway, dynamically registering runners into the GitHub Runner Groups associated with the tenant. The runner lifecycle is ephemeral: runners are registered just in time for job execution and destroyed once the job completes. When the ForgeMT GitHub App is installed on a new repository, that repository is automatically registered with the correct GitHub Runner Group, so its jobs land on the right tenant's runners.

🔌 Extensibility

Each tenant account can optionally manage the following resources:

- Tenant AMIs (for AWS EC2 runners): Custom-built images with pre-installed tooling tailored to the tenant's specific requirements.

- Tenant ECR: Houses custom container images used for VM-based container jobs, GitHub composite actions, or full pod images in EKS.

- Tenant IAM Role: Configured with trust relationships to allow ForgeMT runners to securely assume roles without the need for AWS access keys.

ForgeMT lets teams customize their runners to fit their specific needs. If a tenant requires a custom Amazon Machine Image (AMI) or container image, building and maintaining it is the tenant's responsibility. We provide a base image as a starting point, but the final configuration is under their control. Once the custom image is ready, it can be shared with our accounts and integrated into the ForgeMT platform.

🔄 Optional Configurations

Tenants can choose to configure the following based on their specific needs:

- Accessing AWS Resources via Runners: To enable runners to interact with AWS services within the tenant's account, an IAM role must be established with a trust relationship permitting ForgeMT to assume it.

- Pulling Images from Tenant ECR: If runners need to pull images from the tenant's ECR—be it for container jobs, composite actions, or Kubernetes pods—the tenant must configure appropriate repository policies and IAM permissions to allow these operations.

- Accessing Additional Tenant Resources: For runners to access other AWS resources within the tenant's account, the IAM role assumed by ForgeMT must have policies granting the necessary permissions. This might involve setting up a chain of role assumptions or defining specific resource-based policies.
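The tenant-side setup described above could be sketched as a CloudFormation template like the one below. This is an assumption-laden example, not ForgeMT's own tooling: tenants may just as well use Terraform or OpenTofu, and the role name, repository name, and the ForgeMT runner role ARN are placeholders to be replaced with real values.

```yaml
# Sketch only: deployed in the tenant's AWS account.
AWSTemplateFormatVersion: "2010-09-09"
Resources:
  ForgeRunnerAccessRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: forge-runner-access            # hypothetical role name
      # Trust policy: allow the ForgeMT runner role to assume this role.
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              AWS: "arn:aws:iam::<forge-account>:role/<forge-runner-role>"
            Action: sts:AssumeRole
      # Permissions: only what the tenant's jobs actually need.
      Policies:
        - PolicyName: list-stacks
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Action: cloudformation:ListStacks
                Resource: "*"

  TenantImages:
    Type: AWS::ECR::Repository
    Properties:
      RepositoryName: tenant-ci-images         # hypothetical repository name
      # Repository policy: let ForgeMT runners pull images, nothing more.
      RepositoryPolicyText:
        Version: "2012-10-17"
        Statement:
          - Sid: AllowForgeRunnerPull
            Effect: Allow
            Principal:
              AWS: "arn:aws:iam::<forge-account>:role/<forge-runner-role>"
            Action:
              - ecr:BatchGetImage
              - ecr:GetDownloadUrlForLayer
              - ecr:BatchCheckLayerAvailability
```

Keeping the permissions policy narrow matters here, because the trust policy only controls who may assume the role, while the attached policies decide what a runner can do once it has.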

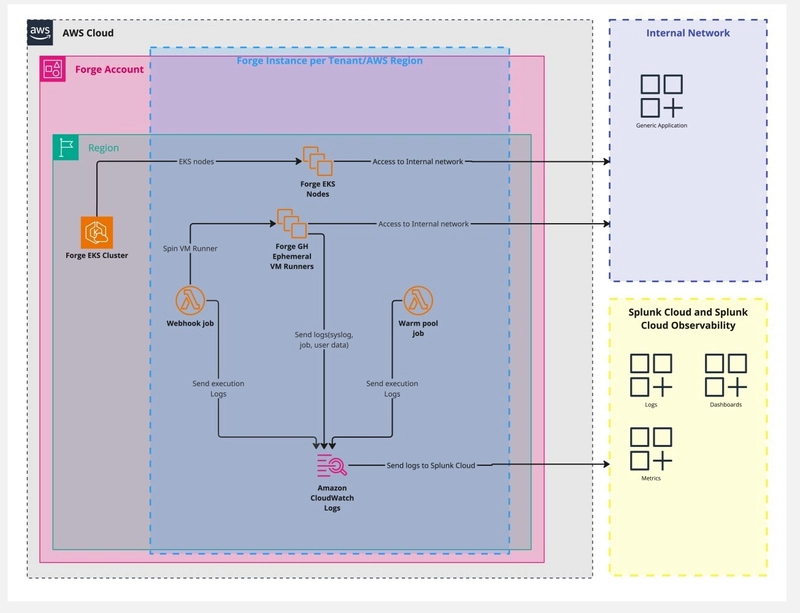

📊 Observability: Splunk Cloud & O11y

ForgeMT delivers full-stack observability with centralized logging and per-tenant metrics:

- Centralized logging: All relevant logs — syslog, AWS EC2 user data, GitHub runner job logs, worker logs, and agent logs — are sent to CloudWatch Logs and forwarded to Splunk Cloud for full visibility and auditability.

- Metrics via Splunk O11y: AWS metrics from ForgeMT components are sent to Splunk O11y Cloud for real-time telemetry.

- Per-tenant dashboards: Each team gets dedicated dashboards showing cost breakdowns, resource usage, and optimization insights (e.g., high-memory job detection).

🔐 Security Model

- Strong tenant isolation: Every tenant has its own IAM roles, namespaces, and resources.

- IAM Role Assumption: Eliminates use of long-lived AWS credentials.

- No cross-tenant visibility: Runners cannot access other tenant workloads or secrets.

- Fine-grained access control: Each tenant defines what their runners can access by configuring the IAM role being assumed—this can include direct resource access or chained role assumptions for more advanced patterns.

- 🔒 Ephemeral Isolation: ForgeMT runners are automatically destroyed after every job — success or failure. This guarantees a clean slate every time, eliminates environment drift, blocks credential persistence, and prevents resource leaks by default.

🛡️ Compliance & Observability

ForgeMT ensures strict compliance and security throughout the lifecycle of its ephemeral runners, from provisioning to execution and shutdown.

- Full Audit Trail: Every runner lifecycle event — including provisioning, execution, and shutdown — is logged, ensuring complete visibility and traceability for compliance audits. This audit trail is vital for maintaining transparency in high-security environments.

- CloudWatch → Splunk Integration: Logs from the runners are forwarded from CloudWatch to Splunk, enabling teams to perform real-time queries on logs. This integration supports compliance audits by providing detailed, queryable logs that can be easily reviewed and accessed for regulatory requirements.

- IAM Integration: By using IAM (Identity and Access Management), ForgeMT eliminates the use of hardcoded credentials or AWS long-term access keys. This significantly reduces the risk of unauthorized access and enhances security by enforcing role-based access and temporary credentials that follow the principle of least privilege.

- Security Standards Compliance: ForgeMT meets internal security standards, which are aligned with industry best practices such as CIS Benchmarks. This ensures that the platform adheres to rigorous security controls and provides a secure environment for multi-tenant workloads.

🔍 Debugging Securely and Effectively

ForgeMT offers teams the option to choose between EC2 Spot Instances and On-Demand Instances, allowing for flexibility in cost optimization. While Spot Instances can provide significant cost savings, they come with the inherent risk that AWS may reclaim the instance at any time. Teams are responsible for evaluating this risk and determining whether to use Spot or On-Demand Instances based on the criticality of their workloads.

Given ForgeMT's design of ephemeral runners, which are terminated immediately after each job to prevent state persistence and credential leakage, debugging presents unique challenges. However, the platform offers robust solutions to address these challenges.

For real-time debugging, developers can access running jobs via Teleport. By including a sleep step in the workflow or using a custom wrapper, the runner can be kept alive temporarily. This allows for manual inspection and troubleshooting while the job is still running.
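A minimal sketch of the sleep-step approach, assuming a hypothetical runner label and a `make integration-test` target that exists in the repository:

```yaml
jobs:
  integration:
    runs-on: [self-hosted, forge-ec2-standard]   # hypothetical label
    timeout-minutes: 60                          # hard stop so a held runner cannot linger
    steps:
      - uses: actions/checkout@v4
      - run: make integration-test
      # Keep the runner alive for 30 minutes only when an earlier step failed,
      # leaving time to connect through Teleport and inspect the environment.
      - name: Hold runner for debugging
        if: ${{ failure() }}
        run: sleep 1800
```

The hold step is best treated as temporary and removed once the investigation is done, since it keeps a runner occupied for the full sleep duration on every failure.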

Additionally, even without live access, ForgeMT maintains comprehensive observability. Teams can rely on syslogs, GitHub Actions job logs, and runner-level telemetry to understand job behavior. Every job runs in a fully reproducible environment, meaning developers can simply rerun failed jobs through the GitHub UI, replicating the exact conditions without side effects while maintaining full auditability.

For Kubernetes-based runners, the same debugging approach applies: Teleport can be used for live access to running jobs. The integration with Kubernetes allows teams to extend the same debugging capabilities while leveraging the scalability and flexibility of the containerized environment.

🚀 ForgeMT: Powering Tenants with Flexibility and Control

- 💥 Ephemeral by design — Runners are created per job and disappear afterward. No drift. No patching. No residual garbage.

- 🛠️ Infra-as-Code from top to bottom — Fully automated. Declarative. Version-controlled. No snowflakes.

- 🔐 Strong isolation baked in — IAM, OIDC, and security group segmentation per tenant. No cross-tenant blast radius.

- 📦 Run anything, per tenant — EC2 or EKS. k8s, dind, or metal. Each tenant defines their own mix.

- 🚦 Control usage at scale — Enforce parallelism limits per tenant/type. No surprises. No abuse.

- 🕹️ Custom policies, zero effort — Tenants define autoscaling, labels, and configurations via GitHub — no AWS skills required.

- 🧘 No infra for tenants to manage — No patching, no VPCs, no accounts. Just push code.

- 🕵️ Observability without ownership — Logs, metrics, and traces exposed per tenant. No nodes to babysit.

- ⚡ Fast time-to-first-run — Cold starts optimized. Most runners boot in <20s, even for large jobs.

- 🌎 Network-aware provisioning — Runners automatically deploy into the correct subnet, zone, or region.

- 📊 Usage-aware scaling — Instance types are selected based on cost/performance tradeoffs — no more overprovisioning by default.

- 🧩 GitHub-native workflows — No toolchain rewrites required. Just drop in the runs-on labels and go.

- 🚫 No global queues — Each tenant is scoped, isolated, and throttled independently.

🛠️ Implementation & Adoption

It took about 2 months to evolve from a single-tenant, EC2-only setup into a fully multi-tenant platform. Highlights:

- 🔹 Kubernetes support, with Calico CNI + Karpenter

- 🔹 Tenant isolation by design

- 🔹 Per-tenant automation & base images

- 🔹 EKS pod identity for secure access

- 🔹 Integrated with Teleport, Splunk, and full observability

- 🔹 Custom dashboards with enriched telemetry

🚀 Frictionless Adoption

Onboarding was dead simple.

For most tenants, switching to ForgeMT meant updating just the runs-on label in their GitHub Actions workflows — ⚡ No rewrites. No migrations. No downtime.

For teams that required deeper isolation, assuming their own IAM role was just as straightforward:

    - name: Configure AWS Credentials
      uses: aws-actions/configure-aws-credentials@v4
      with:
        role-to-assume: arn:aws:iam::<tenant-account>:role/<role-name>
        aws-region: <aws-region>
        role-duration-seconds: 900
    - name: Example
      run: aws cloudformation list-stacks

💡 This approach made adoption fast, safe, and low-friction — even for teams skeptical of platform changes.

🚫 Overkill Warning: When ForgeMT Is Too Much

If you're a small team, ForgeMT might be overkill. Start with the basics: ephemeral runners (EC2 or ARC), GitHub Actions, and Terraform automation. Scale up only when you hit real pain. ForgeMT shines in multi-team setups where governance, tenant isolation, and platform automation matter. For solo teams, it may just add complexity you don’t need.

🔭 What’s Next

I’m currently focused on:

- Cost-aware scheduling — Prioritizing jobs based on real-time pricing and instance efficiency, optimizing for performance while reducing costs.

- Dynamic autoscaling — Moving from static warm pool rules to a more responsive, metrics-driven approach that adapts to the bursty nature of GitHub Actions workloads.

- Deeper observability — Integrating GitHub metrics for actionable insights that drive optimized runner performance.

- AI-driven scaling optimization — Leveraging historical data to predict workload demands, optimize resource allocation, and automate scaling decisions based on both performance and cost metrics.

If you’re tackling similar problems — or looking to adopt, extend, or contribute to ForgeMT — let’s talk. I’m always open to collaborating with engineers building serious DevSecOps infrastructure.

🧪 Dive Into the ForgeMT Project

Ideas are cheap — execution is everything. The ForgeMT source code is now publicly available — check it out:

👉 https://github.com/cisco-open/forge/

⭐️ Don’t forget to give it a star ;)!

✍️ In Short

ForgeMT emerged from real-world CI pain at enterprise scale. What began as a prototype to fix local inefficiencies has grown into a secure, multi-tenant, production-grade runner platform. I’m sharing this so others can skip the trial-and-error and build smarter from the start.

🤝 Connect

Let’s connect on LinkedIn and GitHub.

Always happy to trade notes with like-minded builders.

This article was originally published on LinkedIn.
