I'm a .NET solution architect, AI enthusiast, and... yes, a vibe coder.

It feels like just 10 years ago the big topic was breaking monoliths into microservices. Now it's all about multi-agent frameworks, and .NET is definitely far behind Python and TypeScript here.

The Good

.NET is a strong choice for enterprise development, enabling the creation of big, scalable, fault-tolerant, and distributed real-time applications.

The Bad

Semantic Kernel, the most popular AI framework in .NET, focuses on adding AI capabilities to new applications. It is designed for building AI-first experiences rather than for serving as the foundation of traditional enterprise systems such as ERP or broker platforms.

The Ugly

I want something similar to LangGraph: a combination of traditional bytecode infrastructure and AI integrations.

By "traditional bytecode," I mean the foundational elements like routing, messaging, recovery, observability, concurrency, isolation, ACID, timers and scheduling, state management, and all the workflow logic required around an application.

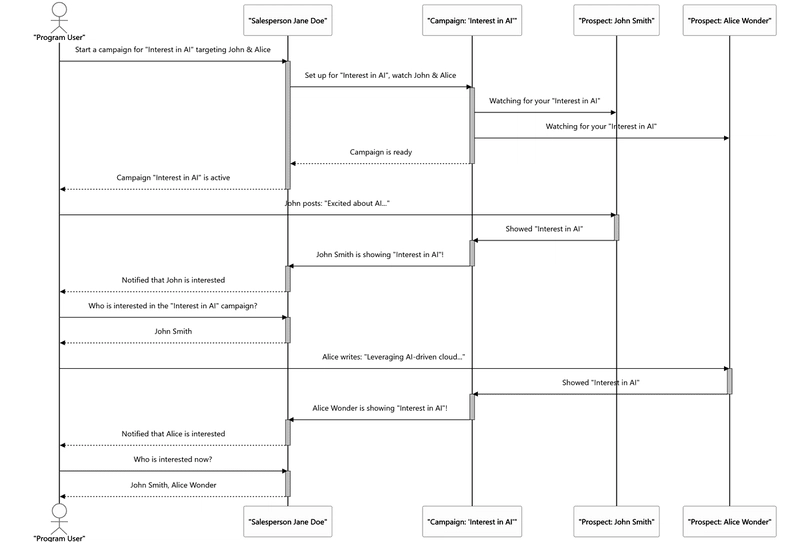

In our case, an Actor is an AI agent encapsulated within this bytecode layer. It's distributed and runs across multiple processes on a network.

Tech Stack

- .NET 9, C#

- Microsoft.Extensions.AI

- Microsoft Orleans

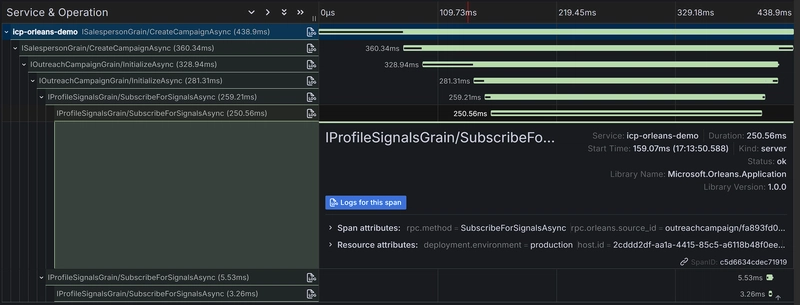

- Grafana and OpenTelemetry

Why Microsoft Orleans

Microsoft Orleans is a cloud-native framework based on the virtual actor model. In Orleans, each actor (called a grain) is identified by a stable key and is always "virtually" available. Grains are activated on demand and automatically garbage-collected when idle. This means developers write code as if all actors are in memory, while the Orleans runtime transparently handles activation, placement, and recovery.
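The activation lifecycle can be illustrated with a toy model in plain C#. This is only a sketch of the idea, not how Orleans is implemented: the in-process dictionary stands in for Orleans's distributed grain directory, and `Send`, `CollectIdle`, and the 15-minute idle timeout are hypothetical. Callers only ever name a key; whether an activation already exists is the runtime's problem.

```csharp
using System;
using System.Collections.Generic;

// Toy model of virtual-actor activation, NOT Orleans internals.
// Callers address an actor only by its key; the "directory" creates an activation
// on first use and drops activations that stay idle longer than idleTimeout.
var activations = new Dictionary<long, (int Calls, DateTime LastUsed)>();
var idleTimeout = TimeSpan.FromMinutes(15); // arbitrary value for the sketch

// Route a message to the actor with this key, activating it on demand.
// Returns how many messages the current activation has handled.
int Send(long key, DateTime now)
{
    int calls = activations.TryGetValue(key, out var activation) ? activation.Calls : 0;
    activations[key] = (calls + 1, now);
    return calls + 1;
}

// "Garbage-collect" activations idle for longer than idleTimeout.
// Their in-memory state is discarded; the next Send re-activates them from scratch.
int CollectIdle(DateTime now)
{
    var idle = new List<long>();
    foreach (var (key, activation) in activations)
    {
        if (now - activation.LastUsed > idleTimeout)
            idle.Add(key);
    }
    foreach (long key in idle)
        activations.Remove(key);
    return idle.Count;
}
```

In this model, two messages to key 12345 hit the same activation; after a long enough idle gap `CollectIdle` drops it, and the next message silently creates a fresh one with empty in-memory state. That is why state that must survive deactivation belongs in Orleans grain storage rather than in plain fields.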

Grains encapsulate their own state and behavior, enabling intuitive modeling of business entities (customers, accounts, orders, etc.) as long-lived objects.

Orleans was designed for massive scale. By default, grains automatically partition application state and logic, letting the system scale out simply by adding silos (server hosts).

Put simply, a "refund agent for user 12345" in a support chat is a grain, and we can have millions of them without writing our own routing or partitioning code.

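```csharp
using System.Threading.Tasks;
using Orleans;

// IGrainWithIntegerKey: each refund agent is identified by a numeric key such as a user ID.
public interface IRefundGrain : IGrainWithIntegerKey
{
    Task Refund(decimal amount, string currency);
}

public class RefundGrain : Grain, IRefundGrain
{
    public Task Refund(decimal amount, string currency)
    {
        // The state is loaded; all you need to do is call an LLM.
        return Task.CompletedTask;
    }
}
```

```csharp
// No DB calls, no API calls, no routes, simply like that.
// `client` is an IClusterClient (or the IGrainFactory available inside a grain).
var refundGrain = client.GetGrain<IRefundGrain>(12345);
await refundGrain.Refund(100, "USD");
```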
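A slightly fuller version of the refund agent shows what "the state is loaded" means in practice. This is a sketch assuming Orleans 7 or later, a default grain storage provider (for example `AddMemoryGrainStorageAsDefault()`), and an `IChatClient` registered in the silo's services; `RefundState`, `IRefundAgentGrain`, `RefundAgentGrain`, and the prompt wording are hypothetical, not the author's code.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Extensions.AI;
using Orleans;
using Orleans.Runtime;

// Hypothetical persisted state for one refund agent.
[GenerateSerializer]
public class RefundState
{
    [Id(0)] public List<string> History { get; set; } = new();
}

public interface IRefundAgentGrain : IGrainWithIntegerKey
{
    Task<string> Refund(decimal amount, string currency);
}

public class RefundAgentGrain(
    // Stored under the name "refund" in the default grain storage provider.
    [PersistentState("refund")] IPersistentState<RefundState> state,
    IChatClient chatClient) : Grain, IRefundAgentGrain
{
    public async Task<string> Refund(decimal amount, string currency)
    {
        // Orleans has already read state.State from storage when this grain was activated.
        long userId = this.GetPrimaryKeyLong();
        string prompt = $"User {userId} requests a refund of {amount} {currency}. " +
                        $"Previous requests: {string.Join("; ", state.State.History)}. " +
                        "Reply with a short decision and reason.";

        ChatResponse response = await chatClient.GetResponseAsync(prompt);

        state.State.History.Add($"{amount} {currency}: {response.Text}");
        await state.WriteStateAsync(); // persist through the configured storage provider
        return response.Text;
    }
}
```

Orleans grains are non-reentrant by default, so each activation handles one request at a time and the read-modify-write of `state.State` needs no locking.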

Microsoft.Extensions.AI

The Microsoft.Extensions.AI libraries provide a unified, consistent way to integrate with various generative AI services. They offer core abstractions such as IChatClient and IEmbeddingGenerator that simplify integration and promote portability, and they make it easy to add features such as telemetry and caching through dependency injection and middleware patterns.

Similar to LangChain, it abstracts away provider details, so application code doesn't depend on whether OpenAI or Ollama is behind it.

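```csharp
// ChatResponse<T>.Result holds the model's reply deserialized into ResponseModelType.
var response = await _chatClient.GetResponseAsync<ResponseModelType>(prompt);
ResponseModelType result = response.Result;
```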
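Because these abstractions are plain DI services, the telemetry and caching mentioned above are wired as middleware around whichever provider client you use. A sketch, assuming the Microsoft.Extensions.AI and Microsoft.Extensions.Caching.Memory packages; the `AddRefundAi` extension method is hypothetical, and the provider-specific `innerClient` (OpenAI, Ollama, ...) is passed in rather than guessed at.

```csharp
using Microsoft.Extensions.AI;
using Microsoft.Extensions.DependencyInjection;

public static class AiServiceCollectionExtensions
{
    // Hypothetical helper: registers one IChatClient wrapped in caching and telemetry middleware.
    // innerClient is any provider-specific IChatClient implementation.
    public static IServiceCollection AddRefundAi(this IServiceCollection services, IChatClient innerClient)
    {
        // In-memory IDistributedCache that UseDistributedCache() resolves from DI.
        services.AddDistributedMemoryCache();

        services.AddChatClient(innerClient)
            .UseDistributedCache()  // reuse responses for identical requests
            .UseOpenTelemetry();    // emit OpenTelemetry traces and metrics per call

        return services;
    }
}
```

Grains and services then take `IChatClient` as a constructor dependency and never reference the provider type directly; switching from OpenAI to Ollama changes only the `innerClient` passed in at startup.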

Show me the code!

The primary goal of this application is to empower sales development representatives (SDRs) to reach out to prospects at the most opportune moment with highly relevant and personalized messaging, increasing the chances of engagement and conversion. Instead of cold outreach, it enables "warm" outreach based on real-time triggers.

Running a local cluster is super simple:

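```csharp
using Microsoft.Extensions.Hosting;
using Orleans.Hosting;

using var host = Host.CreateDefaultBuilder(args)
    .UseOrleans(siloBuilder =>
    {
        siloBuilder
            .UseLocalhostClustering()          // single-machine development cluster
            .AddMemoryGrainStorageAsDefault()  // grain state kept in memory (lost on restart)
            .AddActivityPropagation();         // carry tracing context across grain calls
    })
    .Build();

await host.RunAsync();
```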

From there, moving to Kubernetes (k8s) is also quite straightforward.
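A sketch of what that change might look like, assuming the Microsoft.Orleans.Hosting.Kubernetes and Microsoft.Orleans.Clustering.AdoNet packages plus a PostgreSQL database (via Npgsql) for the membership table. The connection string name "Orleans" is an assumption, the Kubernetes manifests are not shown, and ADO.NET is just one of several supported clustering providers.

```csharp
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.Hosting;
using Orleans.Hosting;

using var host = Host.CreateDefaultBuilder(args)
    .UseOrleans((context, siloBuilder) =>
    {
        // Replaces UseLocalhostClustering(): reads the pod name, namespace, and IP plus the
        // Orleans cluster and service IDs from environment variables set in the pod spec.
        siloBuilder.UseKubernetesHosting();

        // Silos still need a shared membership table. The Orleans ADO.NET schema scripts
        // must already be applied to this database.
        siloBuilder.UseAdoNetClustering(options =>
        {
            options.Invariant = "Npgsql";
            options.ConnectionString = context.Configuration.GetConnectionString("Orleans");
        });

        siloBuilder
            .AddMemoryGrainStorageAsDefault()  // demo only: swap for a persistent provider
            .AddActivityPropagation();
    })
    .Build();

await host.RunAsync();
```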

What I love about .NET is that it's perfect for day-two operations. The debugging, troubleshooting, and observability tools are tightly integrated and built for large enterprise products. Traces, metrics, and logs to different sinks: everything is just a couple of lines of configuration!
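For the Grafana and OpenTelemetry part of the stack, the wiring might look like this. It is a sketch assuming the OpenTelemetry.Extensions.Hosting and OpenTelemetry.Exporter.OpenTelemetryProtocol packages, exporting over OTLP to a collector that feeds Grafana (collector setup not shown). The Orleans meter and activity source names follow the Orleans observability documentation and are worth checking against your Orleans version.

```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;
using Microsoft.Extensions.Logging;
using OpenTelemetry.Logs;
using OpenTelemetry.Metrics;
using OpenTelemetry.Trace;
using Orleans.Hosting;

using var host = Host.CreateDefaultBuilder(args)
    .ConfigureServices(services =>
    {
        services.AddOpenTelemetry()
            .WithMetrics(metrics => metrics
                .AddMeter("Microsoft.Orleans")               // Orleans runtime metrics
                .AddOtlpExporter())
            .WithTracing(tracing => tracing
                .AddSource("Microsoft.Orleans.Runtime")      // Orleans activity sources;
                .AddSource("Microsoft.Orleans.Application")  // grain-call spans need AddActivityPropagation()
                .AddOtlpExporter());
    })
    .ConfigureLogging(logging =>
        logging.AddOpenTelemetry(options => options.AddOtlpExporter()))
    .UseOrleans(siloBuilder => siloBuilder
        .UseLocalhostClustering()
        .AddMemoryGrainStorageAsDefault()
        .AddActivityPropagation())
    .Build();

await host.RunAsync();
```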

Next Steps

I hope you now have an idea of what Orleans is and how it can be useful for building distributed applications.

Next time, we'll dive deeper into the AI implementation and explore using Orleans to build a chat application where each grain manages its own memory.
