TL;DR: In this post, we explore how to use Mastra AI — a TypeScript-native agent framework — with Couchbase Vector Search to build a production-ready, multi-agent RAG (Retrieval-Augmented Generation) blog-writing assistant.

2025 has marked a significant shift toward agent-based AI systems, and a large number of AI agent frameworks have been released in the past few months. One such framework is Mastra.

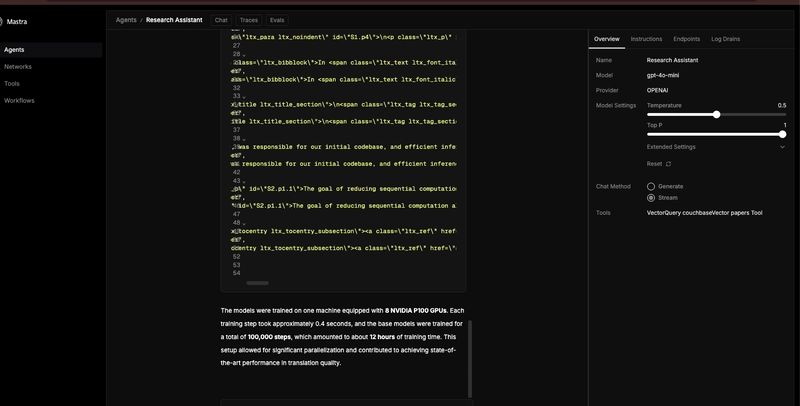

Mastra is an open-source TypeScript agent framework. You can use it to build AI agents that have memory and can call functions (tools), and to chain LLM calls in deterministic workflows. You can also give agents application-specific knowledge using RAG. Mastra also has built-in support for evals and observability, which makes it well suited to production use cases.

What are Mastra Workflows

Building robust AI applications keeps getting more complex. What starts as a single LLM call can quickly grow into many agents, prompts, and the coordination logic between them. This is where Mastra workflows come in: they bring structure, reliability, and developer-friendly patterns to building generative AI applications.

Mastra workflows are graph-based state machines that allow you to orchestrate complex sequences of AI operations. Workflows let you define discrete steps with clear inputs, outputs, and execution logic.

This functionality combines the flexibility of TypeScript with the structure of a workflow engine.
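To make the step model concrete, here is a toy, in-memory sketch of a sequential workflow in plain TypeScript. It is not Mastra's implementation and uses no Mastra APIs; `ToyWorkflow`, `ToyStep`, and `StepContext` are hypothetical names chosen to mirror the `step()`/`then()` chaining, the trigger data, and the `getStepResult()` lookup used later in this post.

```typescript
// Toy sketch only (not Mastra's engine): a sequential "workflow" where each
// step can read the trigger data and the outputs of earlier steps.
type StepContext = {
  triggerData: Record<string, unknown>;
  // Returns the output of an earlier step, or undefined if it has not run.
  getStepResult: <T>(stepId: string) => T | undefined;
};

type ToyStep = {
  id: string;
  execute: (args: { context: StepContext }) => Promise<unknown>;
};

class ToyWorkflow {
  private steps: ToyStep[] = [];

  // step() starts a new chain; then() appends the next step in order.
  step(s: ToyStep): this {
    this.steps = [s];
    return this;
  }

  then(s: ToyStep): this {
    this.steps.push(s);
    return this;
  }

  // Runs the steps one after another and returns every step's output by id.
  async run(triggerData: Record<string, unknown>): Promise<Map<string, unknown>> {
    const results = new Map<string, unknown>();
    const context: StepContext = {
      triggerData,
      getStepResult: <T>(stepId: string) => results.get(stepId) as T | undefined,
    };
    for (const s of this.steps) {
      results.set(s.id, await s.execute({ context }));
    }
    return results;
  }
}

// Usage: two steps, where the second consumes the first one's output.
const demo = new ToyWorkflow()
  .step({
    id: "outline",
    execute: async ({ context }) => ({
      outline: `Outline for ${String(context.triggerData.query)}`,
    }),
  })
  .then({
    id: "draft",
    execute: async ({ context }) => {
      const prev = context.getStepResult<{ outline: string }>("outline");
      if (!prev) throw new Error("outline step has not run");
      return { draft: `${prev.outline} -> draft` };
    },
  });
```

Running `demo.run({ query: "vector search" })` produces a `draft` result of `"Outline for vector search -> draft"`. The point of the design is that each step declares only its own logic and reads upstream results by id, which is the same shape the research and writing steps take further down.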

With workflows providing orchestration, the next crucial component is knowledge retrieval. That’s where Couchbase Vector Store fits in.

Couchbase Vector Store for Mastra

Mastra now natively supports Couchbase as a vector store for RAG workflows.

In Mastra, you can process your documents into chunks, create embeddings, store them in a vector database, and then retrieve relevant context at query time.
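Chunking is the first stage of that pipeline. Mastra ships its own document utilities (for example `MDocument` in `@mastra/rag`) with several chunking strategies; the sketch below is not that API. It is a hypothetical, dependency-free `chunkText` helper (plus a hypothetical `toRecords`) showing the simplest strategy, fixed-size character windows with overlap, so that text cut at a chunk boundary is partly repeated in the next chunk and keeps some of its context.

```typescript
// Hypothetical helpers, not a Mastra API: split text into windows of at most
// `size` characters, where consecutive windows share `overlap` characters.
function chunkText(text: string, size: number, overlap: number): string[] {
  if (!Number.isInteger(size) || size <= 0) {
    throw new Error("size must be a positive integer");
  }
  if (!Number.isInteger(overlap) || overlap < 0 || overlap >= size) {
    throw new Error("overlap must be an integer in [0, size)");
  }
  const chunks: string[] = [];
  const stride = size - overlap; // how far each window advances
  for (let start = 0; start < text.length; start += stride) {
    chunks.push(text.slice(start, start + size));
    if (start + size >= text.length) break; // this window already reaches the end
  }
  return chunks;
}

// Attach metadata so each chunk can be traced back to its source after retrieval.
type ChunkRecord = { id: string; docId: string; index: number; text: string };

function toRecords(docId: string, chunks: string[]): ChunkRecord[] {
  return chunks.map((text, index) => ({ id: `${docId}-${index}`, docId, index, text }));
}
```

For example, `chunkText("abcdefghij", 4, 1)` returns `["abcd", "defg", "ghij"]`: each window advances by 3 characters, so "d" and "g" each appear in two chunks. Production pipelines usually split on tokens, sentences, or document structure rather than raw characters, but the size/overlap trade-off is the same.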

Setting up the Couchbase vector store is relatively simple:

```typescript
import { CouchbaseVector } from "@mastra/couchbase";

// Initialize the Couchbase connection (credentials come from environment variables)
const couchbaseStore = new CouchbaseVector({
  connectionString: process.env.CB_CONNECTION_STRING,
  username: process.env.CB_USERNAME,
  password: process.env.CB_PASSWORD,
  bucket: process.env.CB_BUCKET,
  scope: process.env.CB_SCOPE,
  collection: process.env.CB_COLLECTION,
});

// Create the vector search index
await couchbaseStore.createIndex({
  indexName: "research_embeddings", // name of the Couchbase Vector Search index
  dimension: 1536, // must match the embedding model (text-embedding-3-small outputs 1536 dimensions)
  metric: "cosine", // similarity metric used to compare vectors
});
```

Note: When setting up vector search in Couchbase, you can optimize the index for either recall (accuracy) or latency, depending on your application's needs. Couchbase Vector Search supports vectors with up to 4096 dimensions, which covers most modern embedding models; make sure the dimension you pass to createIndex matches your embedding model's output size.
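The index above is configured with a fixed dimension of 1536 and cosine similarity. As a plain-TypeScript sketch of what those two settings mean (not Couchbase's implementation), the hypothetical helpers below check that an embedding has the dimension the index expects, within the 4096 limit mentioned in the note, and compute the cosine similarity of two vectors: 1 for vectors pointing the same way, 0 for orthogonal ones, and -1 for opposite ones.

```typescript
const INDEX_DIMENSION = 1536; // matches `dimension` in the createIndex() call
const MAX_DIMENSION = 4096; // upper bound from the note above

// Hypothetical guard: throws if an embedding does not fit the target index.
function assertDimension(vector: number[], expected: number = INDEX_DIMENSION): void {
  if (expected < 1 || expected > MAX_DIMENSION) {
    throw new Error(`index dimension must be between 1 and ${MAX_DIMENSION}`);
  }
  if (vector.length !== expected) {
    throw new Error(`expected ${expected} dimensions, got ${vector.length}`);
  }
}

// Cosine similarity: dot(a, b) / (|a| * |b|), always in the range [-1, 1].
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) throw new Error("cosine is undefined for zero vectors");
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}
```

Because cosine similarity ignores vector length, scaling an embedding does not change its score: `cosineSimilarity([1, 2], [2, 4])` is 1 up to floating-point rounding.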

Implementing a Multi-Agent Research-Writer Workflow using Mastra AI and Couchbase

The foundation of this example is a multi-agent architecture in which the user initially provides a search query. Based on this, the Researcher Agent performs RAG, leveraging Couchbase vector search to retrieve relevant documents. Then, the Writer Agent transforms the research output into polished content.

You can check out the GitHub repo for this project here.

Creating a Research Agent

The research agent takes an input query from the user and retrieves relevant information using RAG:

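```typescript
import { Agent } from "@mastra/core/agent";
import { openai } from "@ai-sdk/openai";
import { createVectorQueryTool } from "@mastra/rag";

// Exported so the workflow file can import it
export const researchAgent = new Agent({
  name: 'Research Agent',
  instructions: `You are a research assistant that analyzes academic papers.
Find relevant information using vector search and provide accurate answers.`,
  model: openai('gpt-4o-mini'),
  tools: {
    vectorQueryTool: createVectorQueryTool({
      vectorStoreName: 'couchbaseStore', // name the store is registered under in the Mastra instance
      indexName: 'research_embeddings', // the index created above
      model: openai.embedding('text-embedding-3-small'), // 1536-dim embeddings, matching the index
    }),
  },
});
```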

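This agent uses OpenAI's GPT-4o-mini model and is equipped with a vector query tool that lets it search the embedded documents stored in Couchbase. The tool looks up the vector store by name, so the Couchbase store must be registered as `couchbaseStore` in your Mastra instance (through its `vectors` option), and the query embedding model must produce vectors with the same dimension as the index.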
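Conceptually, each time the agent calls the vector query tool, the query text is embedded with the configured embedding model, the closest stored vectors are retrieved, and their text is handed back to the model as context. The sketch below models only the retrieval part, with an in-memory array and a linear scan; Couchbase answers the same question through its vector index instead. `StoredChunk`, `Hit`, `normalize`, `topK`, and `buildContext` are hypothetical names, and stored vectors are assumed to be normalized to unit length at insert time, so a plain dot product equals cosine similarity.

```typescript
// Hypothetical in-memory stand-in for a vector index. Stored vectors are
// unit-length, so the dot product with a unit query equals cosine similarity.
type StoredChunk = { id: string; text: string; vector: number[] };
type Hit = { id: string; text: string; score: number };

function normalize(v: number[]): number[] {
  const norm = Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
  if (norm === 0) throw new Error("cannot normalize a zero vector");
  return v.map((x) => x / norm);
}

// Exact top-k search by linear scan (a real vector index avoids scanning everything).
function topK(store: StoredChunk[], query: number[], k: number): Hit[] {
  const q = normalize(query);
  return store
    .map((chunk) => {
      if (chunk.vector.length !== q.length) throw new Error(`dimension mismatch for ${chunk.id}`);
      const score = chunk.vector.reduce((sum, x, i) => sum + x * q[i], 0);
      return { id: chunk.id, text: chunk.text, score };
    })
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
}

// Formats the hits as numbered context lines that a prompt can cite.
function buildContext(hits: Hit[]): string {
  return hits.map((hit, i) => `[${i + 1}] ${hit.text}`).join("\n");
}
```

Normalizing once at insert time is a common design choice: it turns every comparison at query time into a single dot product.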

Creating a Writer Agent

The writer agent takes the research output and transforms it into polished content:

```typescript
import { Agent } from "@mastra/core/agent";
import { openai } from "@ai-sdk/openai";

// The writer has no tools: it works only from the research passed in its prompt
export const writerAgent = new Agent({
  name: "Writer Assistant",
  instructions: `You are a professional blog writer that creates engaging content.
Your task is to write a well-structured blog post based on the research provided.
Focus on creating high-quality, informative, and engaging content.
Make sure to maintain a clear narrative flow and use the research effectively.
Focus on the specific content in the provided research and acknowledge if you cannot find sufficient information to answer a question.
Base your responses only on the content provided, not on general knowledge.`,
  model: openai("gpt-4o-mini"),
});
```

Creating a Writer Workflow using Mastra Workflows

As mentioned previously, Mastra’s workflow system provides a standardized way to define steps and link them together. In this example, we create a workflow that connects the Researcher and Writer Agents in a sequential process.

```typescript
import { Step, Workflow } from "@mastra/core/workflows";
import { z } from "zod";
import { researchAgent } from "../agents/researchAgent";
import { writerAgent } from "../agents/writerAgent";

// Define the research step: reads the query from the workflow's trigger data
const researchStep = new Step({
  id: "researchStep",
  execute: async ({ context }) => {
    if (!context?.triggerData?.query) {
      throw new Error("Query not found in trigger data");
    }
    const result = await researchAgent.generate(
      `Research information for a blog post about: ${context.triggerData.query}`
    );
    console.log("Research result:", result.text);
    return {
      research: result.text,
    };
  },
});

// Define the writing step: reads the research step's output
const writingStep = new Step({
  id: "writingStep",
  execute: async ({ context }) => {
    const research = context?.getStepResult<{ research: string }>("researchStep")?.research;
    if (!research) {
      throw new Error("Research not found from previous step");
    }
    const result = await writerAgent.generate(
      `Write a blog post using this research: ${research}. Focus on the specific content in the research and acknowledge if you cannot find sufficient information to answer a question.
Base your responses only on the content provided, not on general knowledge.`
    );
    console.log("Writing result:", result.text);
    return {
      blogPost: result.text,
      research: research,
    };
  },
});

// Create and configure the workflow; triggerSchema validates the input
export const blogWorkflow = new Workflow({
  name: "blog-workflow",
  triggerSchema: z.object({
    query: z.string().describe("The topic to research and write about"),
  }),
});

// Run steps sequentially, then commit the workflow definition
blogWorkflow.step(researchStep).then(writingStep).commit();
```

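This code defines a workflow with two steps: research, then writing. Data flows between the steps: the writing step reads the research step's output with getStepResult("researchStep") and passes it to the writer agent, so the research output feeds directly into the writing process.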
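Because the two steps only call `generate()` on an agent and read `.text` from the result, the hand-off from research to writing can be exercised without an LLM or a database. The sketch below pulls the step bodies into plain functions and drives them with stub agents. `TextAgent`, `makeStubAgent`, `runResearch`, and `runWriting` are hypothetical names for illustration, not Mastra APIs, and the prompts are shortened versions of the ones above.

```typescript
// Hypothetical minimal agent shape: the only thing the steps above rely on.
interface TextAgent {
  generate(prompt: string): Promise<{ text: string }>;
}

// Stub agent that records every prompt and answers with a canned reply.
function makeStubAgent(reply: (prompt: string) => string): TextAgent & { prompts: string[] } {
  const prompts: string[] = [];
  return {
    prompts,
    async generate(prompt: string) {
      prompts.push(prompt);
      return { text: reply(prompt) };
    },
  };
}

// Mirrors researchStep: validate the query, then ask the research agent.
async function runResearch(agent: TextAgent, query?: string): Promise<{ research: string }> {
  if (!query) throw new Error("Query not found in trigger data");
  const result = await agent.generate(`Research information for a blog post about: ${query}`);
  return { research: result.text };
}

// Mirrors writingStep: require research, then ask the writer agent.
async function runWriting(
  agent: TextAgent,
  research?: string
): Promise<{ blogPost: string; research: string }> {
  if (!research) throw new Error("Research not found from previous step");
  const result = await agent.generate(`Write a blog post using this research: ${research}`);
  return { blogPost: result.text, research };
}
```

With stubs in place, you can check that whatever the researcher returns shows up verbatim in the writer's prompt, and that an empty research result stops the pipeline instead of producing an ungrounded post.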

Conclusion

The Couchbase Vector Store integration with Mastra AI lets you build scalable, production-ready AI agents by combining Mastra's agent orchestration with Couchbase's vector search.

As AI agent adoption grows, we can expect even more powerful AI systems that combine multiple specialized agents with knowledge retrieval.
