There’s a lot of noise right now around vibe coding.

People are generating entire apps off vibes, using LLMs to go from idea to code in minutes. And I’ll admit—it’s impressive. But having been in testing for over 20 years, I couldn’t help but notice something was missing.

The energy is real. The speed is addictive. But the stability? The reliability? Often nowhere in sight.

That’s where I come in.

Who I Am

I’ve been a tester most of my life. My job has always been to make sure products deliver on their promise—not just in ideal demos, but in real-world use.

I’ve seen every testing angle: automation, exploratory, performance, security, chaos. And when vibe coding entered the chat, I was curious.

I started building with it.

I started testing it.

And I started breaking it—easily.

The Problem with Raw Vibes

Vibe coding is missing a backbone. It’s missing intention. The AI can generate, but can it validate? Can it correct itself? Can it know when it’s broken?

Not unless we give it the tools to do so.

How I Made It Work

That’s where my tester mindset kicked in. I created a project called vibe-todo to prove that vibe coding can be viable—if it’s guided by structure and testing.

Here’s what made the difference:

- Hypothesis tests as self-correction checkpoints

- A .windsurfrules file that tells the AI how to behave

- Benchmarks with SLA enforcement (10ms max per op)

- Logs that are structured for LLMs to observe and react to

- A Grammatical Evolution engine that generates edge cases dynamically
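To make the benchmark item concrete, here is a minimal sketch of an SLA check. It assumes "10ms max per op" means the worst single call must stay under 10ms. The in-memory `add_task` is a hypothetical stand-in, not vibe-todo's actual code. The check times repeated calls with `time.perf_counter` and fails loudly when the slowest one exceeds the budget.

```python
import time

SLA_MS = 10.0  # assumed worst-case budget per operation ("10ms max per op")

# Hypothetical in-memory store standing in for vibe-todo's real task storage.
_tasks: dict[int, str] = {}


def add_task(title: str) -> int:
    """Illustrative operation under test: store a task and return its id."""
    task_id = len(_tasks) + 1
    _tasks[task_id] = title
    return task_id


def worst_case_ms(fn, *args, runs: int = 100) -> float:
    """Call fn(*args) `runs` times and return the slowest duration in ms."""
    worst = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args)
        worst = max(worst, (time.perf_counter() - start) * 1000)
    return worst


def assert_sla(fn, *args, budget_ms: float = SLA_MS, runs: int = 100) -> float:
    """Raise if any single call exceeds the budget; otherwise return the worst case."""
    worst = worst_case_ms(fn, *args, runs=runs)
    if worst > budget_ms:
        raise AssertionError(
            f"{fn.__name__}: worst case {worst:.2f}ms exceeds SLA of {budget_ms}ms"
        )
    return worst


if __name__ == "__main__":
    worst = assert_sla(add_task, "write the blog post")
    print(f"add_task worst case: {worst:.3f}ms (SLA {SLA_MS}ms)")
```

Checking the worst case rather than the mean is a deliberate choice here. A per-op SLA is about the slowest call a user might hit, and an average can hide an occasional spike.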

Yes, I even wired in a GE-based evolutionary testing suite using DEAP to evolve inputs over time. This thing will literally mutate payloads to find failures your unit tests missed.
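Here is a rough, stdlib-only sketch of the grammatical evolution idea. The original suite uses DEAP, but this version swaps in a hand-rolled loop so it runs without dependencies. The grammar, the buggy `naive_is_valid_title` validator, and all parameters are hypothetical. Each genome is a list of integer codons, and standard GE mapping turns it into a payload by repeatedly expanding the leftmost non-terminal. Fitness rewards payloads that make the validator disagree with an oracle. Whitespace-heavy payloads get partial credit to steer the search.

```python
import random

# Toy BNF-style grammar for task-title payloads (hypothetical, not vibe-todo's).
GRAMMAR = {
    "<payload>": [["<title>"], ["<ws>", "<title>", "<ws>"], ["<ws>", "<ws>"]],
    "<title>": [["<char>"], ["<char>", "<title>"]],
    "<char>": [["a"], ["Z"], ["7"], ["é"], ["<ws>"]],
    "<ws>": [[""], [" "], ["\t"], ["\n"]],
}


def map_genome(genome, start="<payload>", max_wraps=2):
    """GE genotype-to-phenotype mapping: expand the leftmost non-terminal,
    choosing production codon % number_of_choices. Returns None if the
    codons run out after max_wraps re-reads of the genome."""
    if not genome:
        return None
    symbols, out = [start], []
    i = wraps = 0
    while symbols:
        sym = symbols.pop(0)
        if sym not in GRAMMAR:
            out.append(sym)  # terminal: emit as-is
            continue
        if i == len(genome):  # wrap around and re-read the genome
            i, wraps = 0, wraps + 1
            if wraps > max_wraps:
                return None
        choices = GRAMMAR[sym]
        symbols = list(choices[genome[i] % len(choices)]) + symbols
        i += 1
    return "".join(out)


def naive_is_valid_title(title):
    """Hypothetical generated validator: only rejects the exact empty string."""
    return title != ""


def is_failure(payload):
    """Oracle: a valid title must contain non-whitespace text."""
    return naive_is_valid_title(payload) != bool(payload.strip())


def fitness(genome):
    payload = map_genome(genome)
    if payload is None:
        return -1.0  # invalid individual
    if is_failure(payload):
        return 1.0  # found a bug-triggering input
    if not payload:
        return 0.0
    # Partial credit for whitespace-heavy payloads steers the search.
    return 0.9 * sum(c.isspace() for c in payload) / len(payload)


def evolve(pop_size=30, genome_len=12, generations=40, mutation_rate=0.1, seed=0):
    """Tournament selection, one-point crossover, per-codon random mutation."""
    rng = random.Random(seed)
    pop = [[rng.randrange(256) for _ in range(genome_len)] for _ in range(pop_size)]
    found = set()
    for _ in range(generations):
        scored = [(fitness(g), g) for g in pop]
        found.update(map_genome(g) for s, g in scored if s == 1.0)

        def pick():
            a, b = rng.sample(scored, 2)  # binary tournament
            return a[1] if a[0] >= b[0] else b[1]

        next_pop = []
        while len(next_pop) < pop_size:
            p1, p2 = pick(), pick()
            cut = rng.randrange(1, genome_len)
            child = p1[:cut] + p2[cut:]
            next_pop.append(
                [rng.randrange(256) if rng.random() < mutation_rate else c for c in child]
            )
        pop = next_pop
    return sorted(found, key=repr)


if __name__ == "__main__":
    for payload in evolve():
        print(f"validator accepted {payload!r}")
```

In this toy setup, every input the search reports is a non-empty, whitespace-only title. That is exactly the class of input a validator that only checks for "" lets through.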

And just like that—LLM code went from vibes to validated.

Real Talk: What Happens Without Tests?

Let me give you an example.

The LLM once generated a beautiful CRUD API. Looked perfect.

Until I ran it.

POST requests with empty strings didn’t trigger validation. Worse—no unit test existed to catch it. I only saw the issue when Hypothesis tests started failing fast.

This wasn’t an edge case. This was a gap.

Without testing, LLMs hallucinate correctness.
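The check that would have caught this is a property, not a single example: a title should be accepted if and only if it contains non-whitespace text. The sketch below uses a stdlib random generator as a stand-in for Hypothesis. `create_task` is a hypothetical handler that includes the validation the generated API was missing. With Hypothesis installed, the generator loop would collapse into a test decorated with `@given(st.text())`.

```python
import random
import string


class ValidationError(ValueError):
    """Raised when a task payload is rejected."""


def create_task(payload: dict) -> dict:
    """Hypothetical POST /tasks handler, with the validation the LLM left out."""
    title = payload.get("title")
    if not isinstance(title, str) or not title.strip():
        raise ValidationError("title must be a non-empty string")
    return {"id": 1, "title": title.strip()}


# Hand-picked edge cases mixed into the random inputs, loosely like a strategy.
EDGE_CASES = ["", " ", "\t", "\n", "  \n\t "]


def random_title(rng: random.Random) -> str:
    if rng.random() < 0.3:
        return rng.choice(EDGE_CASES)
    alphabet = string.ascii_letters + string.digits + " \t\n"
    return "".join(rng.choice(alphabet) for _ in range(rng.randint(0, 20)))


def check_title_property(examples: int = 500, seed: int = 1234) -> None:
    """Property: a title is accepted iff it contains non-whitespace text."""
    rng = random.Random(seed)
    for _ in range(examples):
        title = random_title(rng)
        try:
            create_task({"title": title})
            accepted = True
        except ValidationError:
            accepted = False
        if accepted != bool(title.strip()):
            raise AssertionError(f"wrong decision for title={title!r}")


if __name__ == "__main__":
    check_title_property()
    print("title property held for 500 generated examples")
```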

Structured Logs = Observability for LLMs

I output structured JSON logs with every operation:

{
  "operation": "add_task",
  "duration_ms": 8,
  "sla_pass": true,
  "timestamp": 1713040000.01
}

This lets Windsurf agents watch the output, check SLA compliance, and even suggest fixes based on violation patterns.
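Here is a minimal sketch of both sides of that loop, assuming logs are emitted as one JSON object per line. `timed_op` is a hypothetical helper that wraps an operation and emits a record with the same fields as the example above. `sla_violations` shows the kind of aggregation an observing agent could run to spot violation patterns. The 10ms budget matches the per-op SLA from the benchmarks.

```python
import json
import time
from collections import Counter

SLA_MS = 10.0  # assumed per-operation budget


def timed_op(name, fn, *args, sink=print, budget_ms=SLA_MS):
    """Run fn(*args) and emit one JSON log line with operation, duration,
    SLA verdict, and a Unix timestamp."""
    start = time.perf_counter()
    result = fn(*args)
    duration_ms = (time.perf_counter() - start) * 1000
    sink(json.dumps({
        "operation": name,
        "duration_ms": round(duration_ms, 3),
        "sla_pass": duration_ms <= budget_ms,
        "timestamp": time.time(),
    }))
    return result


def sla_violations(lines):
    """Agent-side view: count SLA failures per operation across log lines."""
    counts = Counter()
    for line in lines:
        record = json.loads(line)
        if not record["sla_pass"]:
            counts[record["operation"]] += 1
    return counts


if __name__ == "__main__":
    logs = []
    timed_op("add_task", str.upper, "buy milk", sink=logs.append)
    timed_op("slow_search", time.sleep, 0.02, sink=logs.append)  # ~20ms, over budget
    print("\n".join(logs))
    print(sla_violations(logs))
```

Passing the sink as a parameter keeps the sketch testable. In real use it could just as well be a file handle's write method or a logging handler.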

It's like putting Grafana in your LLM’s head.

What Every Vibe Coder Should Add

You don’t need 20 years of testing experience to strengthen your vibes. Start here:

Minimal Testing Manifesto:

- .windsurfrules (your AI coding contract)

- Hypothesis tests for behavior validation

- Regression tests for known fail states

- Benchmark tests with SLA enforcement

- Grammatical Evolution (GE) for fuzzing + edge discovery

- Structured logs for agent feedback
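For the regression item, one simple pattern is to pin every input that has ever broken the code and replay it on each run. This is a sketch with `unittest`. The corpus and the fixed `is_valid_title` validator are illustrative assumptions, not taken from vibe-todo.

```python
import unittest

# Inputs that broke earlier versions, e.g. surfaced by property tests or GE runs.
# These specific values are illustrative, not a real project corpus.
KNOWN_BAD_TITLES = ["", " ", "\t\n", None]


def is_valid_title(title):
    """Hypothetical fixed validator under test."""
    return isinstance(title, str) and bool(title.strip())


class KnownFailStateRegressions(unittest.TestCase):
    def test_known_bad_titles_stay_rejected(self):
        for title in KNOWN_BAD_TITLES:
            with self.subTest(title=title):
                self.assertFalse(is_valid_title(title))

    def test_normal_title_still_accepted(self):
        self.assertTrue(is_valid_title("write blog post"))


# Run with: python -m unittest <this_file>.py
```

Whenever a fuzzer or property test finds a new failure, appending it to the corpus turns a one-off discovery into a permanent guardrail.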

If you’re doing even half of this—you’re ahead of the game.

My Ask to the Community

Don’t abandon vibe coding.

Just add intent.

Make your vibes testable. Add rules. Use benchmarks. Think like a tester.

And then? Let the LLM earn that green check.

Closing

I've tested software for decades. And I can tell you this:

Code that feels good isn’t always good.

But code that’s structured, tested, benchmarked—and still feels good?

That’s the future.

Vibe coding doesn’t need to be risky. It can be resilient.

It can be built with intent.

Built with vibes. Verified by tests. Ready for prod.

Top comments (4)

I think you're bringing up great ideas and improvements here. You're right: at the moment I have to validate the code manually, and even the generated (unit) tests. I don't trust the output, so most of the work has shifted to QA.

So your manifesto is very helpful, insightful and inspiring. I will definitely try to apply your ideas.

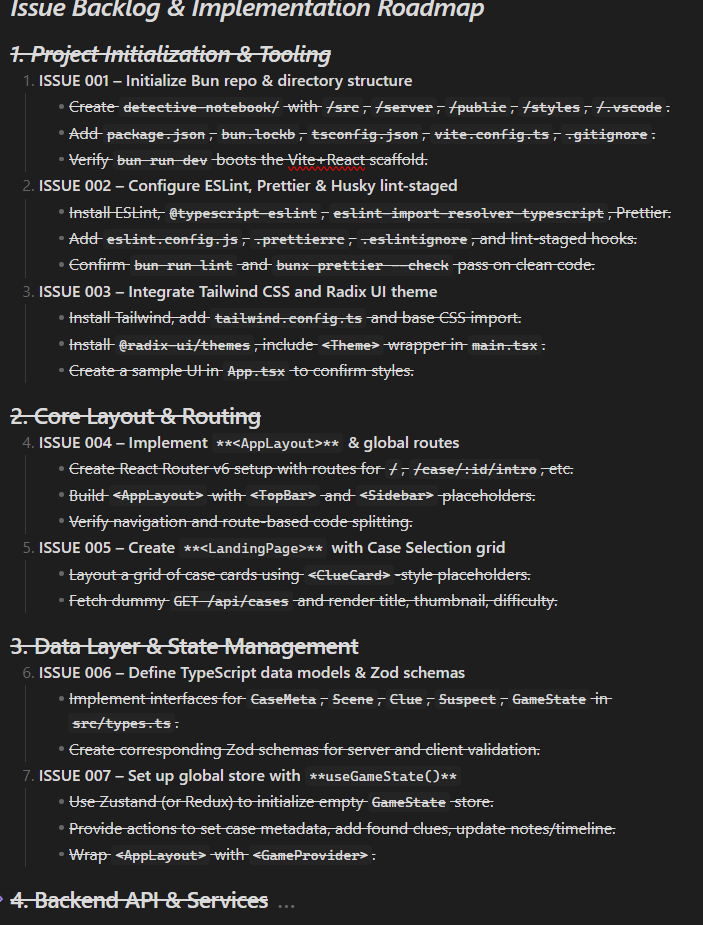

A little tip from a veteran vibe coder, from before it was even a thing: have your LLM create a list of issues in issue form. It's often difficult to get the model to be really granular with its planning, but a way to kinda trick it into looking closer and deeper is to make it create well-thought-out issues by category.

It also helps the planning stage figure out the ordering. Here is a little peek at my Obsidian notebook showing a section of what I'm talking about:

This is a great post, so thanks for sharing these valuable insights. I’m going to explore these in the coming weeks for sure.

Great to hear from a QA perspective. I hope I can bring the same enthusiasm to vibe testing as you did to vibe coding. I'll definitely try. Thanks!