Several new open-source models were released in March 2025. Two of them are Alibaba's QwQ 32B and Google's new Gemma 3 27B, and both are said to be good at reasoning. 🤔

Let's see how these models compare against each other and against one of the finest open-source reasoning models, Deepseek R1.

And if you've been reading my posts for a while, you know that I don't trust benchmarks until I test the models myself! 😉

TL;DR

If you want to jump straight to the conclusion: across these three models, the answer is not as clear-cut as in other comparison posts. QwQ leads on coding, but the other two models are just as top-notch at reasoning.

For coding, go with QwQ 32B or Deepseek R1. For anything else, Gemma 3 is just as good and should get your work done.

Brief on QwQ 32B Model

In the first week of March, Alibaba released this 32B-parameter model and claimed it can rival Deepseek R1, which has 671B parameters. 🤯

This marks their initial step in scaling RL (Reinforcement Learning) to improve the model's reasoning capabilities.

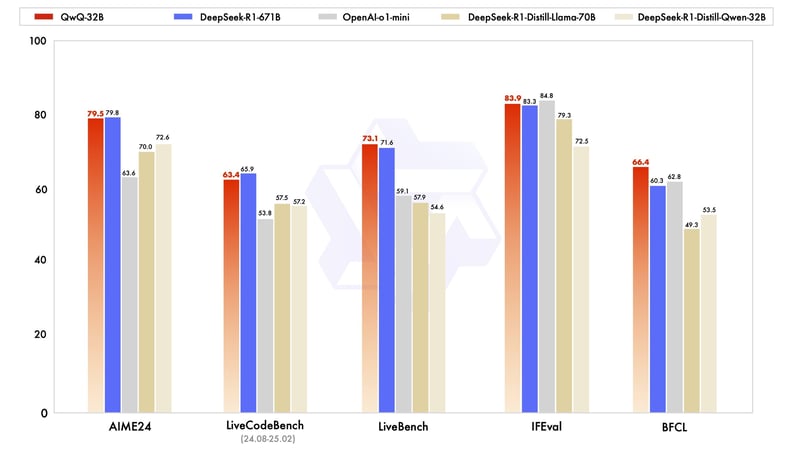

Alibaba published benchmark results (shown above) that highlight QwQ-32B's performance against another leading model, Deepseek R1.

This looks very interesting, especially because they compare it against Deepseek R1, a model about 20x its size.

Brief on Gemma 3 27B Model

Gemma 3 is Google's new open-source model, built on the same research and technology as Gemini 2.0. It comes in four sizes (1B, 4B, 12B, and 27B), but that's not the interesting part.

Google calls it the "most capable model you can run on a single GPU or TPU". 🔥 In practice, this means it can run on much more modest hardware than a multi-GPU server.

It supports a 128K-token context window and over 140 languages, and it is pitched mainly at reasoning tasks.

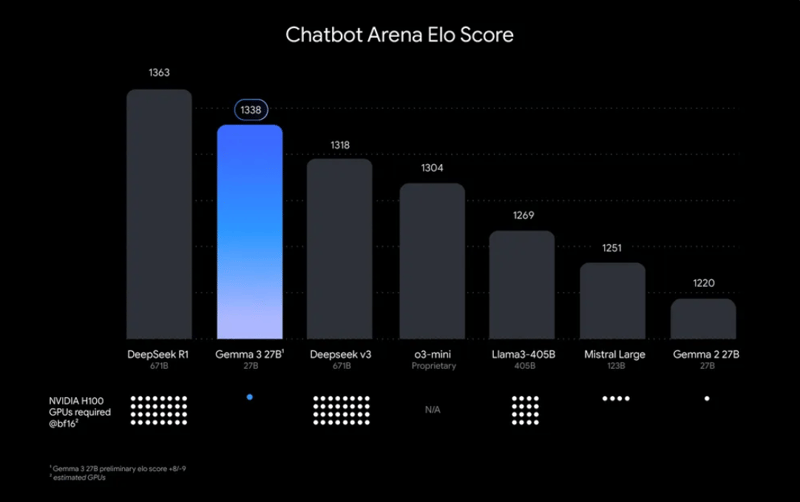

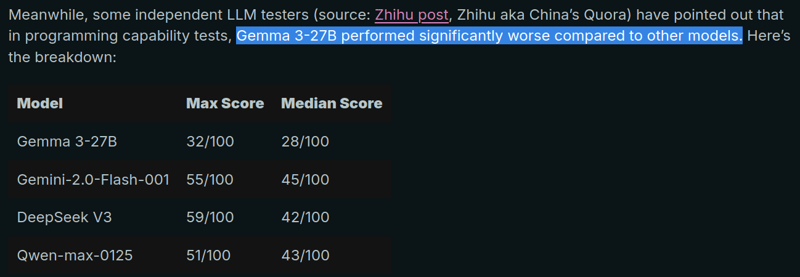

However, according to comparisons many people have shared, Gemma 3 27B does not seem to perform as well on coding benchmarks.

Let's see if that really is the case and how good this model is at reasoning.

Coding Problems

💁 In this section, I will test the coding capabilities of all three models on an animation and a tough LeetCode question.

1. Rotating Sphere with Alphabets

Prompt: Create a JavaScript simulation of a rotating 3D sphere made up of alphabets. The closest letters should be in a brighter color, while the ones farthest away should appear in gray color.
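None of the models' code is reproduced here. However, the geometry the prompt asks for can be sketched on its own. Below is a minimal Python sketch. It is not a port of any model's output and does not run in a browser. It assumes letters are placed on a unit sphere with a golden-angle (Fibonacci) spiral, rotated about the vertical axis each frame, and shaded by depth so that the nearest letters are brightest and the farthest are a dim gray. The helper names, the perspective constant, and the 80 to 255 gray range are my own illustrative choices.

```python
import math
import string


def fibonacci_sphere(n: int) -> list[tuple[float, float, float]]:
    """Spread n points roughly evenly over a unit sphere (golden-angle spiral)."""
    golden_angle = math.pi * (3 - math.sqrt(5))
    points = []
    for i in range(n):
        y = 1 - 2 * (i + 0.5) / n       # height runs from near +1 down to near -1
        radius = math.sqrt(1 - y * y)   # radius of the horizontal slice at that height
        theta = golden_angle * i
        points.append((radius * math.cos(theta), y, radius * math.sin(theta)))
    return points


def rotate_y(p: tuple[float, float, float], angle: float) -> tuple[float, float, float]:
    """Rotate point p around the vertical (y) axis by `angle` radians."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (x * c + z * s, y, -x * s + z * c)


def depth_to_gray(z: float) -> int:
    """Map depth z in [-1, 1] to a 0-255 level; z = +1 (nearest) is brightest.

    Assumption (hypothetical choice): +z points toward the viewer and the
    farthest letters bottom out at a dim gray of 80.
    """
    t = (z + 1) / 2
    return round(80 + t * (255 - 80))


def frame(letters: str, angle: float, size: int = 200):
    """Return (letter, screen_x, screen_y, gray) tuples for one frame, farthest first."""
    out = []
    for ch, p in zip(letters, fibonacci_sphere(len(letters))):
        x, y, z = rotate_y(p, angle)
        scale = 1 / (2.5 - z)  # simple perspective: nearer letters sit farther from center
        sx = size / 2 * (1 + x * scale)
        sy = size / 2 * (1 - y * scale)
        out.append((ch, sx, sy, depth_to_gray(z)))
    # Painter's algorithm: gray grows with z, so sorting by gray draws far letters first.
    out.sort(key=lambda item: item[3])
    return out


if __name__ == "__main__":
    for ch, sx, sy, gray in frame(string.ascii_uppercase, angle=0.3)[-3:]:
        print(f"{ch} at ({sx:.0f}, {sy:.0f}) gray={gray}")
```

In a browser, the equivalent JavaScript would typically recompute a frame like this on every `requestAnimationFrame` tick with a slowly increasing angle. Drawing far-to-near keeps the bright, close letters on top of the gray ones.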

Response from QwQ 32B

You can find the code it generated here: Link

Here's the output of the program:

The output from QwQ is insane. It does exactly what I asked for, from the sphere animation to the spinning letters and their changing colors. Everything is on point. So good!

Response from Gemma 3 27B

You can find the code it generated here: Link

Here's the output of the program:

It did not fully follow my prompt. Something is definitely happening, but I asked for a 3D sphere and got a spinning ring of letters instead.

Given that this model isn't great at coding, at least we got something working!

Response from Deepseek R1

You can find the code it generated here: Link

Here's the output of the program:

It also got this right and implemented what I asked for, no doubt about that. But its overall output doesn't come close to QwQ 32B's.

Summary:

In this section, QwQ 32B is without a doubt the winner. It crushed both of our coding tests, the animation and the hard LeetCode question. It hardly seems fair to compare these smaller models with Deepseek R1, which has 671B parameters (37B active per token), but surprisingly, QwQ 32B beats Deepseek R1 here.

2. LeetCode Problem

For this one, let's see how these models handle a super hard LeetCode question with an acceptance rate of just 14.4%: Strong Password Checker.

Since this is a tough question, I have little to no hope for any of the three models. None of them is as strong at coding as models like Claude 3.7 Sonnet.

If you want to see how Claude 3.7 compares to some top models like Grok 3 and o3-mini-high, check out this blog post:

Claude 3.7 Sonnet vs. Grok 3 vs. o3-mini-high: Coding comparison (Shrijal Acharya for Composio, Feb 27)

Prompt:

A password is considered strong if the below conditions are all met:

- It has at least 6 characters and at most 20 characters.

- It contains at least one lowercase letter, at least one uppercase letter, and at least one digit.

- It does not contain three repeating characters in a row (i.e., "Baaabb0" is weak, but "Baaba0" is strong).

Given a string password, return the minimum number of steps required to make password strong. if password is already strong, return 0.

In one step, you can:

- Insert one character to password,

- Delete one character from password, or

- Replace one character of password with another character.

Example 1:

Input: password = "a"

Output: 5

Example 2:

Input: password = "aA1"

Output: 3

Example 3:

Input: password = "1337C0d3"

Output: 0

Constraints:

1 <= password.length <= 50

password consists of letters, digits, dot '.' or exclamation mark '!'.
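For reference, this problem is usually solved with a greedy, single-pass approach. Here is a minimal Python sketch of that well-known technique. It is my own write-up, not the code any of the three models produced. The sketch works in three steps:

1. Count the missing character types.
2. Measure each run of three or more repeated characters.
3. For passwords longer than 20 characters, spend the mandatory deletions where they save the most replacements: first on runs whose length is divisible by 3, then on runs with `length % 3 == 1`, then three at a time.

```python
def strong_password_checker(password: str) -> int:
    """Minimum insert/delete/replace steps to make `password` strong (greedy, O(n))."""
    n = len(password)
    # How many of the three required character types are absent.
    missing = 3 - (any(c.islower() for c in password)
                   + any(c.isupper() for c in password)
                   + any(c.isdigit() for c in password))

    replace = 0      # replacements needed to break every run of 3+ identical chars
    mod0 = mod1 = 0  # number of runs with length % 3 == 0 and length % 3 == 1
    i = 2
    while i < n:
        if password[i] == password[i - 1] == password[i - 2]:
            length = 2
            while i < n and password[i] == password[i - 1]:
                length += 1
                i += 1
            replace += length // 3  # one replacement per 3 repeated characters
            if length % 3 == 0:
                mod0 += 1
            elif length % 3 == 1:
                mod1 += 1
        else:
            i += 1

    if n < 6:
        # Insertions can add missing types and break runs at the same time.
        return max(missing, 6 - n)
    if n <= 20:
        # Each replacement can also supply a missing character type.
        return max(missing, replace)

    # Too long: deletions are mandatory, so use them to cancel replacements.
    delete = n - 20
    replace -= min(delete, mod0)                             # 1 deletion saves 1 replacement
    replace -= min(max(delete - mod0, 0), mod1 * 2) // 2     # 2 deletions save 1
    replace -= max(delete - mod0 - 2 * mod1, 0) // 3         # then 3 deletions save 1
    return delete + max(missing, replace)


print(strong_password_checker("a"), strong_password_checker("aA1"),
      strong_password_checker("1337C0d3"))  # 5 3 0
```

The ordering of the deletions is the crux. A run of length L needs L // 3 replacements, so trimming a run just below a multiple of 3 removes one replacement for the cheapest number of deletions.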

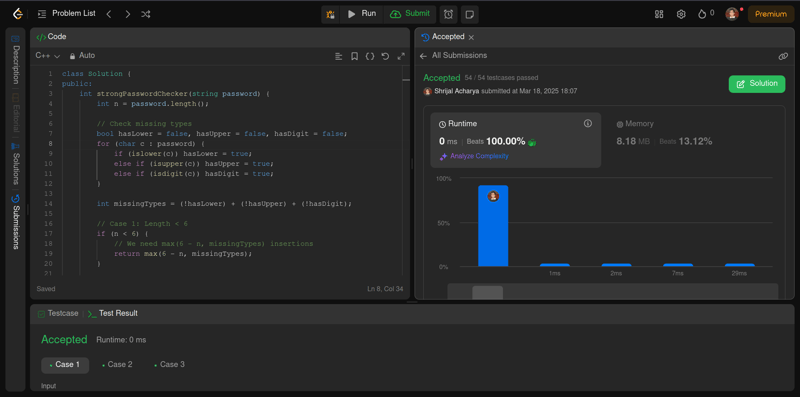

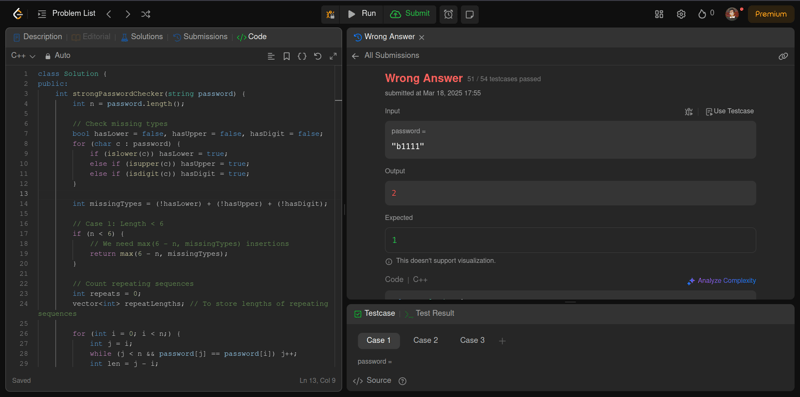

Response from QwQ 32B

You can find the code it generated here: Link

Damn, it got the answer right. Not only that, it wrote the entire solution in O(N) time, which is within the expected time complexity.

As for code quality, I'd say it is decent, and it documented everything properly. Fair enough, the model seems to have a lot of potential.

Though it took a lot of time to think, it’s the working answer that really matters here.

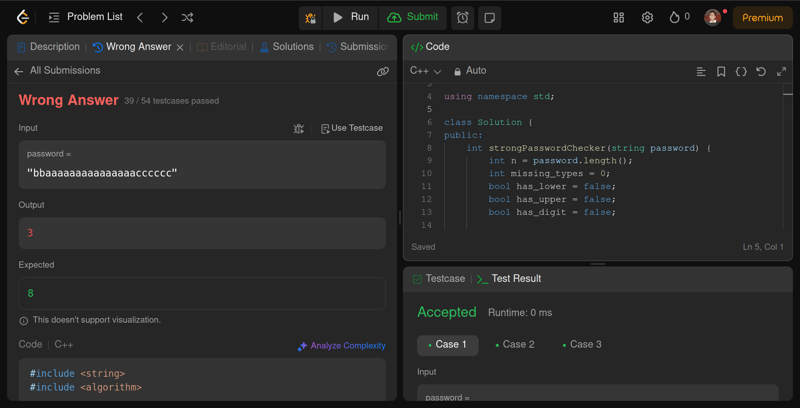

Response from Gemma 3 27B

You can find the code it generated here: Link

Okay, Gemma 3 falls short here. It got about three-quarters of the way there, with 39/54 test cases passing, but code that fails on edge cases doesn't really help. Better not to generate code at all than to generate it poorly. 🤷‍♂️

But considering that it's an open-source model with just 27B parameters that runs on a single GPU or TPU, that's understandable.

Response from Deepseek R1

I have almost no hope for this model on this question. In my previous test, where I compared Deepseek R1 with Grok 3, Deepseek R1 failed pretty badly. If you’d like to take a look:

Grok 3 vs. Deepseek r1: A deep analysis (Shrijal Acharya for Composio, Feb 21)

You can find the code it generated here: Link

Cool, it almost got it with 51/54 test cases passing. But even a single test case failure will result in an incorrect submission, so yeah, hard luck here for Deepseek R1.

Summary:

The result is pretty clear across these two coding questions. QwQ 32B is the clear winner 🔥. Gemma 3 27B gave it a try, but it's definitely not something you'd want to use for advanced coding. As for Deepseek R1, it's mid, but it gets the job done for most basic to intermediate coding problems, and I use it daily myself.

Reasoning Problems

💁 Here, we will check the reasoning capabilities of all three models.

1. Fruit Exchange

Let’s start off with a pretty simple question (not tricky at all) which requires a bit of common sense. But let’s see if these models have it.

I wanted to test whether the models can pick out just what is asked and reason only about what is needed, rather than working through everything that is given. It's similar to asking what 10000*3456*0*1234 is. 🥱

Prompt: You start with 14 apples. Emma takes 3 but gives back 2. You drop 7 and pick up 4. Leo takes 4 and gives 5. You take 1 apple from Emma and trade it with Leo for 3 apples, then give those 3 to Emma, who hands you an apple and an orange. Zara takes your apple and gives you a pear. You trade the pear with Leo for an apple. Later, Zara trades an apple for an orange and swaps it with you for another apple. How many pears do you have? Answer me just what is asked.

As you can see, the prompt is full of unnecessary detail about apples and oranges. The pear, which is the only thing asked about, appears only near the end: Zara gives you one, and you then trade it to Leo, leaving you with 0 pears.
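The filtering the question is testing for can be made concrete. Only two events in the whole prompt change the pear count, and every apple and orange trade can be ignored. A trivial Python sketch follows. The event labels are paraphrased from the prompt, and the tally structure is just an illustration.

```python
# Only the events that change the number of pears matter for the question asked.
pear_events = [
    ("Zara takes your apple and gives you a pear", +1),
    ("You trade the pear with Leo for an apple", -1),
]

pears = sum(delta for _, delta in pear_events)
print(pears)  # 0
```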

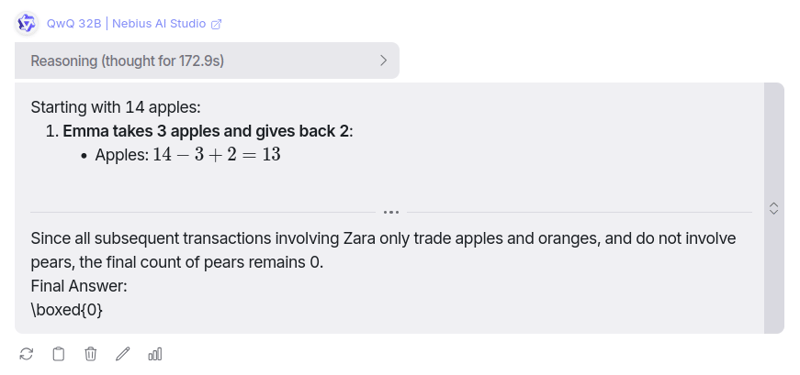

Response from QwQ 32B

You can find its reasoning here: Link

Just as I thought, it completely lacks this. 😮‍💨 It thought for 172 seconds (~2.9 minutes), working through all the apple and orange calculations. This is definitely disappointing from QwQ 32B.

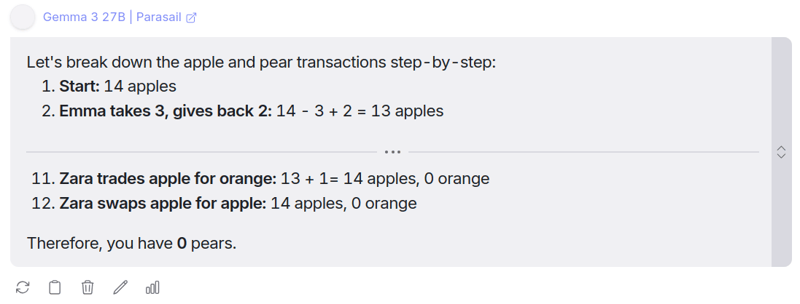

Response from Gemma 3 27B

You can find its reasoning here: Link

In just a few seconds, it was able to calculate all the situations and return the total pear count. Can't really complain much here.

The response was super quick. I'm really impressed by this model.

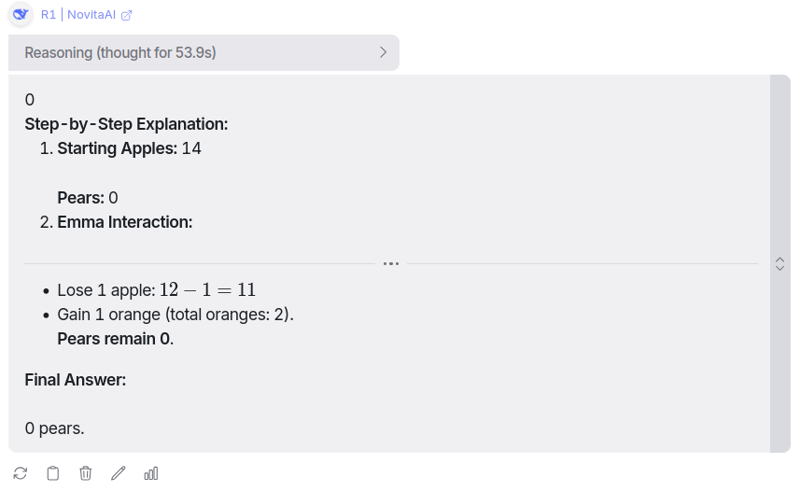

Response from Deepseek R1

You can find its reasoning here: Link

It thought for about a minute and came up with the answer. I expected it to get the right answer. What I really wanted to see was whether it could answer just what I asked without all the unnecessary apple and orange calculations. Sadly, it failed as well.

Summary:

To be honest, I wasn't really looking for the correct answer with this question, since even a first grader could answer it. I was simply trying to see whether these LLMs could filter out all the unnecessary details and answer just what is asked. Sadly, all of them failed, even though I added "Answer me just what is asked." at the end of the prompt. 😮‍💨

2. Woman in an Elevator

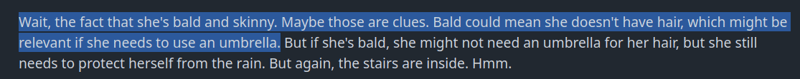

Prompt: A bald, skinny woman lives on the 78th floor of an apartment. On sunny days, she takes the elevator up to the 67th floor and walks the rest of the way. On rainy days, she takes the elevator straight up to her floor. Why does she take the elevator all the way to her floor on rainy days?

The question is somewhat tricky because I included similar unnecessary details to distract the LLMs from the answer. The answer is that the woman is short and can't reach the button for the 78th floor. On rainy days, though, she carries an umbrella that she can use to press the higher button.

The answer has nothing to do with the woman being bald or skinny. 🥴

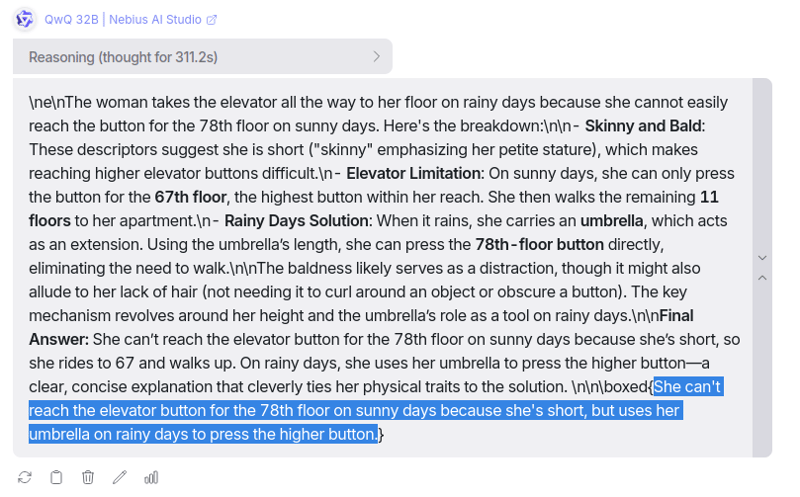

Response from QwQ 32B

You can find its reasoning here: Link

It took a seriously long time here, 311 seconds (~5.2 minutes), partly spent figuring out what her being bald and skinny had to do with anything. Still, I am super impressed with the response.

The way it explained its thought process is really impressive. You may want to take a look as well.

Fair to say, QwQ 32B really got it correct and explained everything perfectly. ✅

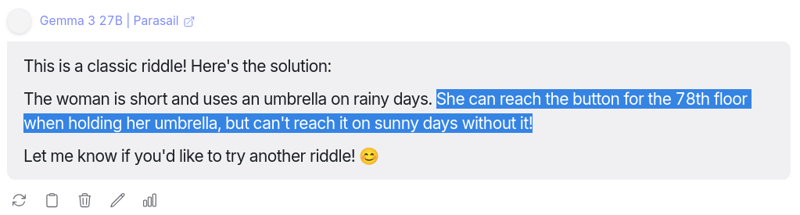

Response from Gemma 3 27B

You can find its reasoning here: Link

I was really shocked by Gemma 3: within a couple of seconds, it got it right. This model is looking solid on reasoning tasks. So far, I am super impressed with this Google open-source model. 🔥

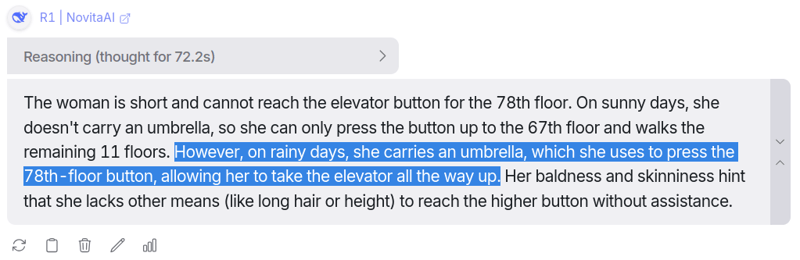

Response from Deepseek R1

You can find its reasoning here: Link

We know how good Deepseek is at reasoning tasks, so it's not really surprising that it got the answer correct.

It took some time to come up with the answer, thinking straight for about 72 seconds (~1.2 minutes), but I love the reasoning and thought process it comes up with every time.

It was really having a hard time understanding what the problem had to do with the woman being bald and skinny, but hey, that's the reason I added it. 🥱

Summary:

There's no doubt that all three models are super good at reasoning questions. I especially love the way QwQ 32B and Deepseek R1 explain their thought process, and how quickly Gemma 3 answered both questions. A solid 10/10 for all three models for reaching the answer ✅, though QwQ 32B can sometimes feel like it overthinks. 🤷‍♂️

Mathematics Problems

💁 Judging by their answers to the reasoning questions, I was convinced that all three models would also pass the math questions.

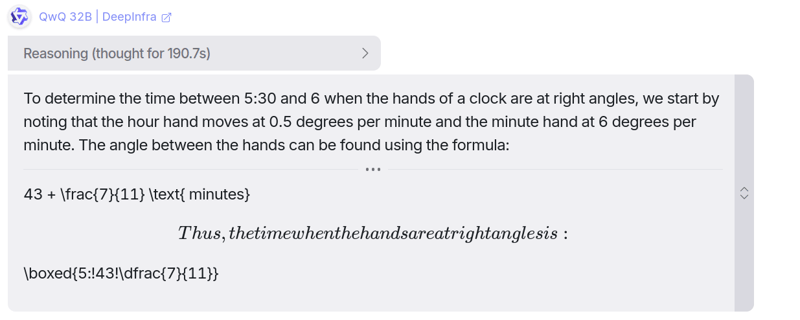

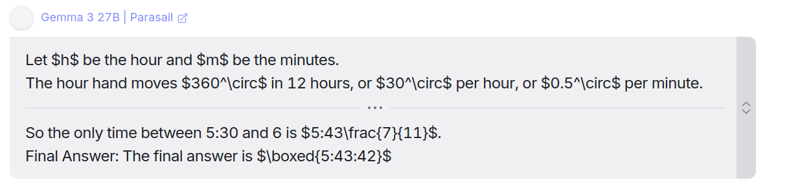

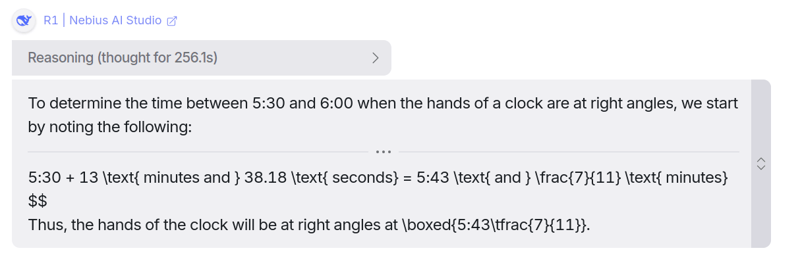

1. Clock Hands at Right Angle

Prompt: At what time between 5.30 and 6 will the hands of a clock be at right angles?
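To check the models' answers, here is a minimal Python sketch of the standard approach using exact fractions. The minute hand moves 6° per minute and the hour hand 0.5° per minute. The angle between them therefore changes by 5.5° per minute, starting from 30 × hour degrees at the top of the hour. We solve for the moments when that angle is ±90° (mod 360°). The function name is my own.

```python
from fractions import Fraction


def right_angle_times(hour: int) -> list[Fraction]:
    """Minutes m past `hour` (0 <= m < 60) when the clock hands are exactly 90 degrees apart."""
    rate = Fraction(11, 2)  # minute hand gains 6 - 0.5 = 5.5 degrees per minute
    start = 30 * hour       # hour hand's lead over the minute hand at m = 0, in degrees
    times = []
    for k in range(-2, 3):  # a couple of full turns either way covers one hour
        for gap in (90, -90):
            m = (start + gap + 360 * k) / rate
            if 0 <= m < 60:
                times.append(m)
    return sorted(times)


# Keep only the moment that falls between 5:30 and 6:00.
in_window = [m for m in right_angle_times(5) if 30 <= m < 60]
whole, frac = divmod(in_window[0], 1)
print(f"5:{whole} {frac}")  # 5:43 7/11
```

Between 5:00 and 6:00, the hands form a right angle twice: at 5:10 10/11 and at 5:43 7/11. Only the second moment falls in the window the question asks about.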

Response from QwQ 32B

You can find its reasoning here: Link

Response from Gemma 3 27B

You can find its reasoning here: Link

Coding aside, Gemma 3 got this one right as well and has been crushing every reasoning and math question so far. What a tiny yet powerful model.

Really impressed! 🫡

Response from Deepseek R1

You can find its reasoning here: Link

My earlier article comparing Deepseek R1 and Grok 3 already showed how good Deepseek is at mathematics, so I had high hopes for this model.

And as usual, it got this question right as well. It reasoned for quite a while, but it got there.

Summary:

All three models are already doing so well with reasoning and mathematics. They all got it correct. Gemma 3 27B does it in no time, and the other two models, QwQ 32B and Deepseek R1, crush this as well with proper reasoning. ✅

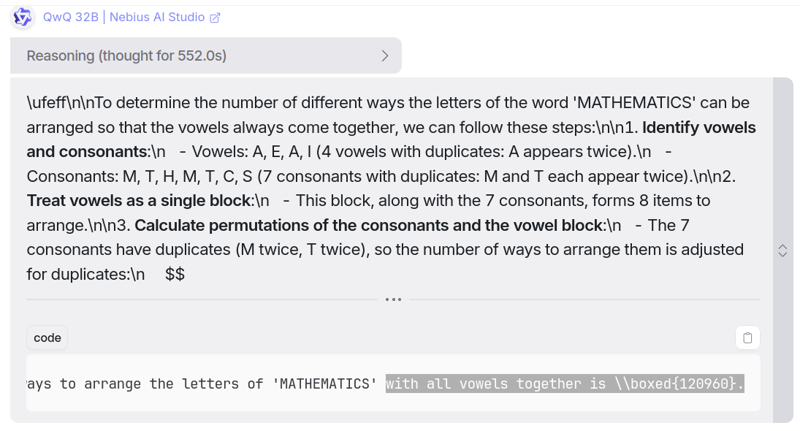

2. Letter Arrangements

Prompt: In how many different ways can the letters of the word 'MATHEMATICS' be arranged so that the vowels always come together?

It's a classic math question to ask an LLM, so let's see how these three models perform.
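The usual method is to glue the vowels together into a single block. Here is a minimal Python sketch of that count, assuming the standard A/E/I/O/U vowel set. MATHEMATICS has 7 consonants (M and T each appear twice) plus the vowel block, giving 8 units to arrange: 8!/(2!·2!) = 10080. The block itself holds A, A, E, I, which can be ordered in 4!/2! = 12 ways. The helper name is my own.

```python
from collections import Counter
from math import factorial


def multiset_perms(counts: Counter) -> int:
    """Distinct orderings of a multiset: n! / (c1! * c2! * ...)."""
    total = factorial(sum(counts.values()))
    for c in counts.values():
        total //= factorial(c)
    return total


word = "MATHEMATICS"
vowels = Counter(ch for ch in word if ch in "AEIOU")          # A, A, E, I
consonants = Counter(ch for ch in word if ch not in "AEIOU")  # M, M, T, T, H, C, S

# Treat the vowels as one glued block, then arrange it with the 7 consonants (8 units).
outer = multiset_perms(consonants + Counter({"<vowel block>": 1}))
inner = multiset_perms(vowels)  # orderings inside the vowel block
print(outer, inner, outer * inner)  # 10080 12 120960
```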

Response from QwQ 32B

You can find its reasoning here: Link

After thinking for 552 seconds (~9.2 minutes) 🥱, yes, it really took that long to come up with the answer, but as always, it nailed this question as well.

Admittedly, its reasoning feels super long and tedious, but it gets the job done. The QwQ 32B model is looking really solid and has been crushing every question so far. 🔥

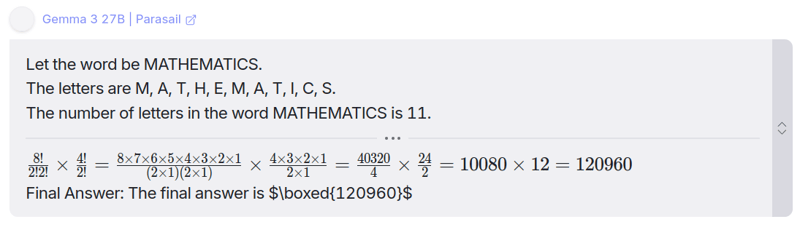

Response from Gemma 3 27B

You can find its reasoning here: Link

It was spot on. How quickly and accurately this model responds is just amazing. Google really did a great job with this model, no doubt about that. 😵

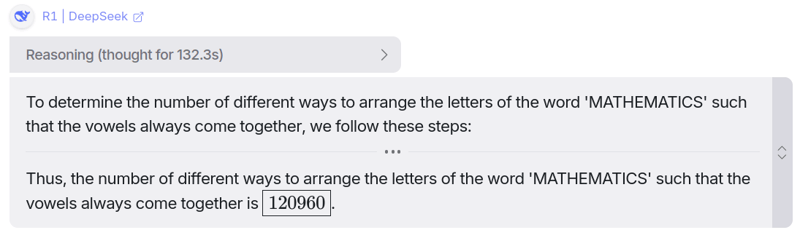

Response from Deepseek R1

You can find its reasoning here: Link

After thinking for around 132 seconds (~2.2 minutes), it was able to come up with the answer, and once again, the correct answer from Deepseek R1.

Summary:

The answer is obvious this time as well. All three models got it right, with clear explanations and reasoning. For a question this tough, that's a super impressive result from all three, and to me, Gemma 3 27B is the one that stands out. What a solid, lightweight model. 🔥

Conclusion

The results are pretty clear. QwQ 32B performed really well and is the clear winner of this comparison ✅, and other people testing these models seem to have reached the same conclusion. 👇 Still, if I had to choose one model after all this, it would be Deepseek R1.

To me, Deepseek R1 feels like the sweet spot, balancing reasoning quality and overall response time.

Even though Gemma 3 and Deepseek R1 couldn't fully solve the coding questions, they are just too good at overall reasoning. I couldn't be more impressed with Gemma 3 27B. It really is a model you should have in your toolbox.

What do you think? Let me know your thoughts in the comments below! 👇
