🌟 Hello! I’m André, a Staff Software Engineer and proud member of the AWS Community Builders program. With 9 AWS certifications earned along my journey, I’m constantly pushing the boundaries of what’s possible in the cloud. I’m passionate about designing scalable architectures, experimenting with emerging technologies like Generative AI, and giving back to the tech community by sharing what I learn. Excited to connect with fellow builders and innovators as we shape the future of cloud computing! 🚀

Introduction

In this article, we’ll explore how you can build your own AI-powered video generation workflow using Amazon Bedrock, AWS Lambda, Amazon CloudWatch, and Amazon S3. We'll use Amazon Nova Reel as the foundation model to generate custom videos from text prompts provided by users.

Initial Approach adopted

This solution follows an asynchronous architecture: the first Lambda function receives the prompt and starts the video generation job in Amazon Bedrock. Once Bedrock has created the video and saved it to an S3 bucket, an S3 Event Notification triggers a second Lambda function, which generates a pre-signed URL for the video and writes it to Amazon CloudWatch Logs.
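The S3 Event Notification that connects the two functions can be set up in the console, but it could also be scripted. Here is a minimal sketch, assuming the generated videos land under an `outputs/` prefix with an `.mp4` suffix. The helper names `build_notification_config` and `apply_notification_config` are hypothetical and not part of the original solution.

```python
def build_notification_config(lambda_arn: str,
                              prefix: str = "outputs/",
                              suffix: str = ".mp4") -> dict:
    """Build an S3 NotificationConfiguration that invokes a Lambda
    function whenever a matching object is created."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [
                            {"Name": "prefix", "Value": prefix},  # assumed prefix
                            {"Name": "suffix", "Value": suffix},  # assumed suffix
                        ]
                    }
                },
            }
        ]
    }


def apply_notification_config(bucket: str, config: dict) -> None:
    """Attach the configuration to the bucket (requires AWS credentials)."""
    import boto3  # imported lazily so the builder works without AWS access

    s3 = boto3.client("s3")
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=config,
    )
```

Keep in mind that this call replaces any notification configuration already on the bucket, and that the second Lambda also needs a resource-based permission allowing S3 to invoke it.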

Target Audience

This guide is ideal for AWS Community Builders, IT professionals, and Generative AI enthusiasts who want to integrate AI-driven video generation into real-world serverless applications.

Bedrock Prerequisites

Accessing Amazon Nova Foundation Model via Amazon Bedrock

Before starting your project, make sure you complete the following steps:

AWS Account Setup:

- Have an active AWS Account with billing enabled.

- Ensure your IAM user or role has the necessary Bedrock service permissions, such as:

  - bedrock:InvokeModel

  - bedrock:ListFoundationModels
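As a rough sketch, the permissions above could be expressed as an IAM policy document like the one below. The two actions from the list are included as-is. The `bedrock:StartAsyncInvoke`, `bedrock:GetAsyncInvoke`, and `s3:PutObject` entries are my assumptions, added because the first Lambda calls `start_async_invoke` and Bedrock writes the result to S3. Please verify them against the current AWS documentation. The `build_policy` helper and the `outputs/` prefix are illustrative only.

```python
import json


def build_policy(bucket: str, prefix: str = "outputs/") -> dict:
    """Return an IAM policy document for the role that starts video generation."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "BedrockVideoGeneration",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",           # listed in this guide
                    "bedrock:ListFoundationModels",  # listed in this guide
                    "bedrock:StartAsyncInvoke",      # assumption: async jobs
                    "bedrock:GetAsyncInvoke",        # assumption: job status
                ],
                "Resource": "*",
            },
            {
                "Sid": "WriteGeneratedVideos",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],          # assumption: output upload
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    # Print the policy so it can be pasted into the IAM console.
    print(json.dumps(build_policy("BUCKETNAME"), indent=2))
```

In a real deployment you would likely narrow `"Resource": "*"` for the model-specific actions to the ARN of the model you use.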

Step 1: Enable Amazon Bedrock in Your AWS Account:

- Log in to the AWS Management Console.

- Navigate to the Amazon Bedrock service.

- Make sure you are in a supported AWS region, like us-east-1.

Step 2: Request Access to Amazon Nova Foundation Model:

- In the Bedrock Console, go to “Model access”.

- Under “Manage model access”, locate Amazon Nova Foundation Model.

- Click “Request access” for the Nova model if you don’t already have it.

- Wait for AWS approval. This process can take from a few minutes to several hours, depending on your AWS account and region.

Step 3: Gather Model API Details:

- Go to the “Foundation models” section in the Bedrock Console (left-hand menu) and note the model ID. This guide uses amazon.nova-reel-v1:0.
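If you prefer to look up the model ID programmatically instead of in the console, something like the following sketch might help. It uses the `bedrock` control-plane client (not `bedrock-runtime`) and filters the results client-side. The helper names and the `"nova-reel"` search string are assumptions for illustration.

```python
def find_model_ids(summaries: list, needle: str = "nova-reel") -> list:
    """Return the model IDs whose identifier contains the search string."""
    return [m["modelId"] for m in summaries if needle in m.get("modelId", "")]


def list_amazon_models(region: str = "us-east-1") -> list:
    """Fetch model summaries for Amazon-provided models (requires AWS credentials)."""
    import boto3  # imported lazily so find_model_ids works without AWS access

    bedrock = boto3.client("bedrock", region_name=region)
    response = bedrock.list_foundation_models(byProvider="Amazon")
    return response["modelSummaries"]


if __name__ == "__main__":
    print(find_model_ids(list_amazon_models()))
```

Being listed here does not mean access has been granted. The model access request from Step 2 still applies.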

First Lambda - Start video creation process

Code explained below

Importing Required Libraries and Initializing Parameters

    import json    # For returning API responses in JSON format
    import boto3   # AWS SDK for Python (to call Bedrock)
    import random  # To generate a random seed for output variability

    def lambda_handler(event, context):
        # Initialize Bedrock client in the desired AWS region
        bedrock = boto3.client("bedrock-runtime", region_name="us-east-1")

        # Get the input prompt from the event (or use default)
        prompt = event.get("prompt", "Sample video")

        # Generate a random seed to ensure unique output per request
        seed = random.randint(0, 2_147_483_646)

Triggering Asynchronous Video Generation

        response = bedrock.start_async_invoke(
            modelId="amazon.nova-reel-v1:0",
            modelInput={
                "taskType": "TEXT_VIDEO",
                "textToVideoParams": {"text": prompt},
                "videoGenerationConfig": {
                    "fps": 24,
                    "durationSeconds": 6,
                    "dimension": "1280x720",
                    "seed": seed
                }
            },
            # Replace BUCKETNAME with your bucket; the value must be a full s3:// URI
            outputDataConfig={"s3OutputDataConfig": {"s3Uri": "s3://BUCKETNAME/outputs/"}}
        )

This is the heart of the Lambda function. We call the start_async_invoke method of the Bedrock Runtime API, which is used for models that run asynchronously (like video generation with Nova Reel).

        return {
            "statusCode": 202,
            "body": json.dumps({"invocationArn": response["invocationArn"]}),
        }

Finally, the Lambda returns a 202 Accepted HTTP status code, along with the invocationArn, which acts as the Job ID for tracking the status of the asynchronous generation process.
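To show how the invocationArn can be used for tracking, here is a minimal polling sketch built on the `get_async_invoke` call of the `bedrock-runtime` client. The `wait_for_video` helper, the polling interval, and the retry limit are assumptions of mine and not part of the original solution, which relies on the S3 event instead of polling.

```python
import time


def wait_for_video(bedrock_runtime, invocation_arn: str,
                   poll_seconds: float = 15.0, max_polls: int = 40) -> str:
    """Poll an async Bedrock job and return the S3 URI of its output.

    `bedrock_runtime` is a boto3 "bedrock-runtime" client (or any object
    exposing a compatible get_async_invoke method).
    """
    for _ in range(max_polls):
        job = bedrock_runtime.get_async_invoke(invocationArn=invocation_arn)
        status = job["status"]  # "InProgress", "Completed" or "Failed"
        if status == "Completed":
            return job["outputDataConfig"]["s3OutputDataConfig"]["s3Uri"]
        if status == "Failed":
            raise RuntimeError(job.get("failureMessage", "video generation failed"))
        time.sleep(poll_seconds)
    raise TimeoutError(f"job still in progress after {max_polls} polls")
```

A caller would pass `boto3.client("bedrock-runtime", region_name="us-east-1")` as the first argument. Polling like this is probably better suited to a local script or a Step Functions loop than to the first Lambda itself, since that function is meant to return right away.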

Second Lambda - Post-processing event

Code explained below

    import boto3
    import json
    from urllib.parse import unquote_plus  # S3 event keys arrive URL-encoded

    s3 = boto3.client("s3")  # S3 client for generating pre-signed URLs

    def lambda_handler(event, context):
        print(json.dumps(event))  # Log the incoming S3 event payload

        for record in event['Records']:
            bucket = record['s3']['bucket']['name']  # Bucket name where the video was saved
            key = unquote_plus(record['s3']['object']['key'])  # Decoded object key (file path) of the uploaded video

            # Generate a pre-signed URL valid for 1 hour
            presigned_url = s3.generate_presigned_url(
                ClientMethod='get_object',
                Params={'Bucket': bucket, 'Key': key},
                ExpiresIn=3600
            )

            print(f"Video URL: {presigned_url}")  # Log the generated link (you can replace this with SNS, DynamoDB, etc.)

        return {
            "statusCode": 200,
            "body": json.dumps({"message": "Processed"})
        }
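The comment in the handler mentions that the logged link could be replaced with SNS. Here is one way that might look, as a sketch only. It assumes an SNS topic already exists and that we only care about `.mp4` objects, since other files may be written next to the video. The `video_objects` and `notify` helpers, the subject line, and the message format are hypothetical.

```python
import json
from urllib.parse import unquote_plus


def video_objects(event: dict) -> list:
    """Return (bucket, key) pairs for the .mp4 objects in an S3 event."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys are URL-encoded
        if key.lower().endswith(".mp4"):
            pairs.append((bucket, key))
    return pairs


def notify(sns, s3, topic_arn: str, event: dict, expires_in: int = 3600) -> int:
    """Publish one SNS message per video and return how many were sent.

    `sns` and `s3` are boto3 clients created by the caller.
    """
    sent = 0
    for bucket, key in video_objects(event):
        url = s3.generate_presigned_url(
            ClientMethod="get_object",
            Params={"Bucket": bucket, "Key": key},
            ExpiresIn=expires_in,
        )
        sns.publish(
            TopicArn=topic_arn,
            Subject="Your video is ready",
            Message=json.dumps({"bucket": bucket, "key": key, "url": url}),
        )
        sent += 1
    return sent
```

Inside the handler, this could be called as `notify(boto3.client("sns"), s3, TOPIC_ARN, event)`, where `TOPIC_ARN` is a placeholder for your own topic. The Lambda role would then also need `sns:Publish` on that topic.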

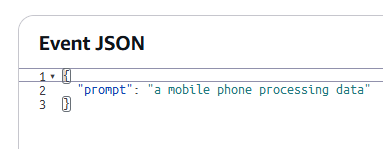

Calling the first Lambda
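If you want to call the first Lambda from a script rather than from the console, a sketch like this could work. The function name `start-video-generation` is a placeholder, not the name used in the original project, and the helper functions are illustrative. The payload shape and the response format follow the handler shown earlier.

```python
import json


def build_payload(prompt: str) -> bytes:
    """Encode the event the first Lambda expects: {"prompt": "..."}."""
    return json.dumps({"prompt": prompt}).encode("utf-8")


def extract_invocation_arn(lambda_result: dict) -> str:
    """Pull the invocationArn out of the handler's JSON-encoded body."""
    body = json.loads(lambda_result["body"])
    return body["invocationArn"]


def start_video(prompt: str,
                function_name: str = "start-video-generation",  # placeholder name
                region: str = "us-east-1") -> str:
    """Invoke the first Lambda synchronously (requires AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without AWS access

    client = boto3.client("lambda", region_name=region)
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=build_payload(prompt),
    )
    result = json.loads(response["Payload"].read())
    return extract_invocation_arn(result)
```

For example, `start_video("A drone shot over a rocky coastline at sunset")` would return the ARN of the Bedrock job that the first Lambda started.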

Getting the video's pre-signed URL after the S3 event invokes the second Lambda

AI Generated video from your application

And that’s a wrap!

Thank you for taking the time to read through this guide. I hope it brought you clarity and practical knowledge to help you on your cloud and AI journey. I’m passionate about making technical topics more accessible, and there’s plenty more content coming soon. Stay curious, keep experimenting, and let’s continue building great things together!

![Cover image for Video Generation using BedRock [Part 1] Amazon Nova Canvas, Lambda and S3](https://media2.dev.to/dynamic/image/width=1000,height=420,fit=cover,gravity=auto,format=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2Fh0jce2plmv12y8vbpqpb.png)
