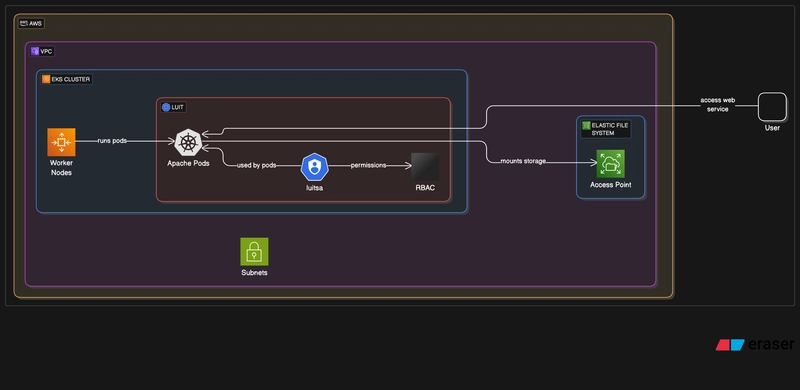

Featuring Namespaces, Role-Based Access Control (a ClusterRole and a ClusterRoleBinding), observability (liveness and readiness probes) and a Kubernetes Apache Deployment with 5 replicas. This is my first-ever Kubernetes project on EKS, and I can't wait to build more!!! The GitHub repo for this project can be found here:

Repo-link

This project was also published on my public portfolio page over on Medium.com at:

Business Use Application/Relevance:

- This project shows how a containerized Apache web server can be deployed on a Kubernetes cluster provisioned with Amazon EKS and exposed to traffic. The cluster infrastructure is deployed as infrastructure as code with Terraform. Then, after pointing my local kubeconfig at the EKS cluster, I deploy Apache to it.

Challenges I Faced and How I Solved Them:

Which Service type to use to expose the Apache deployment. After a lot of trial and error, I realized that a LoadBalancer Service worked best for me.
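If a Service was first created as a NodePort, `kubectl patch` is one way to try the LoadBalancer type without recreating it. The sketch below assumes the Service name `luitservice` and namespace `luit` used later in this post. The `run` helper and function name are hypothetical, and the script only prints the commands unless `APPLY=1` is set.

```shell
#!/bin/sh
# Sketch: switch an existing Service from NodePort to LoadBalancer in place.
# Prints the kubectl commands by default; set APPLY=1 to execute them.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

switch_to_loadbalancer() {
  svc="${1:-luitservice}"
  ns="${2:-luit}"
  # Strategic merge patch that only changes spec.type; the existing nodePort stays.
  run kubectl patch service "$svc" -n "$ns" -p '{"spec":{"type":"LoadBalancer"}}'
  # EXTERNAL-IP shows <pending> until AWS has provisioned the load balancer.
  run kubectl get service "$svc" -n "$ns"
}

switch_to_loadbalancer luitservice luit
```

You can get the same result declaratively by setting `type: LoadBalancer` in service.yaml.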

Security group trouble. Make sure your security groups allow the ports you open in your Kubernetes manifests.

Part 1: Set Up the GitHub Repository and Lock It Down

- I will create a GitHub repository and then lock it down:

- I will create a dev branch:

- I will clone the repository and edit it in Visual Studio Code:

Part 2: Create Kubernetes Cluster Terraform Files and Deploy Infrastructure

In Visual Studio Code, I will create the following files for my cluster (I used EKS Auto Mode):

I will then open a terminal and run the commands:

```shell
export AWS_ACCESS_KEY_ID=<your-aws-access-key-id>
export AWS_SECRET_ACCESS_KEY=<your-secret-access-key>

terraform init
terraform fmt
terraform validate
terraform plan
terraform apply -auto-approve
```
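The S3 backend in terraform.tf expects the bucket `eks-backend-dtm` to exist already when `terraform init` runs. Below is a minimal sketch for creating it with the AWS CLI, using the bucket name and region from terraform.tf. Enabling versioning is my own addition and is not required by the original setup. The helper names are hypothetical, and nothing runs unless `APPLY=1` is set.

```shell
#!/bin/sh
# Sketch: create the S3 bucket for the Terraform S3 backend before `terraform init`.
# Prints the AWS CLI commands by default; set APPLY=1 to execute them.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

create_state_bucket() {
  bucket="$1"
  region="${2:-us-east-1}"
  if [ "$region" = "us-east-1" ]; then
    # us-east-1 is the one region that must not be given a LocationConstraint.
    run aws s3api create-bucket --bucket "$bucket" --region "$region"
  else
    run aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=$region"
  fi
  # Versioning keeps earlier copies of the state file if it is overwritten or corrupted.
  run aws s3api put-bucket-versioning --bucket "$bucket" --region "$region" \
    --versioning-configuration Status=Enabled
}

create_state_bucket eks-backend-dtm us-east-1
```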

My files:

main.tf:

```hcl
provider "aws" {
  region = "us-east-1"
}

resource "aws_eks_cluster" "example" {
  name = "dtmcluster"

  access_config {
    authentication_mode = "API"
  }

  role_arn = aws_iam_role.cluster.arn
  version  = "1.32"

  bootstrap_self_managed_addons = false

  compute_config {
    enabled       = true
    node_pools    = ["general-purpose"]
    node_role_arn = aws_iam_role.node.arn
  }

  kubernetes_network_config {
    elastic_load_balancing {
      enabled = true
    }
  }

  storage_config {
    block_storage {
      enabled = true
    }
  }

  vpc_config {
    endpoint_private_access = true
    endpoint_public_access  = true

    subnet_ids = [
      var.subnet_1,
      var.subnet_2,
      var.subnet_3,
    ]
  }

  # Ensure that IAM Role permissions are created before and deleted
  # after EKS Cluster handling. Otherwise, EKS will not be able to
  # properly delete EKS managed EC2 infrastructure such as Security Groups.
  depends_on = [
    aws_iam_role_policy_attachment.cluster_AmazonEKSClusterPolicy,
    aws_iam_role_policy_attachment.cluster_AmazonEKSComputePolicy,
    aws_iam_role_policy_attachment.cluster_AmazonEKSBlockStoragePolicy,
    aws_iam_role_policy_attachment.cluster_AmazonEKSLoadBalancingPolicy,
    aws_iam_role_policy_attachment.cluster_AmazonEKSNetworkingPolicy,
  ]
}

resource "aws_iam_role" "node" {
  name = "eks-auto-node-example"
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = ["sts:AssumeRole"]
        Effect = "Allow"
        Principal = {
          Service = "ec2.amazonaws.com"
        }
      },
    ]
  })
}

resource "aws_iam_role_policy_attachment" "node_AmazonEKSWorkerNodeMinimalPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodeMinimalPolicy"
  role       = aws_iam_role.node.name
}

resource "aws_iam_role_policy_attachment" "node_AmazonEC2ContainerRegistryPullOnly" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryPullOnly"
  role       = aws_iam_role.node.name
}

resource "aws_iam_role" "cluster" {
  name = "eks-cluster-example"
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = [
          "sts:AssumeRole",
          "sts:TagSession"
        ]
        Effect = "Allow"
        Principal = {
          Service = "eks.amazonaws.com"
        }
      },
    ]
  })
}

resource "aws_iam_role_policy_attachment" "cluster_AmazonEKSClusterPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
  role       = aws_iam_role.cluster.name
}

resource "aws_iam_role_policy_attachment" "cluster_AmazonEKSComputePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSComputePolicy"
  role       = aws_iam_role.cluster.name
}

resource "aws_iam_role_policy_attachment" "cluster_AmazonEKSBlockStoragePolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSBlockStoragePolicy"
  role       = aws_iam_role.cluster.name
}

resource "aws_iam_role_policy_attachment" "cluster_AmazonEKSLoadBalancingPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSLoadBalancingPolicy"
  role       = aws_iam_role.cluster.name
}

resource "aws_iam_role_policy_attachment" "cluster_AmazonEKSNetworkingPolicy" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonEKSNetworkingPolicy"
  role       = aws_iam_role.cluster.name
}
```

terraform.tf:

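```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # EKS Auto Mode arguments (compute_config, storage_config, elastic_load_balancing)
      # need a recent 5.x provider (5.79 or later at the time of writing)
      version = ">= 5.79"
    }
  }

  backend "s3" {
    bucket = "eks-backend-dtm" # the bucket must already exist before `terraform init`
    key    = "backend.hcl"     # object key the state file is stored under in the bucket
    region = "us-east-1"
  }
}
```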

.gitignore:

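```gitignore
variables.tf
.terraform/
# Note: this pattern also ignores .terraform.lock.hcl, which Terraform recommends committing
*.hcl
```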

variables.tf, whose contents I won't show for security reasons (it declares the subnet_1, subnet_2 and subnet_3 variables that main.tf references).
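main.tf references `var.subnet_1`, `var.subnet_2` and `var.subnet_3`, so variables.tf has to declare those three variables. The sketch below writes a skeleton for that file. Because the file is git-ignored, it assumes the subnet IDs live there as defaults. The `subnet-REPLACE_ME_n` values are placeholders, and the helper is hypothetical. It refuses to overwrite an existing file.

```shell
#!/bin/sh
# Sketch: write a variables.tf skeleton declaring the subnet variables main.tf uses.
# Defaults are placeholders; EKS needs subnets in at least two Availability Zones.
write_variables_tf() {
  target="${1:-variables.tf}"
  if [ -e "$target" ]; then
    echo "refusing to overwrite existing $target" >&2
    return 1
  fi
  : > "$target"
  for n in 1 2 3; do
    # Unquoted EOF so that $n is expanded inside the heredoc.
    cat >> "$target" <<EOF
variable "subnet_$n" {
  description = "Subnet $n for the EKS cluster"
  type        = string
  default     = "subnet-REPLACE_ME_$n"
}

EOF
  done
}

# Usage: write_variables_tf variables.tf
```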

Part 3: Connect To Cluster; Develop Kubernetes Files Locally and Then Apply Resources On Cluster

- I will go to my cluster in the AWS console and add a few add-ons to extend what the cluster can do:

- I will navigate to the Add-ons tab and choose to add more add-ons:

- I will move to the next page and click Create recommended role:

- I will move to the review page and add these add-ons:

- I will then create an IAM Access Entry:

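- I have developed my Kubernetes files:

cluster-role.yaml:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: luitrole
rules:
  # Every verb on every resource in the core ("") API group, cluster-wide.
  # That is very broad (it includes Secrets); narrow it for anything beyond a demo.
  - apiGroups:
      - ""
    resources:
      - '*'
    verbs:
      - '*'
```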

cluster-rolebinding.yaml:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: luitrolebinding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: luitrole
subjects:
  - kind: ServiceAccount
    name: luitsa
    namespace: luit
```
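Once these two manifests are applied in Part 4, kubectl impersonation is a quick way to confirm what the binding actually grants. The sketch below assumes the names `luitsa` and `luit` from these files and prints the commands unless `APPLY=1` is set. Because the ClusterRole only lists the core (`""`) API group, I would expect "yes" for pods and "no" for Deployments, which belong to the `apps` group. Pods only run with these permissions if their spec sets `serviceAccountName: luitsa`. deployment.yaml as written does not set it, so the Apache pods use the namespace's `default` service account.

```shell
#!/bin/sh
# Sketch: check what a service account may do, using kubectl impersonation (--as).
# Impersonation requires the caller to have impersonate rights (cluster admins do).
# Prints the commands by default; set APPLY=1 to run them against the cluster.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

check_sa_permissions() {
  sa_name="${1:-luitsa}"
  ns="${2:-luit}"
  as_user="system:serviceaccount:$ns:$sa_name"
  # Core ("") API group: the ClusterRole grants every verb, so expect "yes".
  run kubectl auth can-i list pods --namespace "$ns" --as "$as_user"
  # Deployments live in the "apps" API group, which the ClusterRole omits: expect "no".
  run kubectl auth can-i list deployments.apps --namespace "$ns" --as "$as_user"
  # Everything the service account may do in this namespace.
  run kubectl auth can-i --list --namespace "$ns" --as "$as_user"
}

check_sa_permissions luitsa luit
```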

deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: apache-deployment
  labels:
    app: apache
  namespace: luit
spec:
  replicas: 5
  selector:
    matchLabels:
      app: apache
  template:
    metadata:
      labels:
        app: apache
    spec:
      containers:
        - name: apache
          image: public.ecr.aws/docker/library/httpd:latest
          # Create the file the probes look for, then start Apache in the foreground
          command: ["sh", "-c", "touch /tmp/healthy && httpd-foreground"]
          # Both probes pass as long as /tmp/healthy exists; they do not check
          # that Apache is actually answering HTTP requests on port 80.
          livenessProbe:
            exec:
              command:
                - cat
                - /tmp/healthy
            initialDelaySeconds: 5
            periodSeconds: 5
          readinessProbe:
            exec:
              command:
                - cat
                - /tmp/healthy
            initialDelaySeconds: 5
            periodSeconds: 5
          resources:
            requests:
              memory: "64Mi"
              cpu: "250m"
            limits:
              memory: "128Mi"
              cpu: "500m"
          ports:
            - containerPort: 80
```
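Both probes simply run `cat /tmp/healthy` inside the container, so deleting that file is an easy way to watch them work. The sketch below assumes the deployment name and labels above and prints the commands unless `APPLY=1` is set. With `periodSeconds: 5` and the Kubernetes default `failureThreshold` of 3, the liveness probe should fail within about 15 seconds. At that point the kubelet should restart the container. The restarted container's command runs `touch /tmp/healthy` again, so the pod should become Ready once more. The readiness probe fails during the same window, so that pod is briefly removed from the Service's endpoints.

```shell
#!/bin/sh
# Sketch: trigger a liveness/readiness failure in one Apache pod and watch it recover.
# Prints the commands by default; set APPLY=1 to run them against the cluster.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

trigger_probe_failure() {
  ns="${1:-luit}"
  # kubectl picks one pod of the deployment and removes the file both probes check.
  run kubectl exec -n "$ns" deploy/apache-deployment -- rm -f /tmp/healthy
  # Watch READY drop to 0/1 and RESTARTS go up by one; press Ctrl+C to stop watching.
  run kubectl get pods -n "$ns" -l app=apache --watch
}

trigger_probe_failure luit
```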

namespace.yaml:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: luit
```

service-account.yaml:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: luitsa
  namespace: luit
```

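service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: apache
  name: luitservice
  namespace: luit
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
      # LoadBalancer Services are also allocated a node port; 30080 is pinned here
      nodePort: 30080
  selector:
    app: apache
  # LoadBalancer (rather than NodePort) so that AWS provisions a load balancer with an external hostname
  type: LoadBalancer
```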

- I will also open port 30080 on my EKS cluster security group:
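The same inbound rule can be added from the AWS CLI. The sketch below assumes the cluster name `dtmcluster` from main.tf and assumes the nodes use the cluster security group that EKS creates. It looks up that group's ID, then adds an inbound TCP rule. It prints the commands unless `APPLY=1` is set. The function name is hypothetical, and the default `0.0.0.0/0` source is best narrowed to your own IP.

```shell
#!/bin/sh
# Sketch: allow inbound TCP on a node port through the EKS cluster security group.
# Prints the AWS CLI commands by default; set APPLY=1 to execute them.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

open_node_port() {
  cluster="${1:-dtmcluster}"
  port="${2:-30080}"
  # Prefer your own address, e.g. 203.0.113.10/32, over the whole internet.
  cidr="${3:-0.0.0.0/0}"
  if [ "${APPLY:-0}" = "1" ]; then
    sg_id="$(aws eks describe-cluster --name "$cluster" \
      --query 'cluster.resourcesVpcConfig.clusterSecurityGroupId' --output text)"
  else
    printf '+ %s\n' "aws eks describe-cluster --name $cluster --query cluster.resourcesVpcConfig.clusterSecurityGroupId --output text"
    sg_id="<cluster-security-group-id>"
  fi
  run aws ec2 authorize-security-group-ingress --group-id "$sg_id" \
    --protocol tcp --port "$port" --cidr "$cidr"
}

open_node_port dtmcluster 30080
```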

Connecting To Cluster Via CLI

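- Configure the AWS CLI with:

```shell
aws configure
# Enter your access key ID, secret access key, default region (us-east-1) and output format when prompted
```

- Connect to the cluster (this adds the cluster to your local kubeconfig) with:

```shell
aws eks update-kubeconfig --region us-east-1 --name <your-cluster-name>
```

- To check that we are really connected to the cluster, I will run:

```shell
alias k=kubectl
k get all -A
```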
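main.tf sets `authentication_mode = "API"`, so kubectl only works for IAM principals that have an access entry on the cluster. If `k get all -A` is denied, check the access entry created in the console in Part 3 first. Below is a CLI sketch of that step. It assumes you want cluster-wide admin through the AWS-managed `AmazonEKSClusterAdminPolicy`. The principal ARN is a placeholder you must supply, the function name is hypothetical, and nothing runs unless `APPLY=1` is set.

```shell
#!/bin/sh
# Sketch: give an IAM principal cluster-admin access through an EKS access entry.
# Prints the AWS CLI commands by default; set APPLY=1 to execute them.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

grant_cluster_admin() {
  cluster="${1:-dtmcluster}"
  # IAM user or role ARN. For an assumed role, pass the role ARN
  # (arn:aws:iam::<account>:role/<name>), not the sts assumed-role ARN.
  principal_arn="$2"
  if [ -z "$principal_arn" ]; then
    echo "usage: grant_cluster_admin <cluster> <principal-arn>" >&2
    return 1
  fi
  run aws eks create-access-entry --cluster-name "$cluster" --principal-arn "$principal_arn"
  run aws eks associate-access-policy --cluster-name "$cluster" \
    --principal-arn "$principal_arn" \
    --policy-arn arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy \
    --access-scope type=cluster
}

# Usage (placeholder ARN): grant_cluster_admin dtmcluster arn:aws:iam::111122223333:user/example-user
```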

Part 4: Test Kubernetes Deployment

- With my files from earlier built out, I will create the resources one by one, in dependency order (the namespace first, since the other namespaced resources are created inside it):

```shell
k create -f namespace.yaml
k create -f service-account.yaml
k create -f cluster-role.yaml
k create -f cluster-rolebinding.yaml

# Give my service account an IAM role that allows access to EFS (IAM Roles for Service Accounts, IRSA)
sh irsa-oidc.sh

k create -f deployment.yaml
k create -f service.yaml
```

- Our Apache deployment is exposed by a LoadBalancer Service, which creates an AWS load balancer. To get the load balancer's DNS name, I will run:

```shell
kubectl get svc -n luit
# Copy the DNS name shown in the EXTERNAL-IP column and paste it into your browser
```
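On AWS, the EXTERNAL-IP column holds the load balancer's DNS name rather than an IP address. It can take a few minutes for that name to appear and then resolve. The polling sketch below assumes the Service name `luitservice` in namespace `luit` and prints the commands unless `APPLY=1` is set. When it succeeds, curl should print the httpd image's default page, which reads "It works!".

```shell
#!/bin/sh
# Sketch: wait for the LoadBalancer Service's hostname, then fetch the Apache page.
# Prints the commands by default; set APPLY=1 to run them against the cluster.
check_apache() {
  svc="${1:-luitservice}"
  ns="${2:-luit}"
  jsonpath='{.status.loadBalancer.ingress[0].hostname}'
  if [ "${APPLY:-0}" != "1" ]; then
    printf '+ %s\n' "kubectl get service $svc -n $ns -o jsonpath=$jsonpath"
    printf '+ %s\n' "curl -sf http://<load-balancer-hostname>/"
    return 0
  fi
  host=""
  tries=0
  # Poll every 10 seconds (about 5 minutes at most) until AWS reports the hostname.
  while [ -z "$host" ]; do
    host="$(kubectl get service "$svc" -n "$ns" -o "jsonpath=$jsonpath")"
    [ -n "$host" ] && break
    tries=$((tries + 1))
    if [ "$tries" -ge 30 ]; then echo "no load balancer hostname yet" >&2; return 1; fi
    sleep 10
  done
  tries=0
  # DNS for a new load balancer can lag behind the hostname, so retry curl too.
  until curl -sf "http://$host/"; do
    tries=$((tries + 1))
    if [ "$tries" -ge 30 ]; then echo "load balancer not answering yet" >&2; return 1; fi
    sleep 10
  done
}

check_apache luitservice luit
```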

Cleaning Up

I will push my tracked changes to my GitHub repo and merge them into the main branch:

- I will navigate to my GitHub repo and open a pull request from dev into main:

- I will clean up my resources with:

```shell
terraform destroy -auto-approve
```
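AWS recommends deleting Services that have an external address before deleting an EKS cluster. Otherwise, the load balancer created for `luitservice` may be left behind. The sketch below shows that order. It assumes it is run from the folder that holds both the manifests and the Terraform files, and it prints the commands unless `APPLY=1` is set. Whatever irsa-oidc.sh creates is not part of the Terraform configuration shown here, so it would need separate cleanup.

```shell
#!/bin/sh
# Sketch: remove Kubernetes resources that own AWS infrastructure, then destroy the cluster.
# Prints the commands by default; set APPLY=1 to execute them.
run() {
  if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '+ %s\n' "$*"; fi
}

teardown() {
  # Deleting the Service releases its AWS load balancer; kubectl waits for the
  # deletion to finish by default.
  run kubectl delete -f service.yaml --ignore-not-found
  # Removes everything left in the namespace (deployment, service account).
  run kubectl delete namespace luit --ignore-not-found
  run terraform destroy -auto-approve
}

teardown
```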
