Some companies only hire engineers who fully utilize AI. Whether this makes sense is a topic for another time. Here are some ways to wow your interviewer at these companies with your Cursor setup.

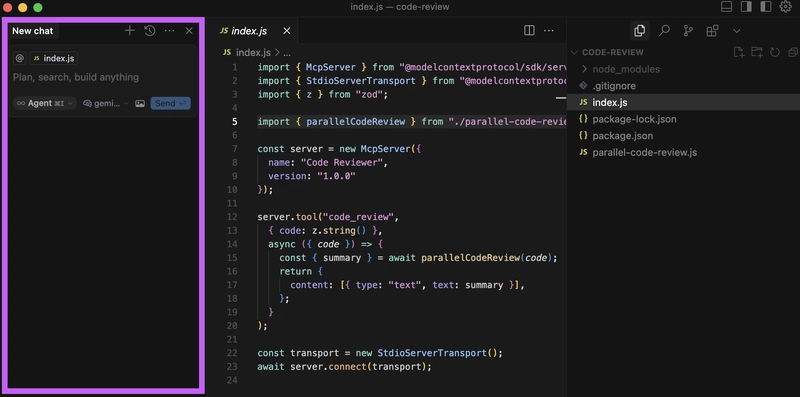

1. Have Chat on the left

What a statement! Not only did you take the extra effort to customize Cursor, but you also showed that prompting is the most important part of your workflow.

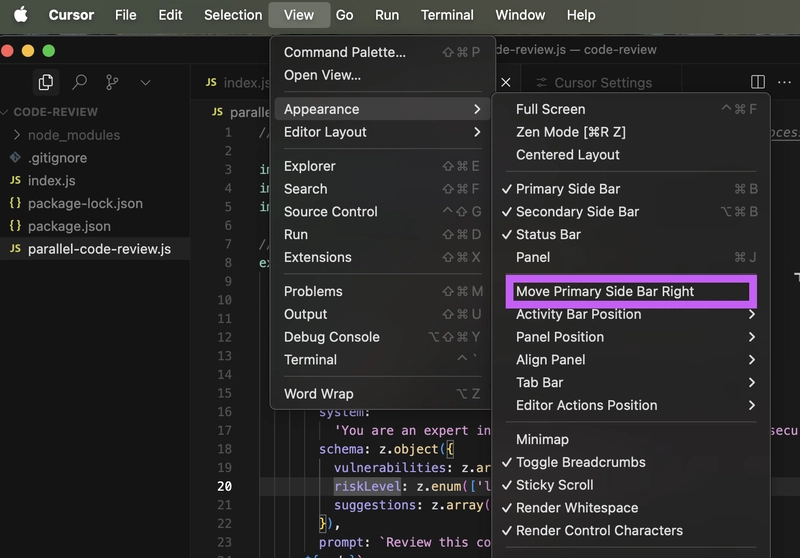

You can do this by going to View -> Appearance -> Move Primary Sidebar Right. With the primary sidebar on the right, the chat panel switches over to the left.

2. Have opinions on what model you are using

Instead of auto-selecting your model, explicitly choose the right model for the task and explain to the interviewer why you chose it.

Here's an example of what you can say today (this will soon be outdated as new models come out):

"I use Gemini 2.5 Pro for coding. Claude 3.7 is too ambitious with changes, so I can make better incremental changes with Gemini 2.5 Pro. For non-coding tasks, I use GPT 4.5. While GPT 4.5 didn't meet people's high expectations, it has outperformed other models for me on non-coding tasks."

This demonstrates to the interviewer that you have an informed opinion on the latest models and can enthusiastically talk about them.

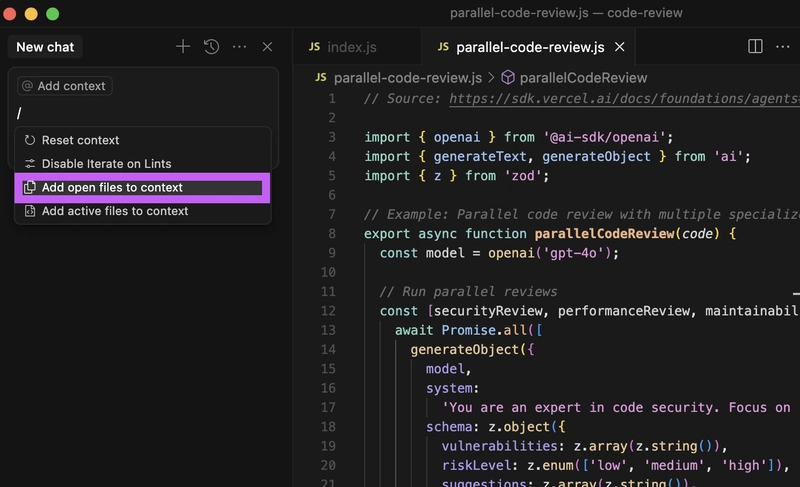

3. Add active files to context

You will most likely want to add all open files into context during the interview. Instead of manually adding each one, you can type / in the chat and then click Add open files to context.

This nifty trick will signal to the interviewer that you are not a casual.

Bonus: Use your own MCP

Build yourself a simple local MCP (Model Context Protocol) server to help you during the interview. Here is an example one you can use to review your code from different perspectives: https://dev.to/andyrewlee/how-to-build-mcp-server-that-triggers-an-agent-using-ai-sdk-pd1. This demonstrates to the interviewer that you want to give Cursor even more powers.
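
Once you have a local server, Cursor needs to know how to launch it. Here's a rough sketch of what a project-level `.cursor/mcp.json` could look like. The server name `code-review` and the script path `./mcp/review-server.js` are placeholders I made up for illustration, not something from the linked post, so swap in whatever your own server uses:

```json
{
  "mcpServers": {
    "code-review": {
      "command": "node",
      "args": ["./mcp/review-server.js"]
    }
  }
}
```

If everything is wired up correctly, the server's tools should be listed in Cursor's MCP settings, and you can call on them from chat in front of your interviewer.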
