Table of Contents

- Introduction

- What is awk?

- Core awk Commands

- Real-World Scenario: Using awk Commands

- Conclusion

- Let's Connect

Introduction

Welcome back to Day 18! Today, we are talking about a core Linux command: awk.

If you work with text files, logs, or any kind of structured output in Linux, awk is your friend. It reads its input line by line, splits each line into fields, and gives you superpowers for data extraction and automation.

Let’s get into it!

What is awk?

awk is a command-line text-processing tool used to:

- Filter and extract specific fields (columns) from files

- Format reports from structured data

- Perform pattern matching and calculations

It is widely used in shell scripting, log analysis, and server automation.
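To make "format reports" a little more concrete, here is a minimal sketch using awk's built-in printf. The input (hypothetical disk names and sizes) is piped in with the shell's printf so the snippet runs on its own; the column widths are arbitrary choices for this example.

```shell
# Hypothetical input: a disk name and its size in GB on each line.
# BEGIN runs once before any input is read, so it prints the header row.
# printf pads each column to a fixed width: %-8s is left-aligned 8 wide, %3d is right-aligned 3 wide.
printf 'disk1 120\ndisk2 80\n' |
  awk 'BEGIN { printf "%-8s %6s\n", "NAME", "SIZE" }
       { printf "%-8s %3d GB\n", $1, $2 }'
```

Because every row uses the same widths, the columns line up no matter how long each value is (up to the chosen width).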

Core awk Commands

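Before we list the core awk commands, here is the basic syntax:

```shell
awk 'pattern {action}' <filename>
```

- pattern: a condition that selects lines, such as a regular expression (/error/) or a comparison ($3 == 404). If omitted, the action runs on every line.

- action: what to do with each matching line. If omitted, awk prints the matching line.

- filename: the file to process. If omitted, awk reads standard input.

Each line is treated as a record. By default, fields are separated by whitespace (spaces or tabs) and referred to as $1, $2, and so on. $0 holds the whole line, and NF holds the number of fields, so $NF is the last field.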
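A quick way to see records, fields, and the pattern/action defaults in action is to pipe in a couple of lines. The two records below (name, age, role) are hypothetical sample data.

```shell
# NR is the current line number, NF the number of fields, $NF the last field.
printf 'alice 30 admin\nbob 25 dev\n' | awk '{ print NR, NF, $1, $NF }'

# Pattern only, no action: awk prints each matching line (here, age above 26).
printf 'alice 30 admin\nbob 25 dev\n' | awk '$2 > 26'

# Action only, no pattern: the action runs on every line.
printf 'alice 30 admin\nbob 25 dev\n' | awk '{ print $1 }'
```

The first command prints "1 3 alice admin" and "2 3 bob dev", the second prints only the alice line, and the third prints just the names.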

Here are some common awk commands and what they do:

| awk Command | Description |
|---|---|
| awk '{print $1}' file | Prints the first column of each line. Useful for quickly extracting usernames, IPs, or other fields. |
| awk '{print $1, $3}' file | Prints the first and third columns. Helpful when working with logs or other whitespace-separated data (for CSV, add -F ','). |
| awk -F ":" '{print $1}' /etc/passwd | Uses : as the field separator to extract usernames from the /etc/passwd file. |
| awk '/error/ {print $0}' file | Displays lines that contain "error" (the match is case-sensitive). A go-to when scanning logs. |
| awk '{sum += $2} END {print sum}' file | Adds up all values in the second column and prints the total. Perfect for quick column math. |
| awk 'NR==1' file | Prints the first line only. Think of it as grabbing a file's header. |
| awk 'NF' file | Skips empty (and whitespace-only) lines, keeping the output neat and clean. |
| awk '$NF == 404 {count++} END {print count}' file | Counts how many lines end with 404 (great for web logs). |
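The commands in the table combine naturally. As a sketch, this sums a column in a small CSV file while skipping its header row; the CSV content is hypothetical and piped in so the snippet runs on its own.

```shell
# Hypothetical CSV with a header row.
# -F ',' splits fields on commas; NR > 1 skips the header line before summing.
printf 'name,score\nann,90\nben,85\n' |
  awk -F ',' 'NR > 1 { sum += $2 } END { print sum }'
```

This prints 175. Without NR > 1, awk would also try to add the header text "score", which it treats as 0.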

Real-World Scenario: Using awk Commands

Say you work as a junior Linux admin. Your team hands you a log file and asks:

"Can you find out how many people got a 404 error and which pages they were trying to visit?"

Don't panic. You've got awk!

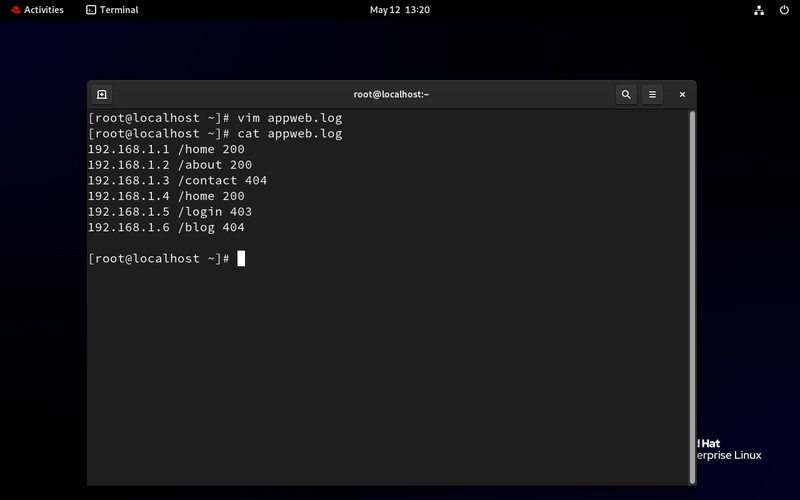

- Let's create a simple log file:

```shell
vim appweb.log
```

Paste the following lines into the file, then save and quit with :wq:

```
192.168.1.1 /home 200
192.168.1.2 /about 200
192.168.1.3 /contact 404
192.168.1.4 /home 200
192.168.1.5 /login 403
192.168.1.6 /blog 404
```
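If you would rather not open an editor, a here-document can write the same six lines in one step. Note that this overwrites appweb.log if it already exists.

```shell
# Write the sample log without opening vim.
# Quoting 'EOF' stops the shell from expanding anything inside the here-document.
cat > appweb.log <<'EOF'
192.168.1.1 /home 200
192.168.1.2 /about 200
192.168.1.3 /contact 404
192.168.1.4 /home 200
192.168.1.5 /login 403
192.168.1.6 /blog 404
EOF
```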

What is in this file? Each line has three fields separated by spaces:

- $1: IP address (who visited)

- $2: Page (what they tried to access)

- $3: Status code (whether the request succeeded)

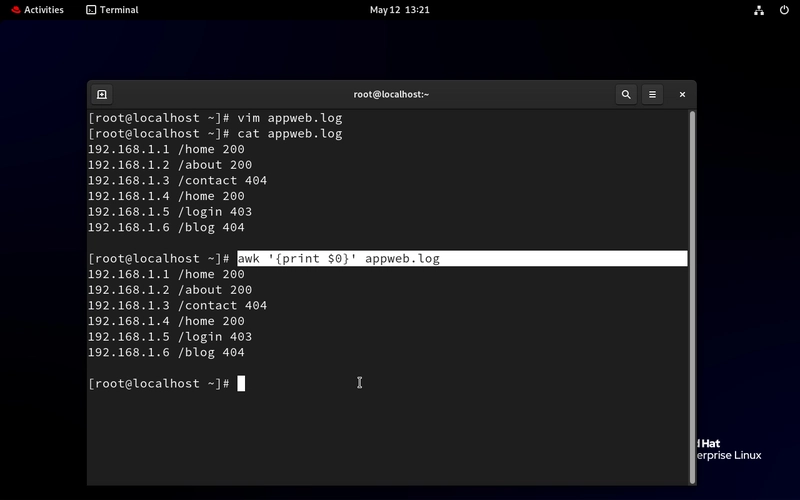

- First, show the whole file:

```shell
awk '{print $0}' appweb.log   # $0 is the entire line, so this prints every line of the file
```

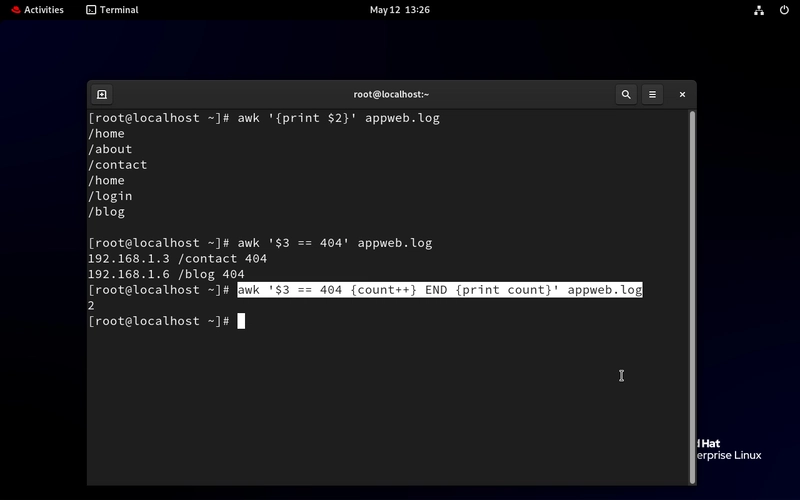

- Show only the page paths:

```shell
awk '{print $2}' appweb.log   # $2 is the 2nd field on each line: the page, like /home or /about
```
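/home shows up twice in that output. A common awk idiom prints each page only once; the sketch below pipes in three lines taken from appweb.log so it runs on its own.

```shell
# seen[$2]++ evaluates to 0 (false) the first time a path appears, then increments,
# so !seen[$2]++ is true only on a path's first appearance.
printf '%s\n' '192.168.1.1 /home 200' '192.168.1.2 /about 200' '192.168.1.4 /home 200' |
  awk '!seen[$2]++ { print $2 }'
# Against the full file: awk '!seen[$2]++ { print $2 }' appweb.log
```

This prints /home and /about, each once, in the order they first appear.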

- Show only the 404 errors:

```shell
awk '$3 == 404' appweb.log   # $3 is the status code; with no action given, awk prints each matching line
```
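Patterns are not limited to exact matches. Since $3 is numeric, a comparison such as $3 >= 400 catches every client or server error at once. The lines below are taken from appweb.log.

```shell
# $3 >= 400 matches any 4xx or 5xx status code (here, the 403 and the 404).
printf '%s\n' '192.168.1.4 /home 200' '192.168.1.5 /login 403' '192.168.1.6 /blog 404' |
  awk '$3 >= 400'
# Against the full file: awk '$3 >= 400' appweb.log
```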

- Now count how many 404 errors occurred:

```shell
awk '$3 == 404 {count++} END {print count}' appweb.log
# If column 3 equals 404, add 1 to count; after the last line is read, END prints count
```
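One small catch with the count command above: if no line matches, count is never set, and print count outputs an empty line. Adding 0 forces a numeric result. The two input lines below (both 200s, from appweb.log) illustrate the no-match case.

```shell
# No 404s in this input, so count stays unset; count+0 turns that into 0.
printf '%s\n' '192.168.1.1 /home 200' '192.168.1.2 /about 200' |
  awk '$3 == 404 {count++} END {print count+0}'
```

This prints 0 instead of a blank line, which is easier to read and safer to use in a script.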

- We can also show just the IP and page for each 404 error:

```shell
awk '$3 == 404 {print $1, $2}' appweb.log
```
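To answer "which pages" with counts, an associative array keyed by the page can tally 404s per path. This is a sketch; the input lines are taken from appweb.log and piped in so it runs on its own.

```shell
# hits[$2]++ adds 1 to the counter for that page each time a 404 line is seen.
# "for (p in hits)" visits keys in no guaranteed order, so the output goes through sort.
printf '%s\n' '192.168.1.3 /contact 404' '192.168.1.5 /login 403' '192.168.1.6 /blog 404' |
  awk '$3 == 404 {hits[$2]++} END {for (p in hits) print p, hits[p]}' | sort
# Against the full file: replace the printf pipe with appweb.log as awk's file argument
```

This prints "/blog 1" and "/contact 1". On a real log with repeated failures, the counts show which missing pages are hit most often.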

Conclusion

awk is one of those tools that seems tricky at first but becomes second nature with practice. The awk command is useful for:

- Reviewing logs on a Linux server

- Investigating failed requests on a web app

- Auditing what users are accessing

You will use awk to filter, analyze, and summarize logs quickly, without needing a full-blown script.

If this was helpful, feel free to bookmark, comment, like, and follow me for Day 19!

Let's Connect!

If you want to connect or share your journey, feel free to reach out on LinkedIn.

I am always happy to learn and build with others in the tech space.

#30DaysLinuxChallenge #Redhat #RHCSA #RHCE #CloudWhistler #Linux #Rhel #Ansible #Vim #CloudComputing #DevOps #LinuxAutomation #IaC #SysAdmin #CloudEngineer
