Deep unsupervised learning using nonequilibrium thermodynamics represents a groundbreaking approach in the field of artificial intelligence, particularly in the realm of generative modeling. By leveraging principles from nonequilibrium thermodynamics, researchers have developed diffusion models that transform data into noise and back into structured data, mimicking physical processes.

This method provides a novel way to model complex data distributions, offering advantages in stability and flexibility over traditional methods such as GANs. The integration of thermodynamic concepts into machine learning not only enhances the theoretical framework but also opens new avenues for practical applications across various domains.

1. Introduction to Deep Unsupervised Learning using Nonequilibrium Thermodynamics

Overview

In the ever-evolving landscape of artificial intelligence, deep unsupervised learning has emerged as a crucial tool for understanding and generating data without labeled examples.

This technique falls under the broader category of machine learning, where models learn to find patterns and structures in data by themselves. Deep unsupervised learning is pivotal in applications ranging from image generation to anomaly detection, making it a cornerstone of modern AI research.

Nonequilibrium thermodynamics, a branch of physics that deals with systems out of thermal equilibrium, provides an intriguing lens through which we can view these learning processes. Traditionally, thermodynamics has been used to study energy transfer and transformation in physical systems.

However, its principles, such as entropy production and diffusion processes, can be metaphorically applied to understand how data transforms and evolves in neural networks.

Definition of Deep Unsupervised Learning

Deep unsupervised learning refers to the use of deep neural networks to identify hidden patterns and features in unlabeled data.

Unlike supervised learning, which relies on labeled datasets to train models, unsupervised learning algorithms do not require pre-labeled data. Instead, they learn to represent the underlying structure of the data, often through techniques like clustering or dimensionality reduction.

This ability to extract meaningful features from raw data makes deep unsupervised learning invaluable for tasks where labeled data is scarce or expensive to obtain.

Importance in AI

The significance of deep unsupervised learning in AI cannot be overstated. It enables machines to learn from vast amounts of unstructured data, which is abundant in the real world. For instance, in computer vision, unsupervised learning can help in generating new images or enhancing existing ones.

In natural language processing, it can uncover latent semantic structures in text, improving language understanding and generation. Moreover, unsupervised learning is crucial in fields like genomics and astronomy, where labeled data is often limited, yet the need to understand complex patterns is high.

Brief Explanation of Nonequilibrium Thermodynamics

Nonequilibrium thermodynamics focuses on systems that are not in a state of thermal equilibrium, meaning they are undergoing changes and transformations. Key concepts include entropy production, which measures the increase in disorder as systems evolve, and diffusion processes, which describe how particles spread in a medium.

These concepts are particularly relevant to machine learning because they provide a framework for understanding how data can be transformed from one state to another, akin to how noise is added and removed in diffusion models.

Role in Modeling Dynamic Systems

In machine learning, nonequilibrium thermodynamics offers a powerful analogy for modeling dynamic systems. Just as physical systems evolve from one state to another through processes like diffusion, data in neural networks can be seen as transitioning from structured information to noise and back.

This perspective helps in designing algorithms that can effectively capture and generate complex data distributions, providing a more intuitive understanding of the learning process.

Motivation

The motivation behind integrating nonequilibrium thermodynamics into deep unsupervised learning stems from the need to balance model flexibility with computational tractability.

Traditional generative models, such as GANs and VAEs, often struggle with issues like mode collapse or unstable training dynamics. Diffusion models, inspired by nonequilibrium thermodynamics, offer a promising alternative by providing a stable and flexible framework for generative modeling.

Addressing the Trade-off Between Model Flexibility and Computational Tractability

One of the primary challenges in generative modeling is achieving a balance between the flexibility of the model and its computational efficiency. Highly flexible models can capture complex data distributions but may be computationally intensive to train and sample from.

Conversely, simpler models might be easier to handle but may fail to represent the intricacies of the data. Diffusion models address this trade-off by using a step-by-step process that gradually adds and removes noise, allowing for precise control over the model's complexity and computational demands.

Historical Breakthrough: Sohl-Dickstein et al. (2015)

The seminal work by Sohl-Dickstein et al. in 2015 marked a significant breakthrough in the application of nonequilibrium thermodynamics to machine learning. Their paper introduced the concept of diffusion probabilistic models, which laid the groundwork for subsequent developments in the field.

By framing the problem of generative modeling as a reverse diffusion process, they demonstrated how nonequilibrium thermodynamics could be used to design stable and effective algorithms for data generation.

Context: Evolution from Early Diffusion Models to Advanced Systems

Since the initial introduction of diffusion models, the field has seen rapid evolution. Early models were primarily focused on simple datasets and struggled with scalability.

However, advancements like the Denoising Diffusion Probabilistic Models (DDPM) by Ho et al. in 2020 have significantly improved the performance and applicability of these models. DDPMs and their successors have achieved state-of-the-art results in image synthesis, demonstrating the potential of nonequilibrium thermodynamics-inspired approaches in handling complex, high-dimensional data.

Context

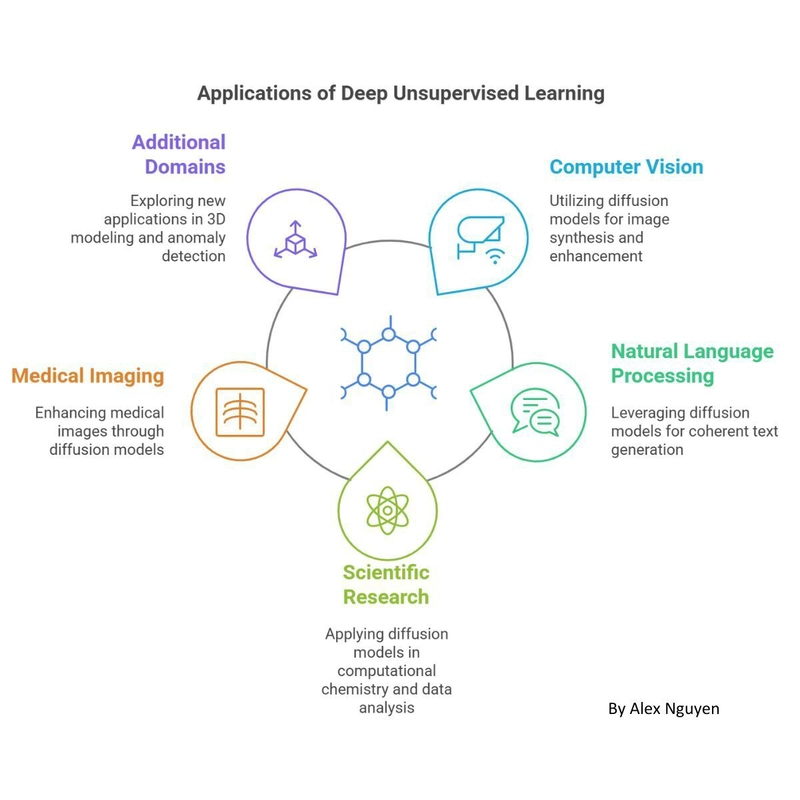

The context of deep unsupervised learning using nonequilibrium thermodynamics extends beyond theoretical advancements to practical applications across various domains. From computer vision to natural language processing, and from scientific research to medical imaging, these models are finding increasing relevance and utility.

Emerging Applications Across Computer Vision, NLP, Scientific Research, and More

In computer vision, diffusion models have been used to generate high-quality images and perform tasks like image inpainting and super-resolution.

Tools like DALL-E 2 and Stable Diffusion showcase the power of these models in creating realistic and diverse visual content. In natural language processing, diffusion-based approaches are being explored for text generation and sequence modeling, offering new ways to understand and generate human language.

Scientific research benefits from these models in areas like computational chemistry, where they aid in molecule design and protein structure prediction. In medicine, diffusion models are used for image reconstruction and denoising, enhancing the quality of under-sampled scans.

Beyond these fields, applications in 3D structure generation, anomaly detection, and reinforcement learning further illustrate the versatility and potential of deep unsupervised learning using nonequilibrium thermodynamics.

2. Theoretical Foundations of Nonequilibrium Thermodynamics

The theoretical foundations of deep unsupervised learning using nonequilibrium thermodynamics rest on a deep understanding of both the physical principles and their application to machine learning. This section delves into the key concepts of nonequilibrium thermodynamics and how they are translated into mathematical frameworks for modeling data distributions.

Key Concepts and Basics of Nonequilibrium Thermodynamics

Nonequilibrium thermodynamics provides a rich set of concepts that can be metaphorically applied to understand the dynamics of data in neural networks. By drawing parallels between physical systems and data transformations, we can gain insights into the mechanisms that drive learning and generation processes.

Entropy Production

Entropy production is a fundamental concept in nonequilibrium thermodynamics, measuring the increase in disorder as a system evolves away from equilibrium.

In machine learning, entropy production can be seen as a measure of how much information is lost or transformed as data is processed through a neural network. High entropy production indicates a significant transformation, which is crucial for understanding how noise is added and removed in diffusion models.

Entropy production also plays a role in assessing the efficiency of learning algorithms. By minimizing entropy production, models can achieve more efficient and stable training dynamics, leading to better performance in generative tasks. This concept helps in designing algorithms that balance the need for exploration (adding noise) and exploitation (removing noise) in the learning process.

Diffusion Processes

Diffusion processes describe how particles spread in a medium, moving from regions of higher concentration to lower concentration. In machine learning, diffusion processes serve as an analogy for how noise is gradually added to data, transforming it into a less structured form. This process is central to the forward pass of diffusion models, where data is iteratively corrupted until it resembles Gaussian noise.

Understanding diffusion processes helps in designing the noise schedules used in these models. By carefully controlling the rate at which noise is added, researchers can ensure that the data retains enough information to be reconstructed during the reverse process. This balance is crucial for achieving high-fidelity data generation and reconstruction.

Fluctuation Theorems and Dissipative Structures in Nonequilibrium Thermodynamics

Fluctuation theorems and dissipative structures are advanced concepts in nonequilibrium thermodynamics that explain the dynamic behavior of systems far from equilibrium.

Fluctuation theorems describe the probability of observing certain fluctuations in a system, which can be related to the likelihood of different data transformations in neural networks. Dissipative structures, on the other hand, refer to the emergence of ordered patterns in systems driven by external forces, akin to how structured data emerges from noise in diffusion models.

These concepts provide a deeper understanding of the stochastic nature of learning processes and the emergence of order from chaos. By applying fluctuation theorems, researchers can better predict and control the behavior of diffusion models, leading to more robust and reliable generative algorithms.

Connection to Machine Learning

The connection between nonequilibrium thermodynamics and machine learning lies in the ability to model data distributions using physical analogies. By treating data transformations as diffusion processes, researchers can design algorithms that effectively capture and generate complex data distributions.

Modeling Data Distributions Using Physical Diffusion Analogies

In diffusion models, data distributions are modeled by treating the data as a physical system that undergoes a diffusion process. The forward process involves gradually adding noise to the data, transforming it into a Gaussian distribution. This process is analogous to the physical diffusion of particles, where the system moves from a structured state to a disordered one.

The reverse process, on the other hand, involves learning to remove the noise step-by-step, reconstructing the original data. This process is guided by a neural network that learns the inverse of the forward diffusion, enabling the generation of new, high-fidelity data samples. By leveraging the principles of nonequilibrium thermodynamics, these models can effectively model and generate complex data distributions.

Forward Process: Gradual Noise Injection Resembling Physical Diffusion

The forward process in diffusion models is designed to mimic the physical diffusion of particles. At each step, a small amount of noise is added to the data, gradually transforming it into a Gaussian distribution. This process is controlled by a noise schedule, which dictates the rate at which noise is added over time.

The mathematical formulation of the forward process is given by:

q(xt | xt-1) = 𝒩(xt; √(1-βt) xt-1, βtI)

Where:

- βt is the noise level at step t

- I is the identity matrix

This equation describes how the data evolves from one step to the next, with the noise level increasing gradually until the data becomes indistinguishable from Gaussian noise.
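A single forward transition can be sketched in a few lines. This is a minimal illustration, assuming the standard formulation in which the mean is √(1-βt) xt-1 and the covariance is βtI; the function name `forward_step` and the example values are hypothetical and chosen only for demonstration.

```python
import math
import random

def forward_step(x_prev, beta_t, rng):
    """Draw x_t ~ N(sqrt(1 - beta_t) * x_prev, beta_t * I) for a list of floats."""
    scale = math.sqrt(1.0 - beta_t)   # shrinks the signal slightly
    sigma = math.sqrt(beta_t)         # standard deviation of the injected noise
    return [scale * v + sigma * rng.gauss(0.0, 1.0) for v in x_prev]

rng = random.Random(0)                # fixed seed so the sketch is repeatable
x0 = [1.0, -0.5, 2.0]                 # toy "data point" (illustrative values)
x1 = forward_step(x0, beta_t=0.02, rng=rng)
```

With a small βt the output stays close to the input; with βt = 1 the input is discarded entirely and the output is pure Gaussian noise.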

Mathematical Underpinnings

The mathematical underpinnings of deep unsupervised learning using nonequilibrium thermodynamics involve a combination of Markov chains, Gaussian transitions, and reparameterization tricks. These mathematical tools provide the foundation for designing and optimizing diffusion models.

Markov Chains & Gaussian Transitions

Markov chains are a fundamental concept in probability theory, describing sequences of events where the future state depends only on the current state. In diffusion models, Markov chains are used to model the transition of data from one step to the next, with each step representing a small change in the data due to the addition or removal of noise.

The transition probabilities in diffusion models are typically modeled using Gaussian distributions, which provide a tractable and flexible way to represent the noise added at each step.

The equation for the forward process, mentioned earlier, illustrates how Gaussian transitions are used to model the gradual addition of noise:

q(xt | xt-1) = 𝒩(xt; √(1-βt) xt-1, βtI)

This equation shows that the next state xt is a Gaussian random variable centered at √(1-βt) xt-1 with covariance βtI. The choice of Gaussian transitions ensures that the model remains tractable and allows for efficient computation of the forward and reverse processes.
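One way to see why the standard formulation (as in Ho et al., 2020) scales the mean by √(1-βt): if each coordinate of xt-1 has unit variance and the injected noise is independent of it, this scaling keeps the variance at one, so the chain neither blows up nor shrinks as noise accumulates. A short worked check under that unit-variance assumption:

```latex
\begin{aligned}
x_t &= \sqrt{1-\beta_t}\,x_{t-1} + \sqrt{\beta_t}\,\epsilon, \qquad \epsilon \sim \mathcal{N}(0, I) \\
\operatorname{Var}(x_t) &= (1-\beta_t)\,\operatorname{Var}(x_{t-1}) + \beta_t \\
&= (1-\beta_t)\cdot 1 + \beta_t = 1
\end{aligned}
```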

Noise Schedule (Linear or Cosine) Over T Steps

The noise schedule in diffusion models determines the rate at which noise is added to the data over time. Two common types of noise schedules are linear and cosine schedules. A linear schedule increases the noise level linearly over time, while a cosine schedule follows a cosine function, providing a smoother transition.

The choice of noise schedule can significantly impact the performance of the model. Linear schedules are simpler to implement and can be effective for many applications, but cosine schedules may offer better performance in certain scenarios by providing a more gradual increase in noise. Researchers often experiment with different noise schedules to find the optimal configuration for their specific task.
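The two schedules can be compared with a small sketch. The linear endpoints (1e-4 to 0.02) follow the values commonly associated with Ho et al. (2020), and the cosine form with offset s = 0.008 follows the variant commonly attributed to Nichol and Dhariwal (2021); both sets of constants are assumptions here, not values taken from this article, and the function names are hypothetical.

```python
import math

def linear_betas(T, beta_start=1e-4, beta_end=0.02):
    """Noise variances that rise linearly from beta_start to beta_end."""
    if T == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (T - 1)
    return [beta_start + i * step for i in range(T)]

def cosine_betas(T, s=0.008, max_beta=0.999):
    """Noise variances implied by a squared-cosine cumulative signal level."""
    def alpha_bar(t):
        # Fraction of signal remaining after t steps (unnormalized; ratios cancel).
        return math.cos((t / T + s) / (1 + s) * math.pi / 2) ** 2
    betas = []
    for t in range(1, T + 1):
        beta = 1.0 - alpha_bar(t) / alpha_bar(t - 1)
        betas.append(min(beta, max_beta))  # clip to avoid a singular final step
    return betas

lin = linear_betas(1000)
cos = cosine_betas(1000)
```

The cosine variant adds noise more slowly at the start and end of the chain, which is the "smoother transition" referred to above.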

Reparameterization Trick

The reparameterization trick is a key technique in variational inference and diffusion models, allowing for efficient computation of gradients during training. In diffusion models, the reparameterization trick is used to express the data at any step t as a function of the initial data x0 and a noise term ε.

The equation for the reparameterization trick in diffusion models is:

xt = √(α̅t) x0 + √(1 - α̅t) ε

Where:

- α̅t is the cumulative product of (1 - βs) over all steps s up to t

- ε is a standard Gaussian random variable

This equation allows for direct sampling of xt from x0 without iterating through all intermediate steps, significantly speeding up the computation.

The reparameterization trick is crucial for training diffusion models efficiently, as it enables the use of backpropagation to optimize the model parameters. By expressing the data at any step as a function of the initial data and noise, researchers can compute gradients and update the model parameters to minimize the loss function.
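The closed-form jump from x0 to xt can be sketched as follows, assuming the standard form xt = √(α̅t) x0 + √(1 - α̅t) ε with α̅t the running product of (1 - βs). The helper names `alpha_bars` and `q_sample`, the linear schedule, and the toy data are hypothetical choices for illustration.

```python
import math
import random

def alpha_bars(betas):
    """Running product of (1 - beta_s): the fraction of signal left at each step."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def q_sample(x0, t, abar, rng):
    """Sample x_t directly from x_0 (t is 1-indexed). Returns (x_t, eps)."""
    a = abar[t - 1]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * v + math.sqrt(1.0 - a) * e for v, e in zip(x0, eps)]
    return xt, eps

# Assumed linear schedule over 1000 steps (illustrative constants).
betas = [1e-4 + i * (0.02 - 1e-4) / 999 for i in range(1000)]
abar = alpha_bars(betas)
xt, eps = q_sample([1.0, -0.5, 2.0], t=500, abar=abar, rng=random.Random(0))
```

Returning the noise alongside xt is convenient because training typically needs both: the noisy input and the target the network should predict.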

3. Key Methodologies and Algorithmic Innovations

The development of deep unsupervised learning using nonequilibrium thermodynamics has led to several key methodologies and algorithmic innovations. This section explores the main approaches, including diffusion models, energy-based models, and emerging hybrid and alternative techniques.

Diffusion Models

Diffusion models represent a significant advancement in generative modeling, leveraging the principles of nonequilibrium thermodynamics to transform data into noise and back into structured data. These models have shown remarkable success in various applications, from image synthesis to text generation.

Overview & Mechanism

Diffusion models operate by iteratively adding and removing noise from data, transforming it between structured and unstructured states. The forward process involves gradually injecting noise into the data, while the reverse process learns to remove this noise, reconstructing the original data.

Forward Process

The forward process in diffusion models is designed to resemble a quasi-static process from nonequilibrium thermodynamics. At each step, a small amount of noise is added to the data, gradually transforming it into a Gaussian distribution.

This process is controlled by a noise schedule, which dictates the rate at which noise is added over time. The mathematical formulation of the forward process is given by:

q(xt | xt-1) = 𝒩(xt; √(1-βt) xt-1, βtI)

- βt is the noise level at step t

- I is the identity matrix

This equation describes how the data evolves from one step to the next, with the noise level increasing gradually until the data becomes indistinguishable from Gaussian noise.

Reverse Process

The reverse process in diffusion models involves learning to remove the noise added during the forward process, reconstructing the original data.

This is achieved using a neural network, often based on architectures like U-Net, that learns the inverse of the forward diffusion. The neural network predicts the noise at each step and subtracts it from the current state of the data, enabling the generation of high-fidelity new data samples.

The mathematical formulation of the reverse process involves learning the parameters of a neural network that approximates the reverse diffusion. The goal is to minimize the difference between the predicted noise and the actual noise added during the forward process, enabling the reconstruction of structured data from noise.
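The noise-matching goal described above can be written as a mean squared error between the true and predicted noise. The sketch below assumes the closed-form corruption xt = √(α̅t) x0 + √(1 - α̅t) ε; `predict_noise` stands in for the trained network, and both example predictors are hypothetical stand-ins rather than real models.

```python
import math
import random

def noise_prediction_loss(predict_noise, x0, t, abar_t, rng):
    """Mean squared error between the injected noise and the model's estimate."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(abar_t) * v + math.sqrt(1.0 - abar_t) * e
          for v, e in zip(x0, eps)]
    eps_hat = predict_noise(xt, t)
    return sum((e - h) ** 2 for e, h in zip(eps, eps_hat)) / len(x0)

x0 = [1.0, -0.5, 2.0]   # toy data point
abar_t = 0.5            # assumed cumulative signal level at step t

def zero_predictor(xt, t):
    # Untrained baseline: always guesses "no noise".
    return [0.0 for _ in xt]

def oracle_predictor(xt, t):
    # Cheats by using the known x0; shows the loss reaches zero for a perfect model.
    return [(v - math.sqrt(abar_t) * c) / math.sqrt(1.0 - abar_t)
            for v, c in zip(xt, x0)]

loss_zero = noise_prediction_loss(zero_predictor, x0, 10, abar_t, random.Random(0))
loss_oracle = noise_prediction_loss(oracle_predictor, x0, 10, abar_t, random.Random(0))
```

A real training loop would average this loss over many data points and randomly drawn steps t, then update the network by gradient descent.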

Mathematical Formulation

Diffusion models are trained by maximizing a variational lower bound on the log-likelihood of the data. This bound decomposes into KL divergence terms that compare each learned reverse transition with the corresponding posterior of the forward process, so minimizing those terms teaches the model to reverse the noise injection and generate samples that closely resemble the original data distribution.

When both distributions in a term are Gaussian, the KL divergence has a closed form, which makes the bound cheap to evaluate. This is an advantage over generative models like GANs, which define no explicit density and therefore offer no comparable way to score held-out data.
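The KL divergence mentioned above has a simple closed form when both distributions are Gaussian. The sketch below covers the univariate case only; the function name `gaussian_kl` is a hypothetical helper, and a full implementation would sum such terms over data dimensions and diffusion steps.

```python
import math

def gaussian_kl(mu1, sigma1, mu2, sigma2):
    """KL( N(mu1, sigma1^2) || N(mu2, sigma2^2) ) for univariate Gaussians."""
    return (math.log(sigma2 / sigma1)
            + (sigma1 ** 2 + (mu1 - mu2) ** 2) / (2.0 * sigma2 ** 2)
            - 0.5)

same = gaussian_kl(0.0, 1.0, 0.0, 1.0)      # identical distributions
shifted = gaussian_kl(1.0, 1.0, 0.0, 1.0)   # means differ by one standard deviation
```

The divergence is zero only when the two Gaussians coincide and grows as their means or variances drift apart.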

Advantages

Diffusion models offer several advantages over traditional generative models.

One of the key benefits is stable training dynamics, as the gradual addition and removal of noise provides a more controlled learning process compared to the adversarial training of GANs. Additionally, diffusion models provide a tractable lower bound on the log-likelihood, giving a principled measure of how well the model fits the data.

Another advantage is the flexibility of diffusion models across different data types, including images, video, and text. This versatility makes them suitable for a wide range of applications, from image synthesis to natural language processing.

Limitations

Despite their advantages, diffusion models also face several limitations. One of the primary challenges is the slow sampling process, which can require hundreds to thousands of steps to generate a single data sample. This slow sampling speed can be a bottleneck in applications that require real-time generation.

Additionally, diffusion models can be computationally intensive, requiring significant resources for training and sampling. This high computational cost can limit their applicability in resource-constrained environments.

Performance Metrics

The performance of diffusion models is often evaluated using metrics like the Inception score and the Fréchet Inception Distance (FID) score. For example, Ho et al. (2020) reported an Inception score of 9.46 and an FID score of 3.17 on the CIFAR-10 dataset, demonstrating the high quality of generated images.

These metrics provide quantitative insights into the quality and diversity of generated samples, helping researchers assess the effectiveness of diffusion models in capturing complex data distributions.
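FID is the Fréchet distance between two Gaussians fitted to feature statistics of real and generated images. The sketch below is a deliberately simplified version that assumes diagonal covariances; the actual metric uses Inception network features and full covariance matrices, so this hypothetical helper only illustrates the shape of the formula.

```python
import math

def frechet_distance_diag(mu1, var1, mu2, var2):
    """Frechet distance between two Gaussians with diagonal covariances.

    Simplification: with diagonal covariances the matrix square root in the
    full formula reduces to an elementwise sqrt(var1 * var2).
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum(v1 + v2 - 2.0 * math.sqrt(v1 * v2)
                   for v1, v2 in zip(var1, var2))
    return mean_term + cov_term

identical = frechet_distance_diag([0.0], [1.0], [0.0], [1.0])
different = frechet_distance_diag([0.0], [1.0], [3.0], [4.0])
```

Lower values indicate that generated samples are statistically closer to real ones, which is why a lower FID is better.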

Energy-Based Models (EBMs)

Energy-based models (EBMs) represent another approach to generative modeling, defining probability distributions through energy functions. While EBMs share some similarities with diffusion models, they also face unique challenges and offer distinct advantages.

Overview

EBMs define probability distributions over data using energy functions, where the probability of a data point is proportional to the exponential of its negative energy.

The mathematical formulation of an EBM is given by:

P(x) ∝ exp(-U(x))

- U(x) is the energy function

- P(x) is the probability of the data point x
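On a small discrete state space the normalization can be done exactly, which makes the relationship between energy and probability concrete. The energies below are made-up illustrative values, and `ebm_probabilities` is a hypothetical helper; for continuous, high-dimensional data the sum over states is not feasible.

```python
import math

def ebm_probabilities(energies):
    """Turn energies U(x) into probabilities P(x) = exp(-U(x)) / Z."""
    u_min = min(energies)  # shift for numerical stability; cancels after normalizing
    weights = [math.exp(-(u - u_min)) for u in energies]
    z = sum(weights)       # partition function over the (shifted) weights
    return [w / z for w in weights]

probs = ebm_probabilities([0.0, 1.0, 2.0])
```

Lower energy means higher probability: each unit increase in energy divides the probability by e.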

Training Challenges

One of the primary challenges in training EBMs is the intractability of the partition function, which is required to normalize the probability distribution. To overcome this challenge, researchers often use techniques like contrastive divergence, which approximate the gradient of the log-likelihood.

Despite these challenges, EBMs offer a flexible framework for generative modeling and anomaly detection. By defining the probability distribution through an energy function, EBMs can capture complex dependencies in the data, making them suitable for a wide range of applications.

Advantages & Limitations

EBMs offer several advantages, including their flexibility in modeling complex data distributions and their ability to detect anomalies. By defining the probability distribution through an energy function, EBMs can capture intricate patterns in the data, making them valuable for tasks like image generation and anomaly detection.

However, EBMs also face several limitations. One of the primary challenges is their computational intensity, as the training process can be slow and resource-intensive. Additionally, EBMs can suffer from mode collapse, where the model fails to capture the full diversity of the data distribution, leading to poor generative performance.

Emerging Hybrid & Alternative Approaches

As the field of deep unsupervised learning continues to evolve, researchers are exploring hybrid and alternative approaches that combine the strengths of different methodologies. These emerging techniques aim to enhance the performance and applicability of generative models.

Hybrid Models

Hybrid models combine the strengths of diffusion models with other generative techniques, such as GANs or autoregressive models. By integrating different approaches, researchers can leverage the stability of diffusion models with the efficiency of GANs or the sequential modeling capabilities of autoregressive models.

For example, combining diffusion models with GANs can lead to faster sampling and improved image quality, while combining them with autoregressive models can enhance their ability to model sequential data. These hybrid approaches offer a promising direction for advancing the field of generative modeling.

Other Techniques

In addition to hybrid models, researchers are exploring other techniques to enhance the training dynamics of generative models. One such approach is entropy maximization, which aims to increase the diversity of generated samples by maximizing the entropy of the model's output.

Another technique is the use of stochastic thermodynamics, which applies principles from nonequilibrium thermodynamics to improve the efficiency and stability of training processes. By incorporating these advanced concepts, researchers can design more effective and robust generative algorithms.

4. Algorithmic Implementation Details of Deep Unsupervised Learning with Nonequilibrium Thermodynamics

The implementation of deep unsupervised learning using nonequilibrium thermodynamics involves several key components, including the forward and reverse diffusion processes, neural network parameterization, and training strategies. This section provides a detailed overview of these implementation details.

Forward Diffusion Process

The forward diffusion process is a critical component of diffusion models, responsible for gradually adding noise to the data. This process is designed to mimic the physical diffusion of particles, transforming the data into a Gaussian distribution over time.

Step-by-Step Noise Injection

The forward diffusion process involves iteratively adding noise to the data according to a predetermined noise schedule. At each step, a small amount of noise is added, gradually transforming the data into a less structured form.

The mathematical formulation of the forward process is given by:

q(xt | xt-1) = 𝒩(xt; √(1-βt) xt-1, βtI)

- βt is the noise level at step t

- I is the identity matrix

This equation describes how the data evolves from one step to the next, with the noise level increasing gradually until the data becomes indistinguishable from Gaussian noise.
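The claim that the data ends up indistinguishable from Gaussian noise can be checked without any random sampling by tracking the mean and variance of one coordinate through the whole chain. The sketch assumes transitions with mean √(1-βt) xt-1 and variance βt, plus an illustrative linear schedule; `propagate_moments` is a hypothetical helper.

```python
import math

def propagate_moments(x0, betas):
    """Track the mean and variance of x_t given a fixed scalar x_0."""
    mean, var = x0, 0.0
    for beta in betas:
        mean = math.sqrt(1.0 - beta) * mean   # signal shrinks each step
        var = (1.0 - beta) * var + beta       # noise variance accumulates
    return mean, var

T = 1000
betas = [1e-4 + i * (0.02 - 1e-4) / (T - 1) for i in range(T)]  # assumed schedule
mean_T, var_T = propagate_moments(3.0, betas)
```

After the final step the mean is close to zero and the variance is close to one, regardless of the starting value, which is what makes a standard Gaussian a suitable starting point for generation.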

Equation Highlight

The equation for the forward diffusion process highlights the gradual addition of noise over time:

q(xt | xt-1) = 𝒩(xt; √(1-βt) xt-1, βtI)

This equation is central to the implementation of diffusion models, as it defines the transition probabilities between consecutive steps in the forward process.

Reverse Diffusion Process

The reverse diffusion process is responsible for learning to remove the noise added during the forward process, reconstructing the original data. This process is guided by a neural network that learns the inverse of the forward diffusion, enabling the generation of new, high-fidelity data samples.

Neural Network Parameterization

The reverse diffusion process is parameterized using a neural network, often based on architectures like U-Net. The neural network learns to predict the noise at each step and subtract it from the current state of the data, enabling the reconstruction of structured data from noise.

The outputs of the neural network define each reverse transition pθ(xt-1 | xt):

- μθ - the mean

- Σθ - the covariance

The network weights θ are learned during training to minimize the difference between the predicted noise and the actual noise added during the forward process.
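One denoising step can be sketched as follows. This assumes the noise-prediction parameterization of Ho et al. (2020), where the mean is computed from the predicted noise and the covariance is fixed to βtI rather than learned; that fixed-variance choice is a simplification of the learned Σθ described above, and `reverse_step` is a hypothetical helper name.

```python
import math
import random

def reverse_step(xt, t, eps_hat, betas, abar, rng):
    """Sample x_{t-1} from x_t given a noise estimate eps_hat (t is 1-indexed)."""
    beta = betas[t - 1]
    coef = beta / math.sqrt(1.0 - abar[t - 1])
    # Mean of the reverse transition: remove the predicted noise, then rescale.
    mean = [(v - coef * e) / math.sqrt(1.0 - beta) for v, e in zip(xt, eps_hat)]
    if t == 1:
        return mean                      # no noise is added on the final step
    sigma = math.sqrt(beta)              # assumed fixed variance beta_t
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]

# Toy two-step chain with illustrative values.
betas = [0.1, 0.2]
abar = [0.9, 0.9 * 0.8]
x0 = [1.0, -0.5]
eps = [0.3, -1.2]
x1 = [math.sqrt(abar[0]) * v + math.sqrt(1.0 - abar[0]) * e for v, e in zip(x0, eps)]
recovered = reverse_step(x1, 1, eps, betas, abar, random.Random(0))
```

When the noise estimate is exact, the last step recovers the original data point; a trained network only approximates that estimate, so generated samples are new points rather than copies.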

Learning Objective

The learning objective of the reverse diffusion process is to minimize the regression error and KL divergence between the forward and reverse processes. This ensures that the model learns to accurately reverse the noise injection process, enabling the generation of new data samples that closely resemble the original data distribution.

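The training objective is typically formulated as a variational lower bound on the log-likelihood. Sohl-Dickstein et al. obtain it with Jensen's inequality, following an approach similar to annealed importance sampling and the Jarzynski equality, which provides a tractable way to optimize the model parameters.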

Training Strategies

The training of diffusion models involves several strategies to optimize the model's performance and efficiency. These strategies include the use of variational lower bounds, multi-scale architectures, and advanced optimization techniques.

Use of Variational Lower Bound

The variational lower bound, whose construction parallels the Jarzynski equality, provides a tractable way to optimize the model parameters. By maximizing this bound on the log-likelihood (equivalently, minimizing its negative), researchers can ensure that the model learns to accurately reverse the noise injection process, enabling the generation of high-fidelity data samples.

The variational lower bound is a key component of the training process, as it allows for efficient computation of gradients and optimization of the model parameters.
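Written out, the bound compares the learned reverse trajectory with the fixed forward trajectory. The form below is the standard one for a Markov forward process q and a learned reverse process pθ with prior p(xT); the notation is an assumption chosen to match the symbols used in this article.

```latex
\log p_\theta(x_0) \;\ge\;
\mathbb{E}_{q(x_{1:T} \mid x_0)}
\left[
  \log p(x_T)
  + \sum_{t=1}^{T} \log \frac{p_\theta(x_{t-1} \mid x_t)}{q(x_t \mid x_{t-1})}
\right]
```

The inequality follows from Jensen's inequality applied to the logarithm of an expectation over forward trajectories.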

Multi-Scale Architectures

Multi-scale architectures, such as U-Net with time-conditional layers, are commonly used in diffusion models to capture the hierarchical structure of the data. These architectures enable the model to learn features at different scales, improving its ability to reconstruct structured data from noise.

By incorporating multi-scale architectures, researchers can enhance the performance and robustness of diffusion models, enabling them to generate high-quality data samples across a wide range of applications.
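Time conditioning is often implemented by turning the step index t into a vector that the network's layers can consume. The sketch below uses sinusoidal features, a common choice borrowed from Transformer position encodings; the function name, the `max_period` constant, and the exact frequency spacing are assumptions, and implementations differ in these details.

```python
import math

def timestep_embedding(t, dim, max_period=10000.0):
    """Map a step index to a vector of sines and cosines (dim must be even)."""
    half = dim // 2
    # Geometrically spaced frequencies, from 1 down toward 1 / max_period.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]

emb_start = timestep_embedding(0, 8)
emb_mid = timestep_embedding(37, 16)
```

In a U-Net, a vector like this is typically passed through a small learned projection and added to the feature maps of each block, so the same weights can behave differently at different noise levels.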

5. Deep Unsupervised Learning Applications Across Domains

The applications of deep unsupervised learning using nonequilibrium thermodynamics span a wide range of domains, from computer vision to natural language processing, and from scientific research to medical imaging. This section explores the key applications and their impact on various fields.

Computer Vision

In the field of computer vision, diffusion models have shown remarkable success in tasks like image synthesis and inpainting. These models leverage the principles of nonequilibrium thermodynamics to generate high-quality images and enhance existing ones.

Image Synthesis & Inpainting

Diffusion models have been used to generate high-quality images and perform tasks like image inpainting and super-resolution. Tools like DALL-E 2 and Stable Diffusion showcase the power of these models in creating realistic and diverse visual content.

For example, DALL-E 2 uses diffusion models to generate images from textual descriptions, enabling users to create visually compelling images based on natural language inputs. Stable Diffusion, on the other hand, is a text-to-image model that runs the diffusion process in a compressed latent space to reduce computational cost, and it also supports inpainting, allowing users to fill in missing parts of an image with realistic content.

Quantitative Metrics

The performance of diffusion models in computer vision is often evaluated using quantitative metrics like the Inception score and the Fréchet Inception Distance (FID) score. As noted earlier, Ho et al. (2020) reported an Inception score of 9.46 and an FID score of 3.17 on the CIFAR-10 dataset. A higher Inception score and a lower FID both indicate better samples, so together they summarize the quality and diversity of generated images.

Natural Language Processing

In the field of natural language processing, diffusion models are being explored for text generation and sequence modeling. These models offer new ways to understand and generate human language, leveraging the principles of nonequilibrium thermodynamics.

Text Generation

Diffusion models have been used to generate coherent and contextually relevant text, improving the quality of language generation tasks. By treating text as a sequence of tokens and applying diffusion processes, researchers can generate text that closely resembles human-written content.

For example, diffusion-based approaches have been used to generate stories, poems, and dialogues, showcasing their potential in creative writing and conversational AI. These models offer a promising direction for advancing the field of natural language generation.

Temporal Data Modeling

In addition to text generation, diffusion models are being explored for temporal data modeling, including time-series forecasting. By applying diffusion processes to sequential data, researchers can capture the temporal dependencies and generate accurate forecasts.

Applications in weather prediction and financial data analysis demonstrate the potential of diffusion models in modeling and forecasting temporal data. These models offer a flexible and powerful framework for understanding and predicting complex time-series patterns.

Scientific & Medical Fields

In the scientific and medical fields, diffusion models are finding increasing relevance and utility. From computational chemistry to medical imaging, these models are aiding in research and enhancing the quality of data analysis.

Computational Chemistry

In computational chemistry, diffusion models are used for molecule design and protein structure prediction. By treating molecules as data points and applying diffusion processes, researchers can generate new molecular structures and predict their properties.

For example, diffusion models have been used to design novel drug compounds and predict their binding affinities, aiding in the discovery of new pharmaceuticals. These models offer a powerful tool for advancing research in computational chemistry and drug discovery.

Medical Imaging

In medical imaging, diffusion models are used for image reconstruction and denoising, enhancing the quality of under-sampled scans. By applying diffusion processes to medical images, researchers can remove noise and artifacts, improving the diagnostic accuracy of imaging techniques.

For example, diffusion models have been used to enhance the quality of MRI and CT scans, enabling more accurate diagnosis and treatment planning. These models offer a promising direction for advancing the field of medical imaging and improving patient care.

Additional Domains

Beyond the fields mentioned above, diffusion models are finding applications in additional domains, including 3D structure generation, anomaly detection, and reinforcement learning enhancements. These models offer a versatile and powerful framework for addressing a wide range of challenges.

3D Structure Generation

In the field of 3D structure generation, diffusion models are used to generate realistic and diverse 3D models. By treating 3D structures as data points and applying diffusion processes, researchers can generate new 3D models that closely resemble real-world objects.

For example, diffusion models have been used to generate 3D models of furniture, vehicles, and architectural designs, showcasing their potential in computer-aided design and manufacturing. These models offer a promising direction for advancing the field of 3D structure generation.

Anomaly Detection

In the field of anomaly detection, diffusion models are used to identify unusual patterns and outliers in data. By treating data as a distribution and applying diffusion processes, researchers can detect anomalies and deviations from the norm.

For example, diffusion models have been used to detect fraud in financial transactions and identify defects in manufacturing processes, showcasing their potential in anomaly detection and quality control. These models offer a powerful tool for enhancing the accuracy and efficiency of anomaly detection techniques.
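One common way to turn a denoising model into an anomaly detector is to noise an input, denoise it, and measure how far the reconstruction lands from the original. The sketch below illustrates that idea under strong assumptions: normal data is taken to be Gaussian with known mean `mu` and standard deviation `sigma`, so the ideal denoiser has a closed form and no network is needed. The function name and all parameters are hypothetical.

```python
import numpy as np

def anomaly_score(x, mu, sigma, alpha_bar, rng, n_draws=32):
    """Mean squared error between x and its noised-then-denoised reconstruction."""
    a = np.sqrt(alpha_bar)
    # Closed-form posterior-mean gain for data ~ N(mu, sigma^2 I) (toy assumption).
    gain = a * sigma**2 / (alpha_bar * sigma**2 + 1.0 - alpha_bar)
    errors = []
    for _ in range(n_draws):
        eps = rng.normal(size=x.shape)
        x_t = a * x + np.sqrt(1.0 - alpha_bar) * eps   # forward noising step
        x0_hat = mu + gain * (x_t - a * mu)            # ideal denoiser E[x0 | x_t]
        errors.append(np.mean((x - x0_hat) ** 2))
    return float(np.mean(errors))

rng = np.random.default_rng(0)
inlier = np.zeros(8)          # lies at the centre of the assumed data distribution
outlier = 6.0 * np.ones(8)    # lies far from it

inlier_score = anomaly_score(inlier, mu=0.0, sigma=1.0, alpha_bar=0.5, rng=rng)
outlier_score = anomaly_score(outlier, mu=0.0, sigma=1.0, alpha_bar=0.5, rng=rng)
```

The denoiser pulls every input back toward the region where training data lives, so the outlier is reconstructed poorly and receives a much higher score than the inlier. A practical system would replace the closed-form denoiser with a trained network and calibrate a threshold on held-out data.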

Reinforcement Learning Enhancements

In the field of reinforcement learning, diffusion models are being explored to enhance the training and performance of agents. By applying diffusion processes to the state and action spaces, researchers can improve the exploration and exploitation of reinforcement learning algorithms.

For example, diffusion models have been used to enhance the exploration of agents in complex environments, leading to faster learning and better performance. These models offer a promising direction for advancing the field of reinforcement learning and improving the capabilities of autonomous agents.

6. Experimental Results and Comparative Analysis of Deep Unsupervised Learning with Nonequilibrium Thermodynamics

The experimental results and comparative analysis of deep unsupervised learning using nonequilibrium thermodynamics provide valuable insights into the performance and effectiveness of these models. This section explores the benchmark performance, quantitative insights, and empirical data that highlight the strengths and limitations of diffusion models.

Benchmark Performance

The benchmark performance of diffusion models is often evaluated using state-of-the-art log-likelihood and image quality metrics. These metrics provide a quantitative assessment of the model's ability to capture complex data distributions and generate high-fidelity data samples.

State-of-the-Art Log-Likelihood and Image Quality Metrics

Diffusion models have reported strong results on standard benchmarks, including MNIST and CIFAR-10. For example, Ho et al. (2020) reported an Inception score of 9.46 and an FID of 3.17 on unconditional CIFAR-10, which was a state-of-the-art FID at the time of publication.

These metrics provide a quantitative assessment of the model's performance, helping researchers compare the effectiveness of diffusion models against other generative techniques like GANs, VAEs, and autoregressive models.
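Log-likelihoods for image models are usually reported in bits per dimension so that results are comparable across image sizes. The conversion from a negative log-likelihood in nats is a one-liner. The function name below is hypothetical and the input value is made up purely to show the arithmetic.

```python
import math

def nats_to_bits_per_dim(nll_nats, num_dims):
    """Convert a per-example negative log-likelihood in nats to bits per dimension."""
    return nll_nats / (num_dims * math.log(2.0))

cifar_dims = 32 * 32 * 3  # a CIFAR-10 image has 3072 dimensions

# Made-up NLL chosen so the result is exactly 3 bits/dim (illustration only).
example_nll = 3.0 * cifar_dims * math.log(2.0)
example_bpd = nats_to_bits_per_dim(example_nll, cifar_dims)
```

Lower values are better: fewer bits per dimension means the model assigns higher probability to held-out data.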

Comparative Studies Against GANs, VAEs, and Autoregressive Models

Comparative studies have shown that diffusion models offer several advantages over traditional generative models. For example, diffusion models exhibit more stable training dynamics compared to GANs, which can suffer from mode collapse and adversarial training instability.

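Additionally, diffusion models, like VAEs, are trained by maximizing a variational lower bound on the log-likelihood rather than the exact value. The difference is that the forward (noising) process is fixed rather than learned, which simplifies the objective, and continuous-time formulations allow exact likelihood computation through the associated probability-flow ODE (Song et al., 2021). Autoregressive models, on the other hand, provide exact likelihoods and capture complex dependencies in sequential data, but they generate one element at a time, which limits parallelization and computational efficiency during sampling.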
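For reference, the likelihood objective used in the formulations of Sohl-Dickstein et al. (2015) and Ho et al. (2020) can be written as a variational bound. The notation is the standard one and is an assumption relative to this article: q is the fixed forward (noising) process, p_θ is the learned reverse process, and T is the number of diffusion steps.

```latex
\log p_\theta(x_0)
  \;\ge\;
  \mathbb{E}_{q(x_{1:T} \mid x_0)}
  \left[ \log \frac{p_\theta(x_{0:T})}{q(x_{1:T} \mid x_0)} \right]
  =
  \mathbb{E}_{q}
  \left[ \log p(x_T)
       + \sum_{t=1}^{T} \log \frac{p_\theta(x_{t-1} \mid x_t)}{q(x_t \mid x_{t-1})}
  \right]
```

The right-hand side is what gets optimized and reported, so published log-likelihood numbers for these models are bounds unless stated otherwise.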

These comparative studies highlight the strengths and limitations of diffusion models, providing valuable insights into their applicability and performance across different tasks and datasets.

Quantitative Insights

Quantitative insights into the performance of diffusion models provide a deeper understanding of their strengths and limitations. These insights include improvements in sampling efficiency, reductions in computational cost, and advancements in model architecture.

Improvements in Sampling Efficiency with Techniques Like Rectified Flow

One of the key challenges in diffusion models is the slow sampling process, which can require hundreds to thousands of steps to generate a single data sample. To address this challenge, researchers have developed techniques like rectified flow, which aim to improve the sampling efficiency of diffusion models.

Rectified flow (Liu et al., 2022) takes a different route from a stochastic denoising chain: it trains a velocity field for an ordinary differential equation that transports noise samples to data samples along paths that are as straight as possible. Because nearly straight paths can be integrated accurately with a few coarse steps, sampling requires far fewer model evaluations, which enhances the applicability of these models in real-time applications.
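The following sketch shows the two ingredients of rectified flow as formulated by Liu et al. (2022): the straight-line interpolation used to build training targets, and Euler integration of the learned velocity field at sampling time. No network is trained here. The constant field `straight_velocity` is a hypothetical stand-in for an ideal, perfectly straightened model, and all function names are invented for illustration.

```python
import numpy as np

def rectified_flow_pair(noise, data, t):
    """Training pair: a point on the straight line from noise to data, and its target velocity."""
    x_t = (1.0 - t) * noise + t * data
    target_velocity = data - noise
    return x_t, target_velocity

def euler_sample(velocity, start, n_steps):
    """Integrate dx/dt = velocity(x, t) from t = 0 to t = 1 with fixed Euler steps."""
    x = np.array(start, dtype=float)
    dt = 1.0 / n_steps
    for i in range(n_steps):
        x = x + dt * velocity(x, i * dt)
    return x

# Hypothetical ideal case: every point moves along the same straight line.
shift = np.array([2.0, -1.0])

def straight_velocity(x, t):
    return shift

start = np.zeros(2)
one_step = euler_sample(straight_velocity, start, n_steps=1)
many_steps = euler_sample(straight_velocity, start, n_steps=100)

x_mid, v_target = rectified_flow_pair(np.zeros(2), shift, t=0.5)
```

When the trajectories are straight, a single Euler step lands on the same point as one hundred steps. Real learned fields are only approximately straight, so the number of steps needed in practice depends on how well the straightening worked.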

Reduction in Computational Cost Through Optimized Noise Schedules

Another challenge in diffusion models is their high computational cost, which can limit their applicability in resource-constrained environments. To address this challenge, researchers have developed optimized noise schedules that reduce the computational demands of training and sampling.

By carefully designing the noise schedule, researchers can achieve a balance between model flexibility and computational efficiency, enabling the use of diffusion models in a wider range of applications. These optimized noise schedules provide a promising direction for advancing the field of deep unsupervised learning and improving the scalability of diffusion models.
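As an illustration of what designing a noise schedule means, the sketch below compares two widely used choices: the linear schedule of Ho et al. (2020) and the cosine schedule of Nichol and Dhariwal (2021). It computes the cumulative signal level, usually written ᾱ_t, which tells how much of the original data survives after t noising steps. The function names are hypothetical, and the snippet only compares schedules; it does not by itself measure computational cost.

```python
import numpy as np

def linear_alpha_bar(T=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal level for a linear beta schedule (defaults follow Ho et al., 2020)."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def cosine_alpha_bar(T=1000, s=0.008):
    """Cumulative signal level for the cosine schedule (Nichol and Dhariwal, 2021)."""
    steps = np.arange(T + 1) / T
    f = np.cos((steps + s) / (1.0 + s) * np.pi / 2.0) ** 2
    return (f / f[0])[1:]   # drop t = 0, where the signal level is 1

linear = linear_alpha_bar()
cosine = cosine_alpha_bar()

# Signal remaining halfway through the forward process under each schedule.
linear_mid = linear[499]
cosine_mid = cosine[499]
```

Halfway through the process the cosine schedule retains noticeably more signal than the linear one, so fewer steps are spent on inputs that are already almost pure noise. Choices like this affect how many steps are useful, which is one lever on training and sampling cost.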

Empirical Tables & Graphs

Empirical tables and graphs provide a visual representation of the performance and effectiveness of diffusion models. These visualizations help researchers and practitioners assess the strengths and limitations of diffusion models across different datasets and tasks.

Summary Tables Detailing Performance on CIFAR-10, MNIST, and Other Datasets

Summary tables provide a comprehensive overview of the performance of diffusion models on various datasets, including CIFAR-10 and MNIST. These tables detail the log-likelihood, Inception score, and FID score of diffusion models, enabling researchers to compare their performance against other generative techniques.

For example, a summary table might show that diffusion models achieve an Inception score of 9.46 and an FID score of 3.17 on the CIFAR-10 dataset, outperforming GANs and VAEs in terms of image quality and diversity. These tables provide valuable insights into the strengths and limitations of diffusion models, guiding researchers in their selection and optimization of generative models.

Graphs Illustrating Sampling Efficiency and Computational Cost

Graphs provide a visual representation of the sampling efficiency and computational cost of diffusion models. These graphs illustrate the trade-offs between model flexibility and computational demands, helping researchers optimize the performance of diffusion models.

For example, a graph might show that the use of rectified flow reduces the number of steps required for sampling, improving the efficiency of diffusion models. Another graph might illustrate the impact of optimized noise schedules on the computational cost of training and sampling, highlighting the potential for reducing resource demands.

These graphs provide a clear and concise visualization of the performance and effectiveness of diffusion models, enabling researchers to make informed decisions about their use and optimization.

7. Challenges, Limitations, and Future Directions

The challenges, limitations, and future directions of deep unsupervised learning using nonequilibrium thermodynamics provide a roadmap for advancing the field. This section explores the key challenges, ongoing research, and potential future developments in diffusion models and related techniques.

Challenges

The challenges in deep unsupervised learning using nonequilibrium thermodynamics include computational cost, sampling speed, and theoretical questions. These challenges highlight the areas where further research and development are needed to enhance the performance and applicability of diffusion models.

Computational Cost

One of the primary challenges in diffusion models is their high computational cost, which can limit their applicability in resource-constrained environments. The training and sampling processes of diffusion models can be resource-intensive, requiring significant computational power and memory.

To address this challenge, researchers are exploring techniques like optimized noise schedules and multi-scale architectures to reduce the computational demands of diffusion models. By improving the efficiency of training and sampling, researchers can enhance the scalability and applicability of diffusion models across a wider range of applications.

Sampling Speed

Another challenge in diffusion models is the slow sampling process, which can require hundreds to thousands of steps to generate a single data sample. This slow sampling speed can be a bottleneck in applications that require real-time generation, limiting the practicality of diffusion models.

To address this challenge, researchers are developing techniques like rectified flow and fast samplers to improve the sampling efficiency of diffusion models. By reducing the number of steps required for sampling, researchers can enhance the speed and practicality of diffusion models, enabling their use in real-time applications.
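One example of a fast sampler is DDIM (Song et al., 2021), which allows a model trained with many steps to be sampled on a short subsequence of them. The sketch below implements the deterministic DDIM update. To keep it runnable without a trained network, it uses a hypothetical toy noise predictor, `toy_eps_model`, that is exact for a dataset consisting of the single point `target`. Both names are assumptions made for illustration.

```python
import numpy as np

def ddim_sample(eps_model, x_T, alpha_bar, timesteps):
    """Deterministic DDIM sampling (eta = 0) over a decreasing list of timesteps."""
    x = x_T
    for i, t in enumerate(timesteps):
        ab_t = alpha_bar[t]
        # After the last listed timestep we step to the clean-data level, alpha_bar = 1.
        ab_prev = alpha_bar[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = eps_model(x, t)
        x0_pred = (x - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t)     # predicted clean sample
        x = np.sqrt(ab_prev) * x0_pred + np.sqrt(1.0 - ab_prev) * eps  # move to the earlier step
    return x

T = 1000
alpha_bar = np.cumprod(1.0 - np.linspace(1e-4, 0.02, T))
target = np.array([1.5, -0.5])

def toy_eps_model(x, t):
    # Exact noise predictor when every training sample equals `target` (toy assumption).
    return (x - np.sqrt(alpha_bar[t]) * target) / np.sqrt(1.0 - alpha_bar[t])

rng = np.random.default_rng(0)
x_T = rng.normal(size=2)
timesteps = list(range(T - 1, -1, -100))   # 10 of the 1000 training timesteps
sample = ddim_sample(toy_eps_model, x_T, alpha_bar, timesteps)
```

With this toy predictor, ten steps are enough to recover `target` from pure noise. With a trained network, using fewer steps trades some sample quality for speed, and the right number of steps is an empirical choice.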

Theoretical Questions

Theoretical questions in deep unsupervised learning using nonequilibrium thermodynamics include ongoing investigations into entropy production bounds and efficiency. These questions highlight the need for a deeper understanding of the underlying principles and mechanisms that drive the performance of diffusion models.

By addressing these theoretical questions, researchers can gain insights into the fundamental limits and potential improvements of diffusion models. This deeper understanding can guide the development of more effective and efficient generative algorithms, advancing the field of deep unsupervised learning.

Future Directions

The future directions of deep unsupervised learning using nonequilibrium thermodynamics include hybrid generative models, domain expansion, and theoretical enhancements. These directions provide a roadmap for advancing the field and enhancing the performance and applicability of diffusion models.

Hybrid Generative Models

Hybrid generative models combine the strengths of diffusion models with other generative techniques, such as GANs and energy-based models (EBMs). By integrating different approaches, researchers can pair the training stability of diffusion models with the fast, single-pass sampling of GANs or the flexibility of EBMs.

For example, combining diffusion models with GANs can lead to faster sampling and improved image quality, while combining them with EBMs can enhance their ability to capture complex dependencies in the data. These hybrid approaches offer a promising direction for advancing the field of generative modeling and improving the performance of diffusion models.

Domain Expansion

Domain expansion involves applying diffusion models to new and emerging domains, such as graph generation, reinforcement learning, and beyond. By extending the applicability of diffusion models to these domains, researchers can address a wider range of challenges and enhance the versatility of generative algorithms.

For example, diffusion models can be used to generate realistic and diverse graphs, aiding in the analysis and understanding of complex networks. In reinforcement learning, diffusion models can enhance the exploration and exploitation of agents, leading to faster learning and better performance.

These domain expansions provide a promising direction for advancing the field of deep unsupervised learning and improving the capabilities of diffusion models.

Theoretical Enhancements

Theoretical enhancements involve further integrating principles from nonequilibrium thermodynamics, such as fluctuation theorems and stochastic thermodynamics, into the framework of diffusion models. These enhancements aim to deepen our understanding of the underlying dynamics and improve the efficiency and effectiveness of generative algorithms.

For instance, incorporating fluctuation theorems can provide insights into the reversibility and irreversibility of the diffusion process, potentially leading to more efficient sampling methods. Stochastic thermodynamics can help model the energy landscapes and transitions in data generation, offering a more nuanced approach to optimizing the training and sampling processes.
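For readers unfamiliar with fluctuation theorems, two standard results from statistical physics are stated below: the Jarzynski equality and the Crooks fluctuation theorem. Here W is the work done on a system driven out of equilibrium, ΔF is the free-energy difference between the end states, β is the inverse temperature, and P_F and P_R are the work distributions of the forward and time-reversed protocols. How best to map these quantities onto a diffusion model is part of the open research this section describes, so the equations are given only as background.

```latex
\left\langle e^{-\beta W} \right\rangle = e^{-\beta \Delta F}
\qquad \text{(Jarzynski equality)}

\frac{P_F(W)}{P_R(-W)} = e^{\beta \left( W - \Delta F \right)}
\qquad \text{(Crooks fluctuation theorem)}
```

Both results relate quantities measured along irreversible, finite-time trajectories to equilibrium properties, which is the kind of link between forward and reverse processes that diffusion models also rely on.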

By advancing the theoretical foundations of diffusion models, researchers can develop more robust and adaptable algorithms that better capture the complexities of real-world data distributions. This theoretical work is crucial for pushing the boundaries of what is possible with deep unsupervised learning and for addressing the current limitations of diffusion models.

Final Thoughts on Deep Unsupervised Learning Using Nonequilibrium Thermodynamics by Alex Nguyen

In conclusion, deep unsupervised learning using nonequilibrium thermodynamics represents a significant advancement in the field of artificial intelligence.

The integration of concepts from physics, such as entropy production and diffusion processes, into machine learning has led to the development of powerful generative models like diffusion models. These models have shown remarkable performance across various domains, including computer vision, natural language processing, and scientific research.

Despite their successes, diffusion models face challenges related to computational cost, sampling speed, and theoretical understanding. Addressing these challenges through innovative techniques and hybrid approaches will be crucial for further enhancing their applicability and efficiency.

The future of deep unsupervised learning lies in expanding the domains where these models can be applied, developing hybrid generative models, and deepening the theoretical understanding of their underlying principles.

As research continues to evolve, the potential of diffusion models and related techniques to revolutionize generative modeling and contribute to a wide range of applications remains vast. By continuing to explore and refine these methods, we can unlock new possibilities in AI and drive forward the next generation of intelligent systems.

Hi, I'm Alex Nguyen. With 10 years of experience in the financial industry, I've had the opportunity to work with a leading Vietnamese securities firm and a global CFD brokerage. I specialize in Stocks, Forex, and CFDs - focusing on algorithmic and automated trading.

I develop Expert Advisor bots on MetaTrader using MQL5, and my expertise in JavaScript and Python enables me to build advanced financial applications. Passionate about fintech, I integrate AI, deep learning, and n8n into trading strategies, merging traditional finance with modern technology.
