In high-performance .NET applications, memory allocation is a double-edged sword. While the Garbage Collector (GC) makes memory management easier, careless or excessive allocations can lead to severe performance issues.

This guide explores how to reduce GC pressure by using efficient memory patterns, including the use of ArrayPool<T>, structs, and design best practices. You'll also learn how to measure GC impact and avoid common pitfalls.

🔍 What Is GC Pressure?

GC pressure refers to the load placed on the .NET Garbage Collector due to memory allocations, particularly of short-lived or large objects. If too many objects are created and discarded quickly, or if large allocations occur frequently, the GC is forced to run more often and for longer durations.

This can lead to:

- High CPU usage.

- Application pauses (“stop-the-world” events).

- Latency spikes in APIs and services.

- Increased memory usage due to heap fragmentation.
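One rough way to see this effect is to count Gen 0 collections around a burst of short-lived allocations. The sketch below is illustrative only: `GcPressureDemo` and `CountGen0Collections` are hypothetical names, and the count depends on the runtime, the GC mode, and the machine.

```csharp
using System;

public static class GcPressureDemo
{
    // Storing the latest buffer in a static field forces a real heap allocation.
    private static byte[] _sink = Array.Empty<byte>();

    // Allocates `iterations` short-lived 1 KB buffers and returns how many
    // Gen 0 collections occurred while doing so.
    public static int CountGen0Collections(int iterations)
    {
        int before = GC.CollectionCount(0);

        for (int i = 0; i < iterations; i++)
        {
            // The previous buffer becomes garbage as soon as it is overwritten.
            _sink = new byte[1024];
        }

        return GC.CollectionCount(0) - before;
    }
}
```

Calling `GcPressureDemo.CountGen0Collections(1_000_000)` allocates roughly 1 GB in total, which typically shows up as a non-trivial number of Gen 0 collections.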

🧪 Real-World Example (Fictional)

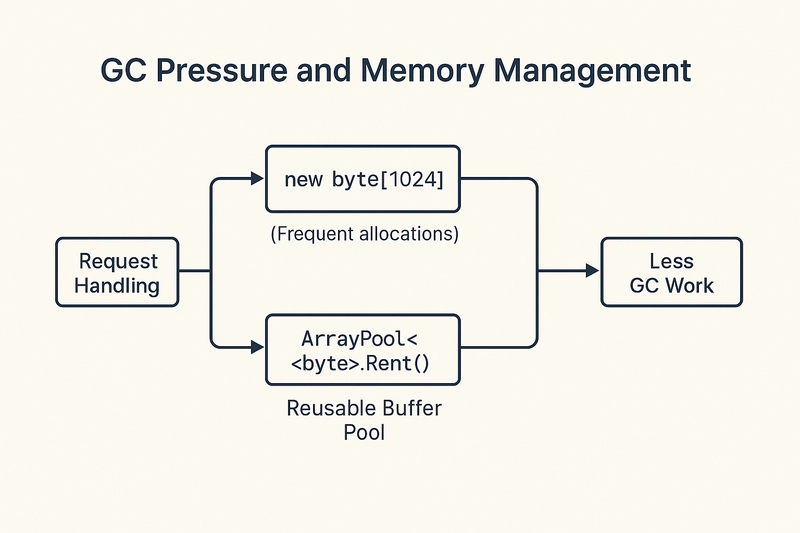

A fintech company built a high-throughput .NET 8 API that handled thousands of real-time market data events per second. Despite fast endpoints, latency spikes started appearing under load.

Investigation revealed excessive use of new byte[1024] buffers on each request. These temporary allocations were quickly discarded but filled up the Gen 0 heap rapidly, triggering frequent GC cycles.

By switching to ArrayPool<byte>.Shared, the team reduced GC collections by over 60%, smoothed out latency, and dropped CPU usage by nearly 20%.

📊 GC-Friendly Coding: Before vs After

| ❌ Inefficient Pattern | ✅ Improved Alternative |
|---|---|
| `new byte[1024]` in every request | `ArrayPool<byte>.Shared.Rent(1024)` |
| Frequent short-lived objects | Object pooling or reuse |
| Classes for small, immutable data | Use `readonly struct` |
| LINQ-heavy processing with `ToList()` | Use `foreach` with lazy enumeration |
| Capturing variables in lambdas | Avoid closures in performance-critical paths |
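As a rough illustration of the `ToList()` row, the sketch below computes the same sum twice: once through an intermediate list and once by streaming. `LazyEnumerationDemo` and its methods are hypothetical names. Note that `foreach` over an `IEnumerable<int>` may still allocate an enumerator, so the saving here is the intermediate list, not every allocation.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class LazyEnumerationDemo
{
    // Materialises an intermediate List<int> before summing.
    public static long SumEvensWithToList(IEnumerable<int> source)
    {
        List<int> evens = source.Where(x => x % 2 == 0).ToList(); // extra list allocation
        long total = 0;
        foreach (int x in evens)
        {
            total += x;
        }
        return total;
    }

    // Streams through the source without building an intermediate collection.
    public static long SumEvensLazily(IEnumerable<int> source)
    {
        long total = 0;
        foreach (int x in source)
        {
            if (x % 2 == 0)
            {
                total += x;
            }
        }
        return total;
    }
}
```

Both methods return the same result; only the second avoids the temporary list.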

🧠 GC Pressure and Memory Management

Visualising the difference between direct allocations and pooled memory:

🧱 Use Structs (Value Types) When Appropriate

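Value types in C# (structs) are stored inline: on the stack for locals, or inside the containing object or array for fields and elements. They need no separate heap allocation and the GC does not track them as individual objects, provided they are not boxed. They work best when they are small and immutable.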

✅ When to Use Structs

- You need small, frequently used objects.

- You want to avoid boxing/unboxing.

- You care about CPU cache performance.

⚠️ When Not to Use

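- The struct is large (a common guideline is more than 16 bytes) and is frequently passed by value; passing it with in, ref, or ref readonly avoids the copy.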

- It’s mutated often (immutability is safer).

- It's used polymorphically (structs can’t inherit).

```csharp
public readonly struct Coordinates
{
    public int X { get; }
    public int Y { get; }

    public Coordinates(int x, int y) => (X, Y) = (x, y);
}
```
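For larger structs, copy cost is usually the main concern rather than size itself. A minimal sketch, assuming a hypothetical 32-byte `Bounds` struct and a `BoundsMath` helper, shows how the `in` modifier passes a read-only reference instead of a copy:

```csharp
using System;

// Four doubles: 32 bytes, above the common 16-byte guideline.
public readonly struct Bounds
{
    public double MinX { get; }
    public double MinY { get; }
    public double MaxX { get; }
    public double MaxY { get; }

    public Bounds(double minX, double minY, double maxX, double maxY)
        => (MinX, MinY, MaxX, MaxY) = (minX, minY, maxX, maxY);
}

public static class BoundsMath
{
    // `in` passes a read-only reference, so the struct is not copied on each call.
    public static double Area(in Bounds b) => (b.MaxX - b.MinX) * (b.MaxY - b.MinY);
}
```

Because `Bounds` is a `readonly struct`, the compiler does not need defensive copies when its members are accessed through the `in` parameter.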

♻️ Use ArrayPool<T> for Buffer Reuse

Avoid allocating a new array every time. Instead:

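```csharp
using System.Buffers;

var pool = ArrayPool<byte>.Shared;
var buffer = pool.Rent(1024); // may return an array longer than 1024
try
{
    // Use buffer
}
finally
{
    // Always return the buffer, even if an exception is thrown
    pool.Return(buffer);
}
```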

This drastically reduces GC pressure in buffer-heavy operations (web APIs, serialisation, etc.).
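One detail worth keeping in mind is that `Rent` may return an array longer than requested, so code should work on a slice of the expected length. The following is a minimal sketch; `PooledBufferDemo` and `SumBytes` are hypothetical names used only for illustration.

```csharp
using System;
using System.Buffers;

public static class PooledBufferDemo
{
    // Copies `payload` into a rented buffer, sums its bytes,
    // and returns the buffer to the shared pool.
    public static int SumBytes(ReadOnlySpan<byte> payload)
    {
        byte[] rented = ArrayPool<byte>.Shared.Rent(payload.Length);
        try
        {
            // Only the first payload.Length bytes belong to this operation.
            Span<byte> buffer = rented.AsSpan(0, payload.Length);
            payload.CopyTo(buffer);

            int sum = 0;
            foreach (byte b in buffer)
            {
                sum += b;
            }
            return sum;
        }
        finally
        {
            ArrayPool<byte>.Shared.Return(rented);
        }
    }
}
```

A returned buffer is not cleared by default, so stale data from earlier use can still be present beyond the slice.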

🔥 Avoid Allocations in Hot Paths

Allocating inside tight loops or critical code paths hurts performance.

Avoid:

- LINQ + `ToList()` in real-time code

- `string.Concat` in loops

- Closures/lambdas that capture local variables

Prefer:

- `foreach`

- `Span<T>` when possible

- Explicit pooling

```csharp
foreach (var item in collection)
{
    Process(item);
}
```
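To illustrate the `Span<T>` suggestion, here is a small sketch that sums comma-separated integers by slicing the input instead of calling `Split` or `Substring`. `SpanParsingDemo` and `SumCsv` are hypothetical names, and the input is assumed to be well formed, with no empty fields.

```csharp
using System;

public static class SpanParsingDemo
{
    // Sums comma-separated integers without allocating substrings
    // or the string[] that Split would create.
    public static int SumCsv(ReadOnlySpan<char> line)
    {
        int total = 0;
        while (!line.IsEmpty)
        {
            int comma = line.IndexOf(',');
            ReadOnlySpan<char> field = comma < 0 ? line : line.Slice(0, comma);
            total += int.Parse(field);

            // Move past the comma, or stop when no comma remains.
            line = comma < 0 ? ReadOnlySpan<char>.Empty : line.Slice(comma + 1);
        }
        return total;
    }
}
```

A string converts implicitly to `ReadOnlySpan<char>`, so the method can be called as `SpanParsingDemo.SumCsv("10,20,30")`.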

🧩 Design Patterns That Reduce Allocations

- Object Pooling: For expensive objects (parsers, buffers).

- Flyweight: Share immutable objects instead of duplicating.

- Factories: Centralised creation allows reuse or pooling.

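```csharp
using Microsoft.Extensions.ObjectPool;

// Assumes MyParserPolicy implements IPooledObjectPolicy<MyParser>
ObjectPool<MyParser> pool = new DefaultObjectPool<MyParser>(new MyParserPolicy());
var parser = pool.Get();
try
{
    parser.Parse(data);
}
finally
{
    // Hand the parser back so the next caller can reuse it
    pool.Return(parser);
}
```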
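To show the idea behind object pooling without any package dependency, here is a deliberately simplified pool. `SimplePool<T>` is a hypothetical class written for illustration; it is not the `Microsoft.Extensions.ObjectPool` implementation and it has no size limit.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical minimal pool, for illustration only.
public sealed class SimplePool<T> where T : class
{
    private readonly ConcurrentBag<T> _items = new ConcurrentBag<T>();
    private readonly Func<T> _factory;
    private readonly Action<T> _reset;

    public SimplePool(Func<T> factory, Action<T> reset)
    {
        _factory = factory;
        _reset = reset;
    }

    // Reuse an idle instance if one exists; otherwise create a new one.
    public T Get() => _items.TryTake(out var item) ? item : _factory();

    // Reset the instance's state before making it available again.
    public void Return(T item)
    {
        _reset(item);
        _items.Add(item);
    }
}
```

For example, `new SimplePool<StringBuilder>(() => new StringBuilder(), sb => sb.Clear())` hands out the same `StringBuilder` again once it has been returned. A production pool would normally cap how many idle instances it retains.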

📏 Measuring GC Pressure

Use BenchmarkDotNet or dotnet-counters to measure memory usage and GC stats:

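```csharp
using System.Buffers;
using BenchmarkDotNet.Attributes;

[MemoryDiagnoser] // reports allocated bytes and GC counts per operation
public class GCPressureTests
{
    [Benchmark]
    public byte[] WithArrayAllocation()
    {
        // Returning the array keeps the allocation from being optimised away
        return new byte[1024];
    }

    [Benchmark]
    public void WithArrayPool()
    {
        var pool = ArrayPool<byte>.Shared;
        var buffer = pool.Rent(1024);
        pool.Return(buffer);
    }
}
```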

Other tools:

- `dotnet-counters monitor -p <PID>`

- `dotnet-trace`

- PerfView
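For a quick in-process check, without a benchmark harness, `GC.GetAllocatedBytesForCurrentThread()` can be sampled before and after a piece of code. This is a minimal sketch; `AllocationProbe` and `MeasureAllocatedBytes` are hypothetical names, and the result covers only allocations made on the calling thread.

```csharp
using System;

public static class AllocationProbe
{
    // Returns the number of bytes the current thread allocated while running `action`.
    public static long MeasureAllocatedBytes(Action action)
    {
        long before = GC.GetAllocatedBytesForCurrentThread();
        action();
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }
}
```

Wrapping a call such as `() => sink = new byte[1024]` should report slightly more than 1024 bytes, because the array carries an object header and a length field. This suits spot checks; BenchmarkDotNet remains the better choice for comparisons.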

✅ Conclusion

Reducing GC pressure is not just about micro-optimisations — it’s about writing efficient, stable software. With small, deliberate changes (structs, pooling, pattern-based reuse), you can prevent many hidden performance issues.

Mastering memory behaviour in .NET will help you build systems that scale, respond faster, and cost less to run in production.

🔗 Resources

- ArrayPool Documentation

- BenchmarkDotNet

- Understanding .NET GC

- Performance Best Practices in .NET

- GitHub Code Examples

Top comments (2)

Not sure I agree with the statement that structs should not be used for values > 16bytes

Large structs allow for

You've hit on a really important point, and I appreciate you bringing it up! It's true that the advice to avoid structs larger than 16 bytes is a common guideline, often rooted in concerns about copying costs and pass-by-value semantics within the CLR. If you're constantly passing large structs by value or using them carelessly in collections, you can definitely take a performance hit.

However, as you rightly pointed out, that's not the whole story. Data locality and cache efficiency are huge benefits of using structs, especially in performance-critical code or tight loops. And by using in, ref, or ref readonly to pass structs by reference, you can completely mitigate the copy overhead. This gives you the best of both worlds: the value-type semantics you want with the efficiency of reference-like passing.

So, you're absolutely right: the key isn't to outright avoid large structs. Instead, it's about understanding their behavior and using them strategically when their characteristics align with your performance goals. I'll make sure to clarify this in the article, shifting it from a strict rule to a more nuanced discussion about intelligent struct usage. Thanks again for the excellent input! 😊